Matilde Alves | 6 July 2023

Navigate the challenges and risks of complying with the EU AI Act’s requirements for high-risk AI use cases in the financial services sector. This guide, written for financial service providers, explains how the EU AI Act treats high-risk AI in financial services, the compliance requirements for specific use cases, and how Deeploy supports financial service providers in meeting these obligations. Build trust in the responsible use of AI while staying competitive in the financial services industry.

Learn how to comply with the EU AI Act in your financial institution

The EU AI Act is a groundbreaking, comprehensive legal framework that will shape and regulate the use and deployment of artificial intelligence in the European Union. It aims to ensure the safe and ethical use of AI while fostering innovation and protecting fundamental human rights.

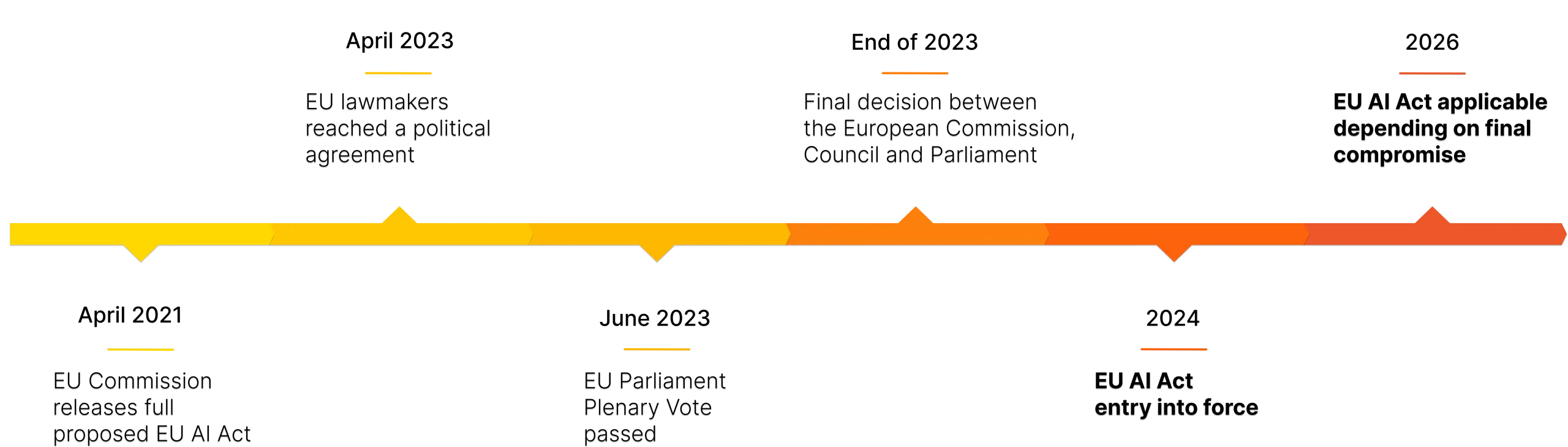

Timeline of the EU AI Act

The AI Act categorizes AI systems into four risk tiers and imposes regulatory requirements accordingly to limit the potential impact on individuals and society.

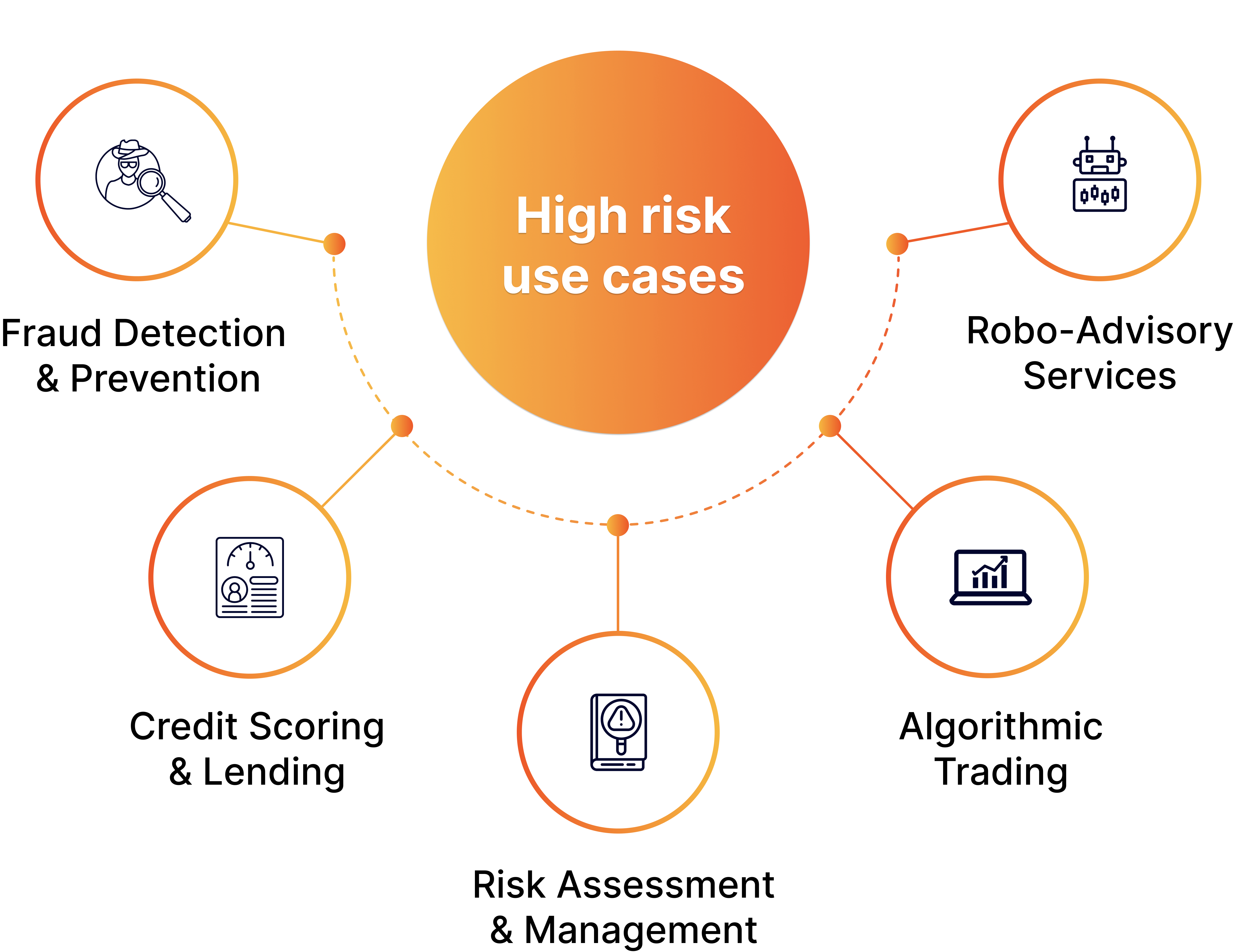

It specifically targets high-risk AI systems, which include a list of high-risk use cases in the financial service industry. Several applications are likely to be affected due to their significant impact on users and organizations.

The Pyramid of Risks

While the EU AI Act explicitly identifies credit scoring as a high-risk use case, the landscape of high-risk AI extends well beyond this domain. Many other applications also fall under this category, subjecting both AI providers and users to stringent regulation. Compliance is mandatory both before and after these systems are placed on the market or deployed, and non-compliance can lead to substantial penalties.

We understand the intricacies of high-risk AI use cases and have identified five applications that align with the classification outlined in the EU AI Act. In our white paper, you will gain insights into driving your organization’s AI strategy toward responsible innovation.

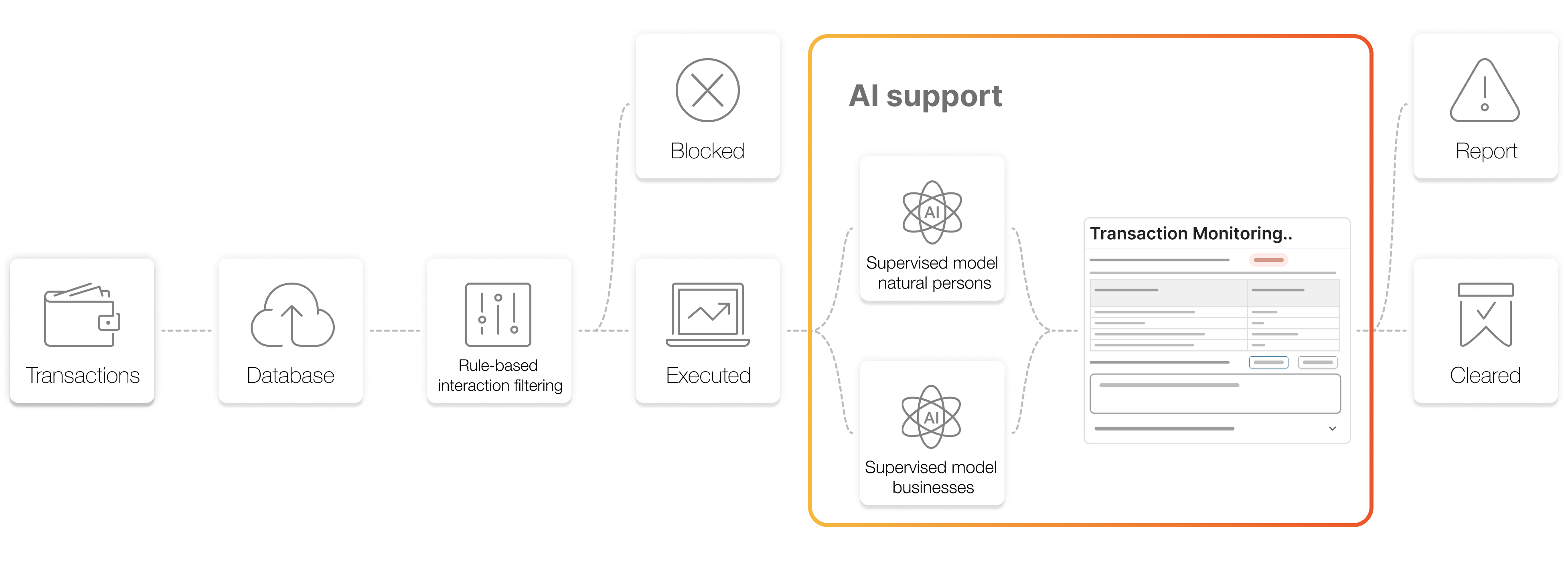

Banks like bunq are already leveraging AI to improve the efficiency of their transaction monitoring process. These capabilities provide financial institutions with a powerful tool for detecting fraud, supporting AML efforts, mitigating potential losses, and bolstering customer trust. However, it is crucial to recognize that transaction monitoring, despite its benefits, carries a substantial risk of financial harm. This is why the EU AI Act categorizes it as a high-risk use case. The potential for financial harm arises from the possibility of false positives and false negatives, which can disrupt legitimate transactions or fail to detect actual fraudulent activities, leading to financial losses for both the bank and its customers.

“As one of the most impacted industries, the financial service sector must adapt to these new regulations to remain compliant and competitive.”

Jord Goudsmit, Digital Ethics, Considerati

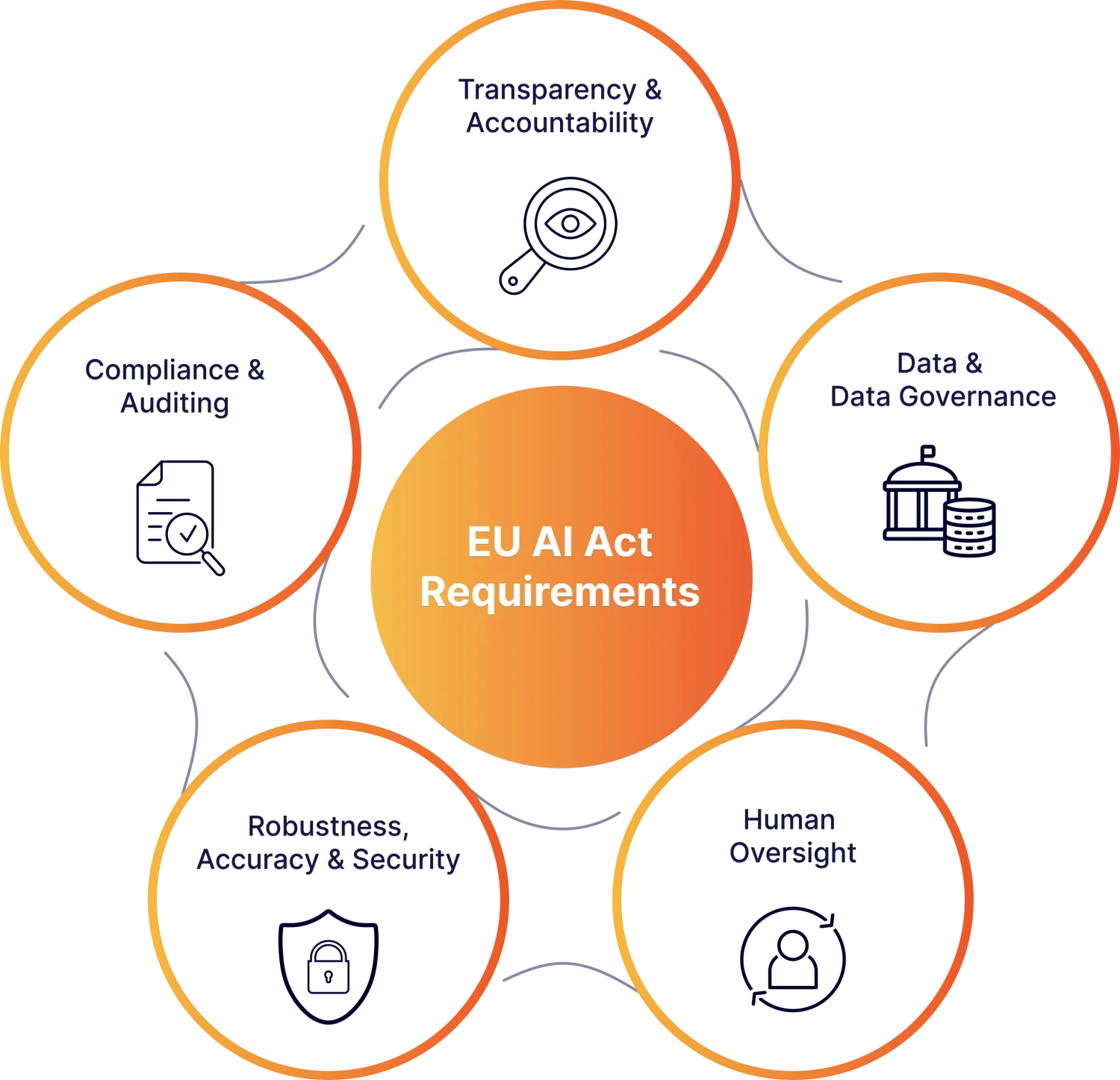

High-risk financial use cases can be beneficial, but they also pose significant challenges and risks that must be addressed to maintain trust, ensure fairness, and protect users. Following the key requirements ensures the responsible development and deployment of AI in your company. In our white paper, financial service providers can explore these requirements and how they aim to mitigate the risks associated with AI in financial technologies.