Trustworthy AI for Pensions

AI in pension management can boost efficiency, improve decision-making, and optimize investment strategies across a variety of use cases. However, moving these models into production is often hindered by concerns about control, reliability, trust, and regulatory compliance. Addressing these challenges requires AI systems that are manageable, transparent, accountable, and compliant with regulatory requirements.

Learn more about Deeploy and the features that leading pension management organizations, such as PGGM and Brand New Day, have used to stay in control of their AI in production and maintain trust and integrity in their pension management processes.

Ensuring control over running models

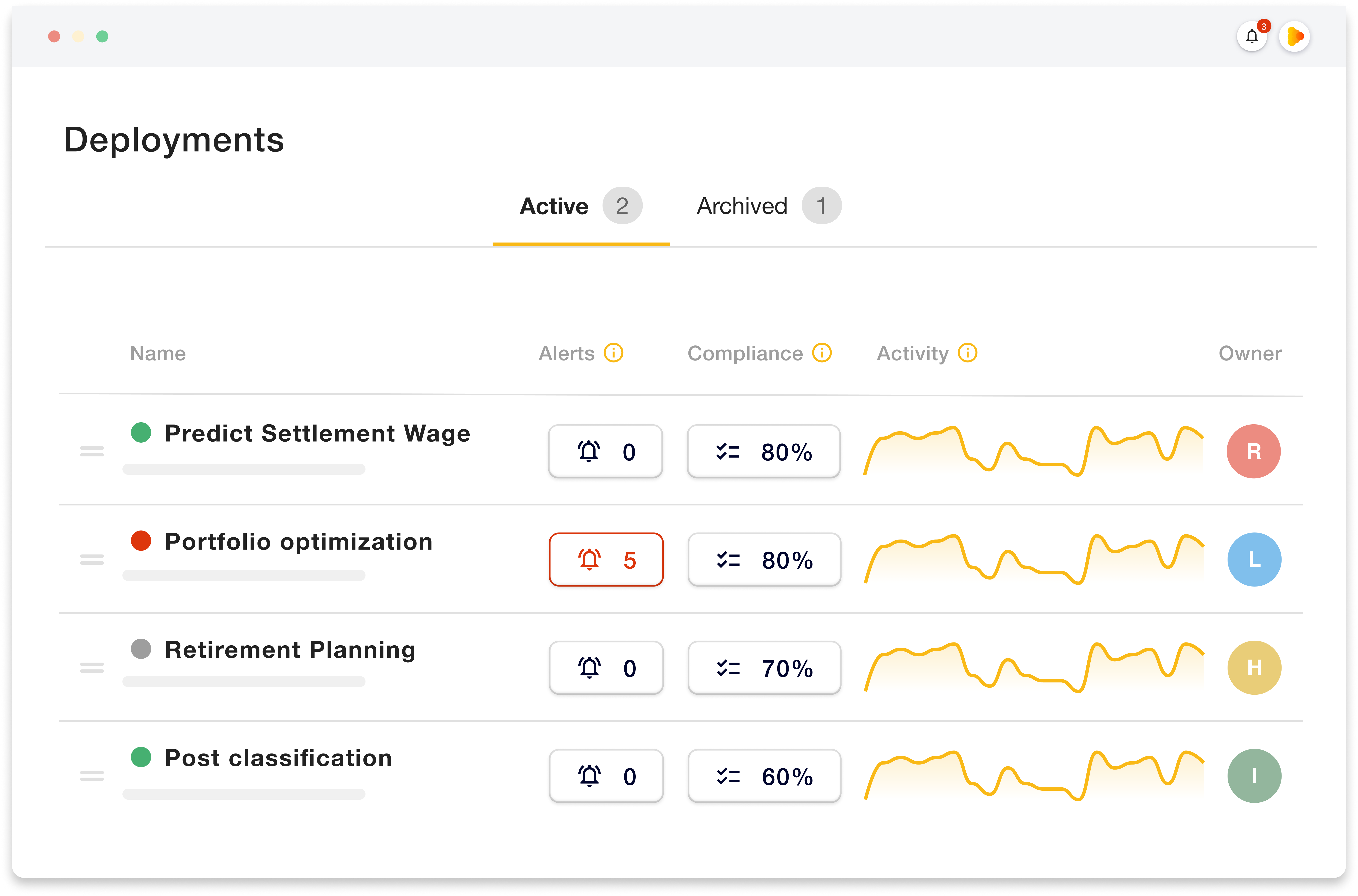

AI for pension organizations covers a wide range of use cases, including risk assessment, portfolio optimization, pension schemes, predictive analytics, fraud detection, settlement wage prediction, and administration optimization. However, this variety of use cases can cause organizations to lose oversight of their running models. This makes tasks such as monitoring performance and ensuring compliance difficult and inefficient for teams, leading to higher costs and increased governance risks.

Centralize models in one management system

Ideally, pension managers should centralize the deployment of all models in a single AI management system, where all relevant AI applications are included, automatically kept up to date, and monitored for performance and compliance. Deeploy enables teams to deploy, serve, monitor, and manage AI models in one platform, saving time and resources.

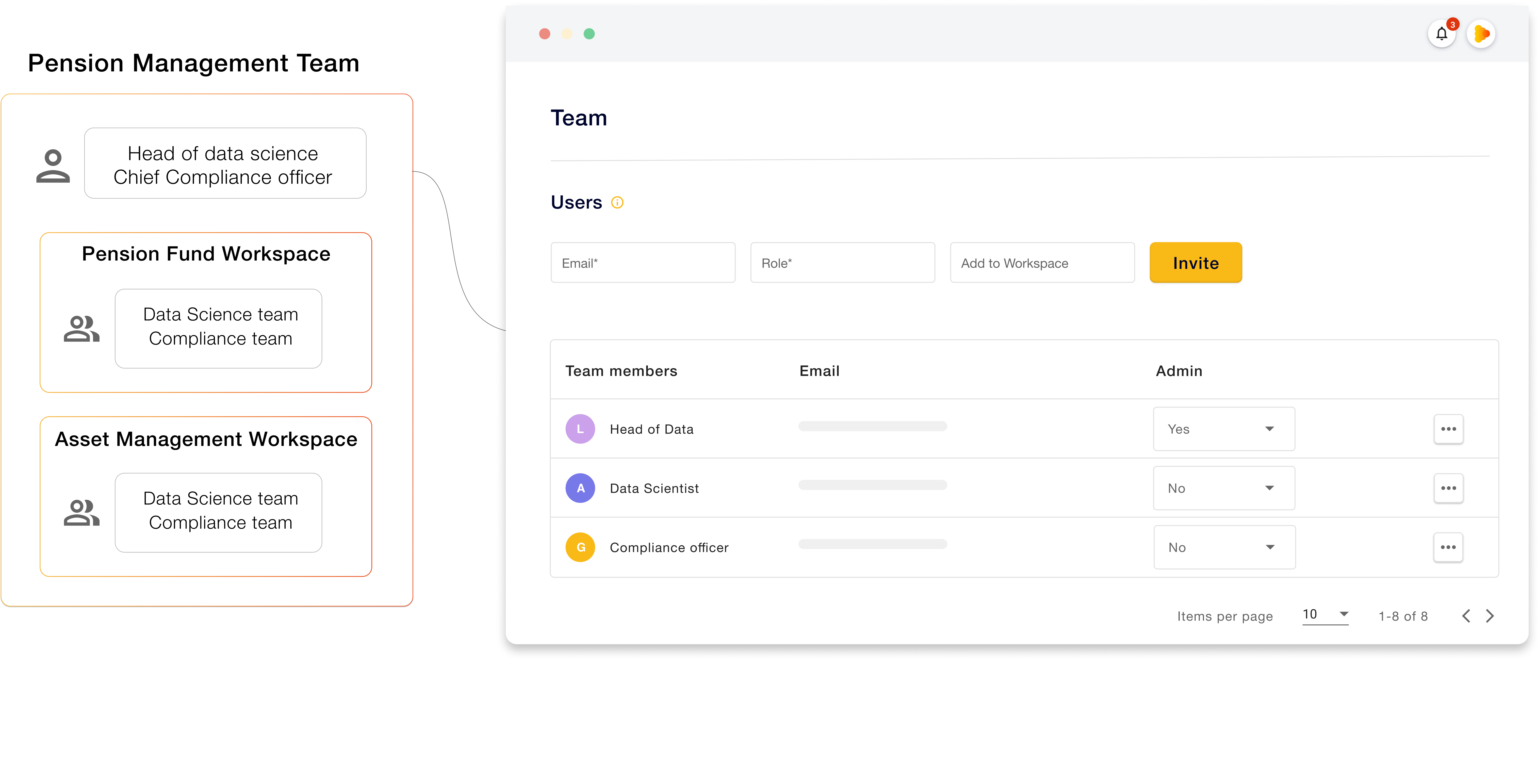

Moreover, a clear distinction between teams and workspaces helps ensure the right oversight of different AI applications within the same organization. Teams can be created to separate departments and types of applications, while workspaces can be used to restrict model access at a more granular level.

Establishing trust with customers & stakeholders

Pension management is a high-risk industry, so stakeholders and customers need clear explanations for investment decisions and risk assessments. Doubts about model performance, combined with a lack of interpretability and transparency in AI decisions, undermine trust and credibility.

Closely monitor model performance

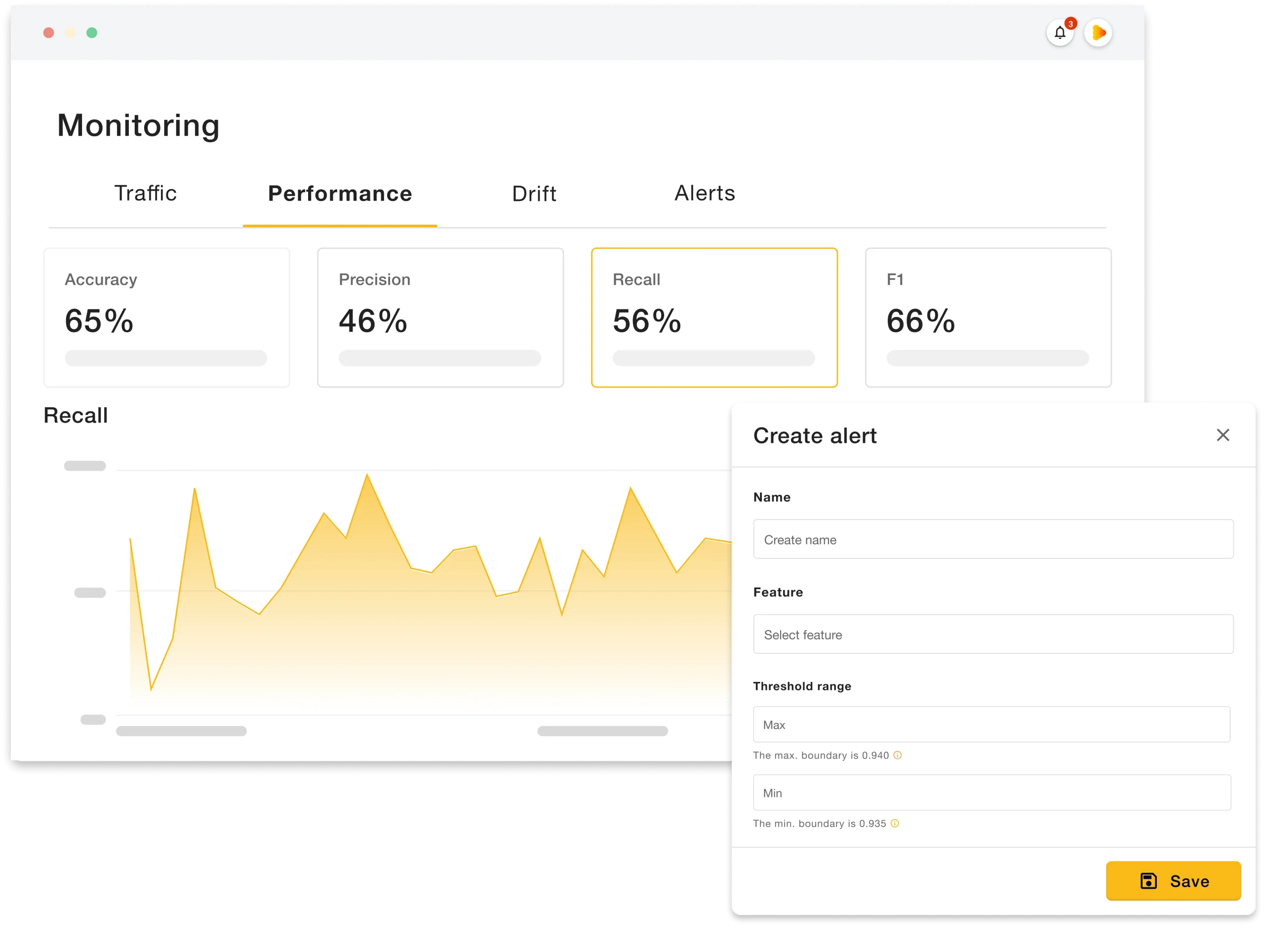

AI applications therefore need to be robust and trustworthy. To achieve this, models must be monitored over time for traffic, errors, performance, and drift, among other metrics.

Deeploy combines all these capabilities in one AI management system, simplifying the work of data teams. Teams can also set alerts on any monitoring metric, which enables swift action in case of model degradation.
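To make the idea of a drift metric with an alert concrete, here is a minimal sketch of one common drift measure, the population stability index (PSI), compared against an alert threshold. This is an illustration under assumptions, not Deeploy's implementation: the post does not say which drift metrics Deeploy computes, and the function names `psi` and `drift_alert` as well as the 0.2 threshold (a frequently quoted rule of thumb) are hypothetical.

```python
import math
from typing import Sequence, Tuple


def psi(expected: Sequence[float], actual: Sequence[float], bins: int = 10) -> float:
    """Population stability index between a reference sample and a live sample."""
    lo = min(min(expected), min(actual))
    hi = max(max(expected), max(actual))
    width = (hi - lo) / bins or 1.0  # guard against all-identical values

    def shares(values: Sequence[float]) -> list:
        counts = [0] * bins
        for v in values:
            # Clamp so the maximum value falls into the last bin.
            idx = min(int((v - lo) / width), bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(values), 1e-6) for c in counts]

    e, a = shares(expected), shares(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


def drift_alert(expected: Sequence[float], actual: Sequence[float],
                threshold: float = 0.2) -> Tuple[float, bool]:
    """Return the PSI score and whether it exceeds the (hypothetical) alert threshold."""
    score = psi(expected, actual)
    return score, score > threshold


if __name__ == "__main__":
    reference = [i / 100 for i in range(100)]      # training-time feature values
    live = [0.5 + i / 200 for i in range(100)]     # shifted production values
    score, alert = drift_alert(reference, live)
    print(f"PSI={score:.2f}, alert={alert}")
```

In practice a reference sample from training data would be compared with a recent window of production inputs per feature, and the alert would notify the model's team rather than print.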

Ensure transparency & explainability

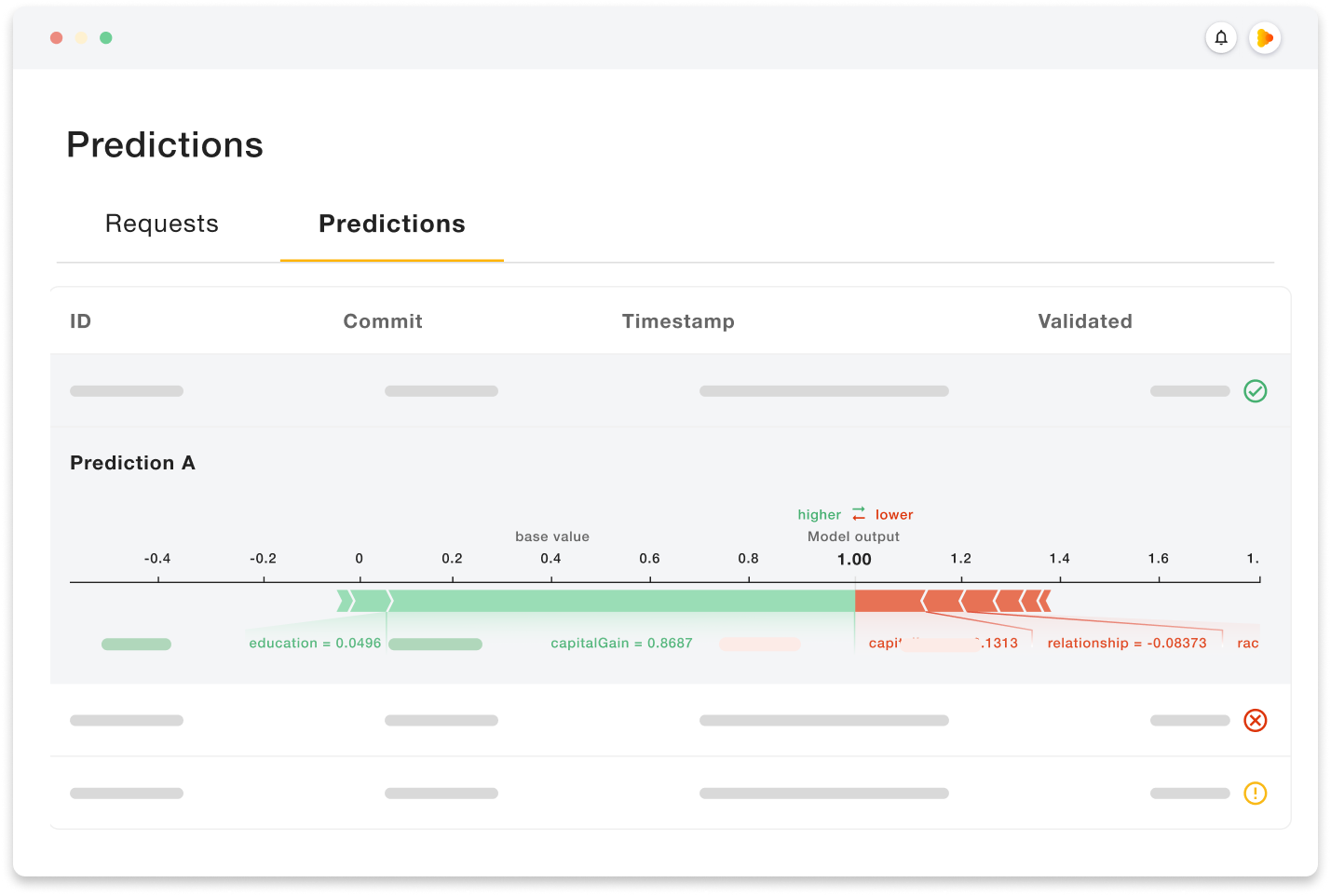

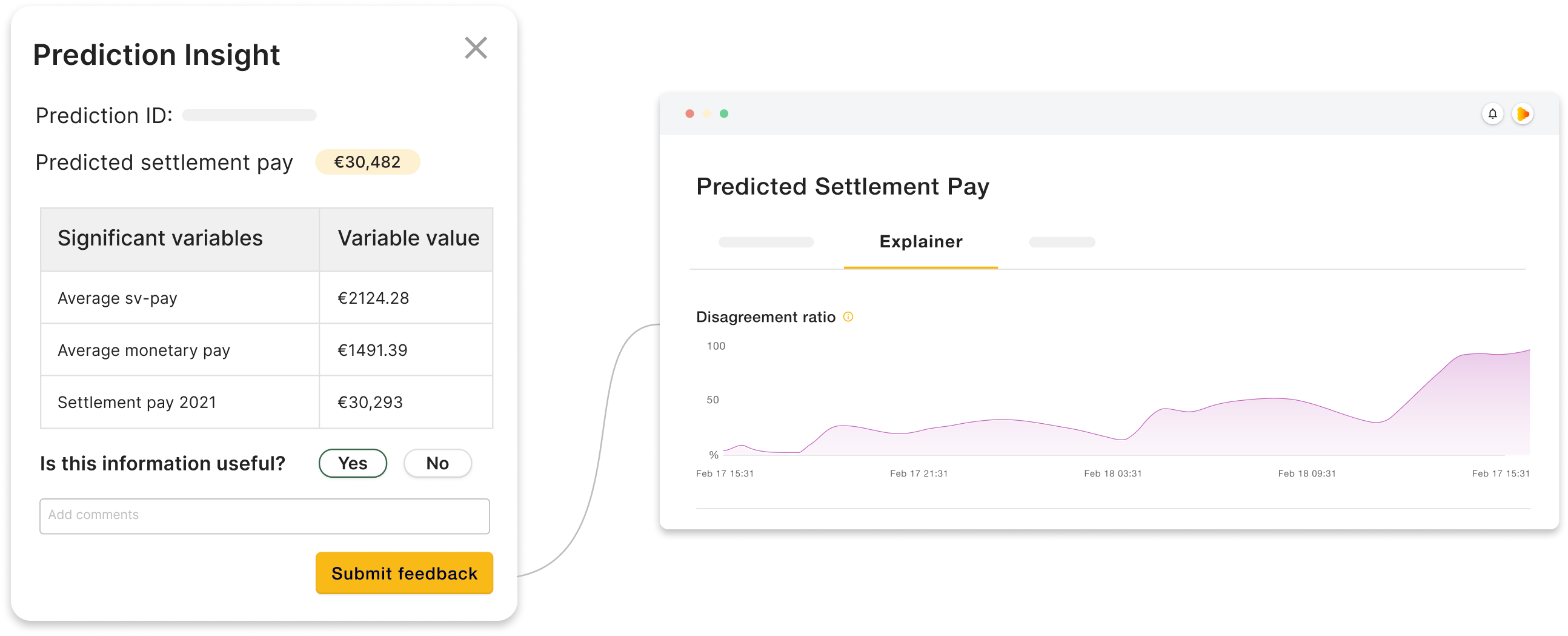

Pension providers must watch for and address ethical concerns to ensure fair treatment of stakeholders and customers. Being able to explain how and why AI is applied in business processes, and how these models arrive at their decisions, reduces this risk and increases confidence & trust in AI decisions.

Deeploy is tailored for explainability. Data teams can access a variety of explainability techniques & deployment options that cover a range of model frameworks. All predictions & explanations can also be traced back and reproduced, ensuring full transparency and helping to pinpoint any issues.
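As a rough illustration of what makes a prediction traceable and reproducible, the sketch below stores the model version, a hash of the inputs, the output, and its explanation for every prediction, and later re-runs the same model version to check the result. The record layout and the names `PredictionRecord`, `record_prediction`, and `reproduce` are hypothetical and are not Deeploy's API; the sketch also assumes deterministic models kept in a registry keyed by version.

```python
import hashlib
import json
from dataclasses import dataclass
from datetime import datetime, timezone
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class PredictionRecord:
    """Hypothetical audit record kept for every prediction."""
    model_version: str
    input_hash: str
    inputs: Dict[str, Any]
    prediction: Any
    explanation: Dict[str, float]  # e.g. feature attributions
    timestamp: str


def hash_inputs(inputs: Dict[str, Any]) -> str:
    # Canonical JSON (sorted keys) so equal inputs always give the same hash.
    canonical = json.dumps(inputs, sort_keys=True)
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_prediction(model_version: str, inputs: Dict[str, Any],
                      prediction: Any, explanation: Dict[str, float]) -> PredictionRecord:
    return PredictionRecord(
        model_version=model_version,
        input_hash=hash_inputs(inputs),
        inputs=dict(inputs),
        prediction=prediction,
        explanation=dict(explanation),
        timestamp=datetime.now(timezone.utc).isoformat(),
    )


def reproduce(record: PredictionRecord,
              models: Dict[str, Callable[[Dict[str, Any]], Any]]) -> bool:
    # Refuse to reproduce if the stored inputs no longer match their hash.
    if hash_inputs(record.inputs) != record.input_hash:
        raise ValueError("stored inputs do not match the recorded hash")
    # Re-run the exact model version that made the original prediction.
    model = models[record.model_version]
    return model(record.inputs) == record.prediction
```

Keying the registry by model version is the design choice that matters here: without it, a retrained model would silently replace the one that actually produced a past decision.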

Continuously improve accuracy

Accuracy is vital for establishing trust in AI models. Here, it is beneficial to collect feedback from human experts on model predictions and use it to evaluate a model's correctness and usefulness.

To support this, each model in Deeploy has an endpoint for collecting feedback from experts or end users. Feedback is requested and recorded per prediction, allowing for comprehensive evaluation.

The accuracy of a model can be evaluated by comparing predicted outcomes to real-world observations provided by feedback. This helps teams determine model effectiveness and identify areas for improvement.
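A minimal sketch of this comparison for a classification use case such as fraud detection: predictions that have received expert feedback are scored, and those still awaiting review are skipped. The `FeedbackItem` structure and the `feedback_accuracy` function are hypothetical illustrations, not the format used by Deeploy's feedback endpoint.

```python
from dataclasses import dataclass
from typing import Iterable, Optional, Tuple


@dataclass
class FeedbackItem:
    """Hypothetical per-prediction feedback entry."""
    prediction_id: str
    predicted: str
    actual: Optional[str]  # outcome reported by an expert; None if not yet reviewed


def feedback_accuracy(items: Iterable[FeedbackItem]) -> Tuple[float, int]:
    """Return (accuracy, number of reviewed predictions)."""
    reviewed = [item for item in items if item.actual is not None]
    if not reviewed:
        raise ValueError("no feedback has been collected yet")
    correct = sum(item.predicted == item.actual for item in reviewed)
    return correct / len(reviewed), len(reviewed)


if __name__ == "__main__":
    items = [
        FeedbackItem("p1", "fraud", "fraud"),
        FeedbackItem("p2", "no_fraud", "fraud"),
        FeedbackItem("p3", "no_fraud", "no_fraud"),
        FeedbackItem("p4", "no_fraud", None),  # awaiting review
    ]
    accuracy, n = feedback_accuracy(items)
    print(f"accuracy {accuracy:.2f} over {n} reviewed predictions")  # accuracy 0.67 over 3 reviewed predictions
```

Reporting the number of reviewed predictions alongside the score makes it visible when an accuracy figure rests on only a handful of expert reviews.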

Complying with legal requirements

AI in pension management brings regulatory compliance challenges, particularly concerning data privacy, transparency, and fairness. Pension managers must ensure that AI systems follow relevant regulations and standards, such as the EU AI Act, to avoid legal and reputational risks.

Proactively ensure human oversight & ownership

Ensuring human oversight & clear ownership of running models leads to better AI governance. In Deeploy, models can be assigned owners, who are responsible for maintaining control over their ongoing operation.

Moreover, the above-mentioned monitoring & transparency features ensure that all facets of a model are visible to relevant stakeholders, facilitating proactive governance actions.

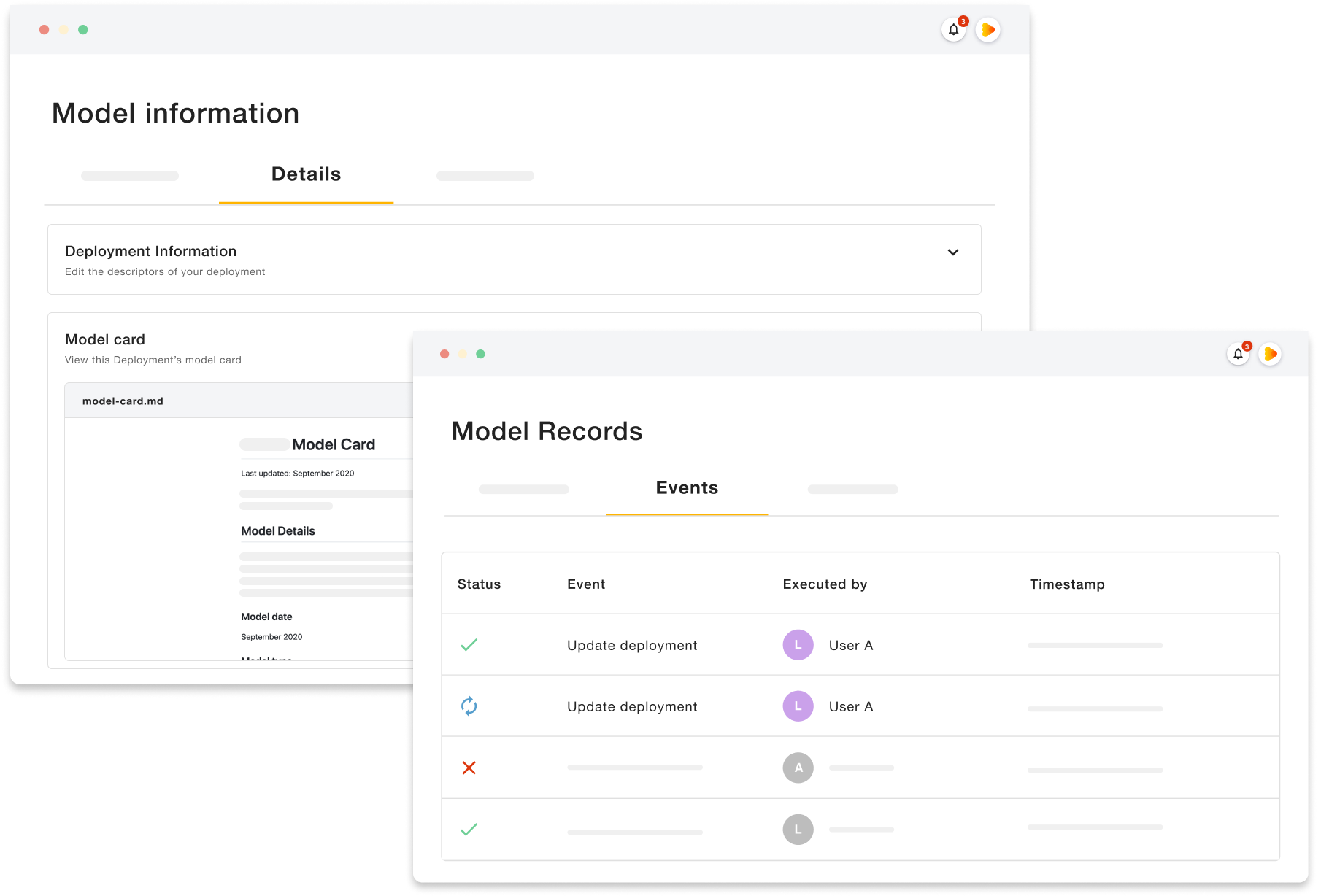

Keep model documentation & records readily available

Model documentation & record keeping are among the main regulatory requirements for AI. Organizations must ensure that all information about each AI application is readily available; this includes model cards, deployment details, and audit trails for all predictions.

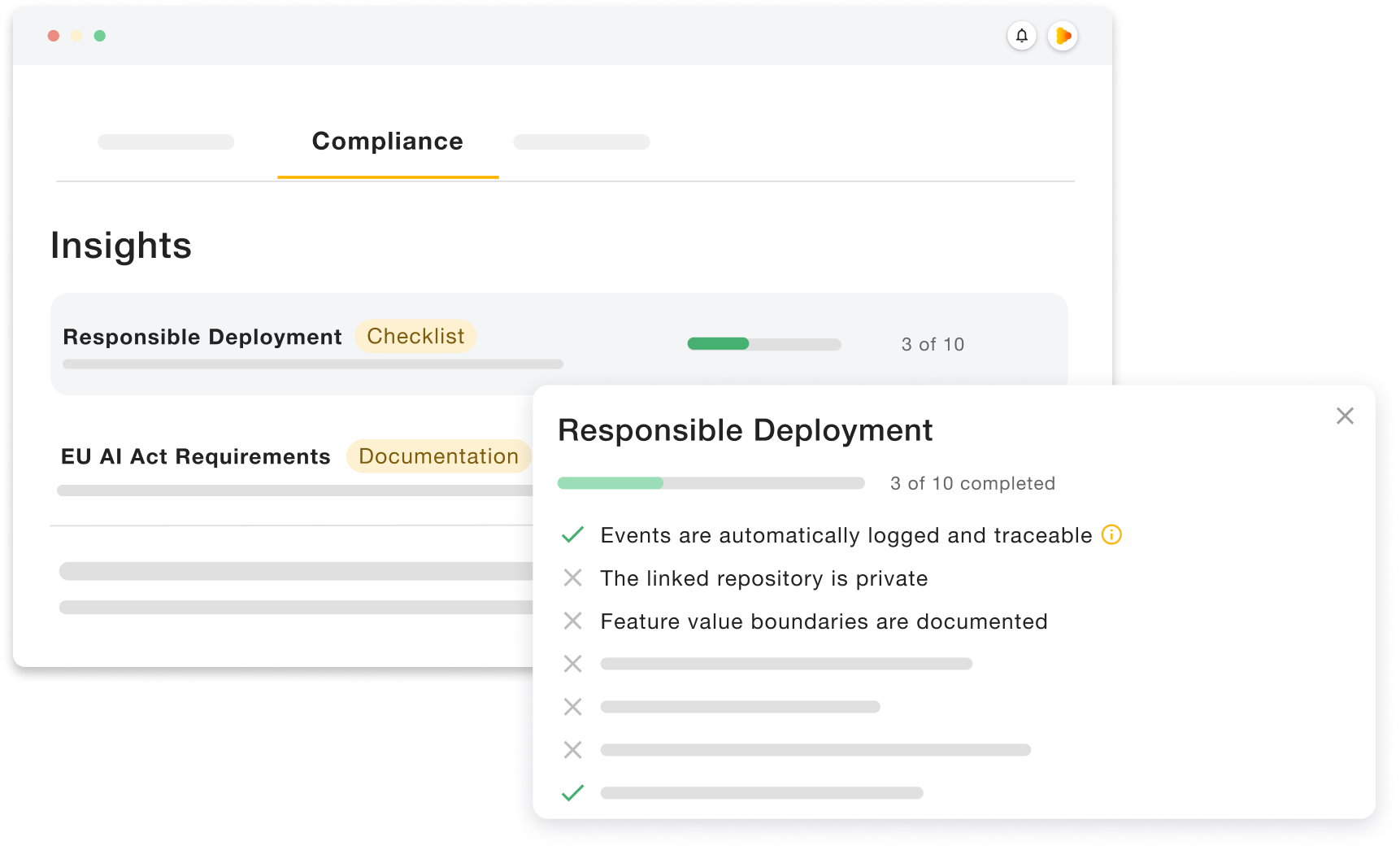

Stay on top of compliance processes

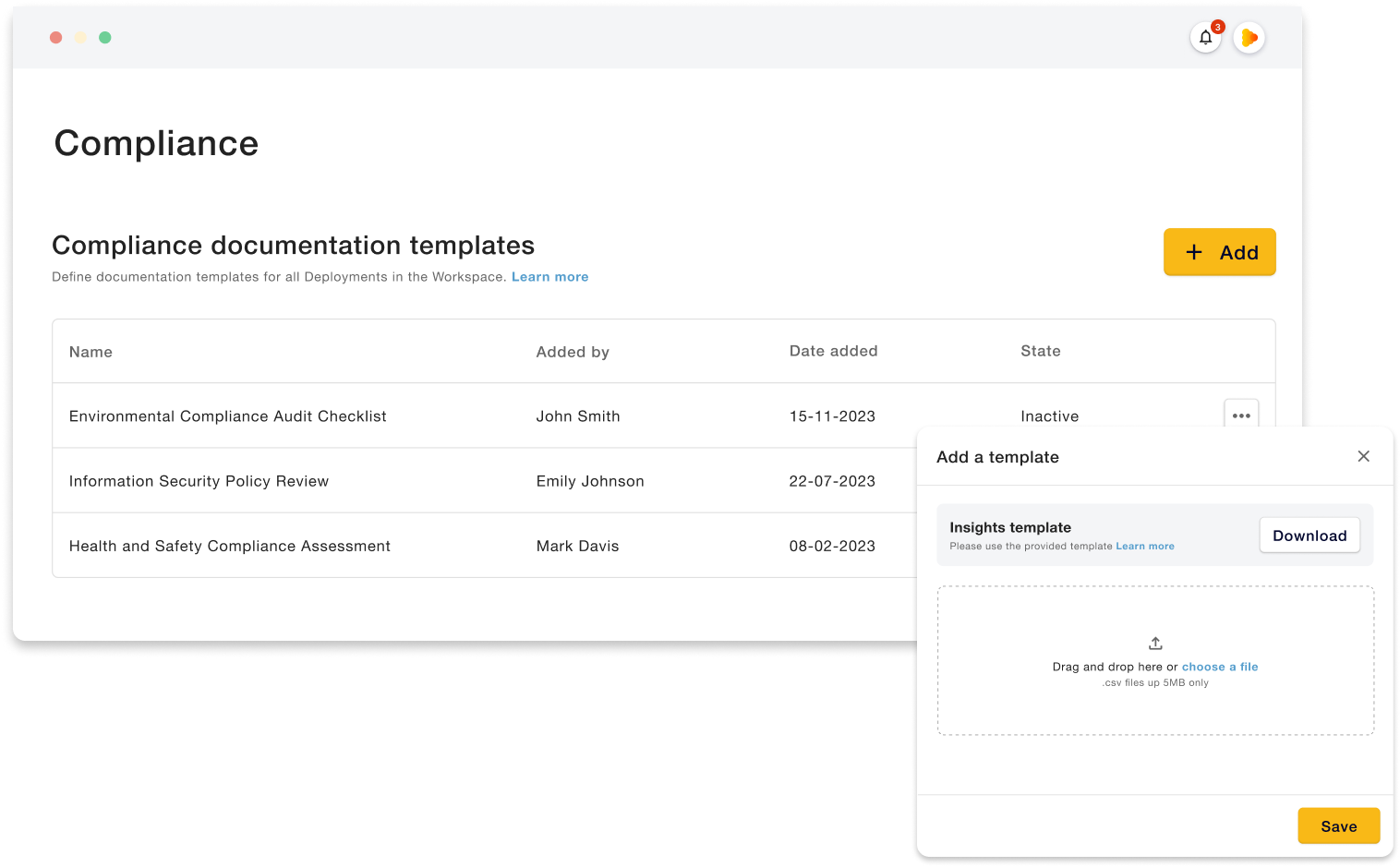

To ease compliance, teams should also be able to track their progress in fulfilling requirements. Standard and custom compliance templates in Deeploy help teams verify that requirements are met for each AI application in the organization.

While standard templates offer general guidance on high-level regulation, custom compliance documentation allows teams to upload checklists tailored to the specific requirements and policies of their organization.

How to get started with Deeploy

Would you like to learn more about taking your first steps with Deeploy? Let one of our experts walk you through the platform and show how it can address your specific concerns, or start with a trial of our SaaS solution.