Safety in AI for Credit Risk

AI is enhancing the accuracy, efficiency, and effectiveness of credit risk assessment and management processes in the financial industry. However, the high-risk nature of these use cases places financials under strict scrutiny from both regulators and the public. Financials that take a responsible approach to AI from the get-go will earn public trust, gain a competitive advantage, and stay ahead of regulatory requirements.

Learn more about Deeploy and how leading financials, such as Coeo, have used the platform to productionalize their AI use cases with transparency, trust, and control.

Financials using Deeploy

Easily manage & control models

Centralize model deployment & monitoring

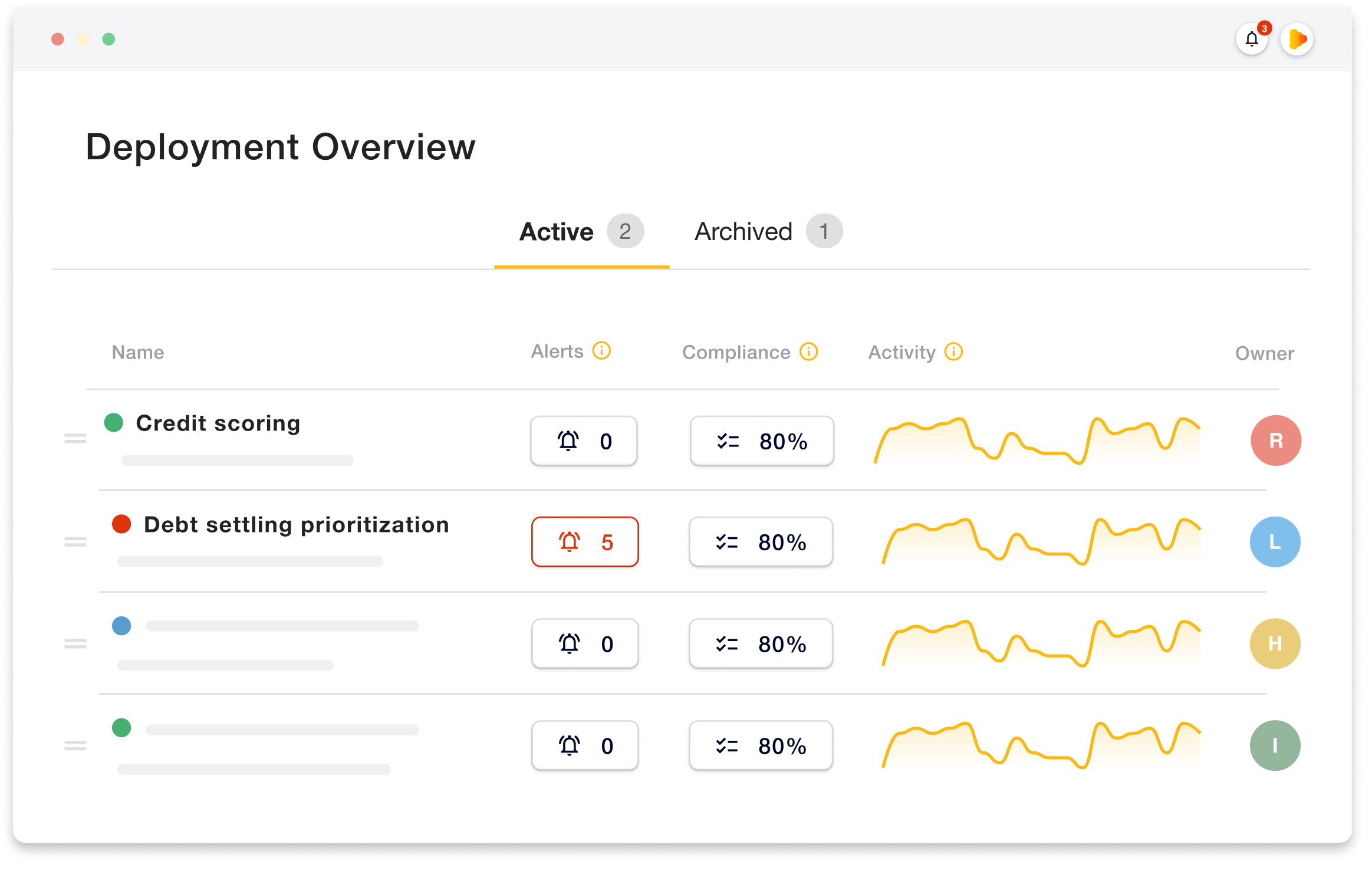

AI in credit risk management, lending, and debt collection covers a wide range of use cases. However, this variety can cause financials to lose oversight of their running models. Tasks like monitoring performance and ensuring compliance then become hard and inefficient for teams, leading to higher costs and increased governance risks.

Deeploy enables teams to deploy, serve, monitor, and manage AI models in a single platform, providing a unified view of all running models across the organization.

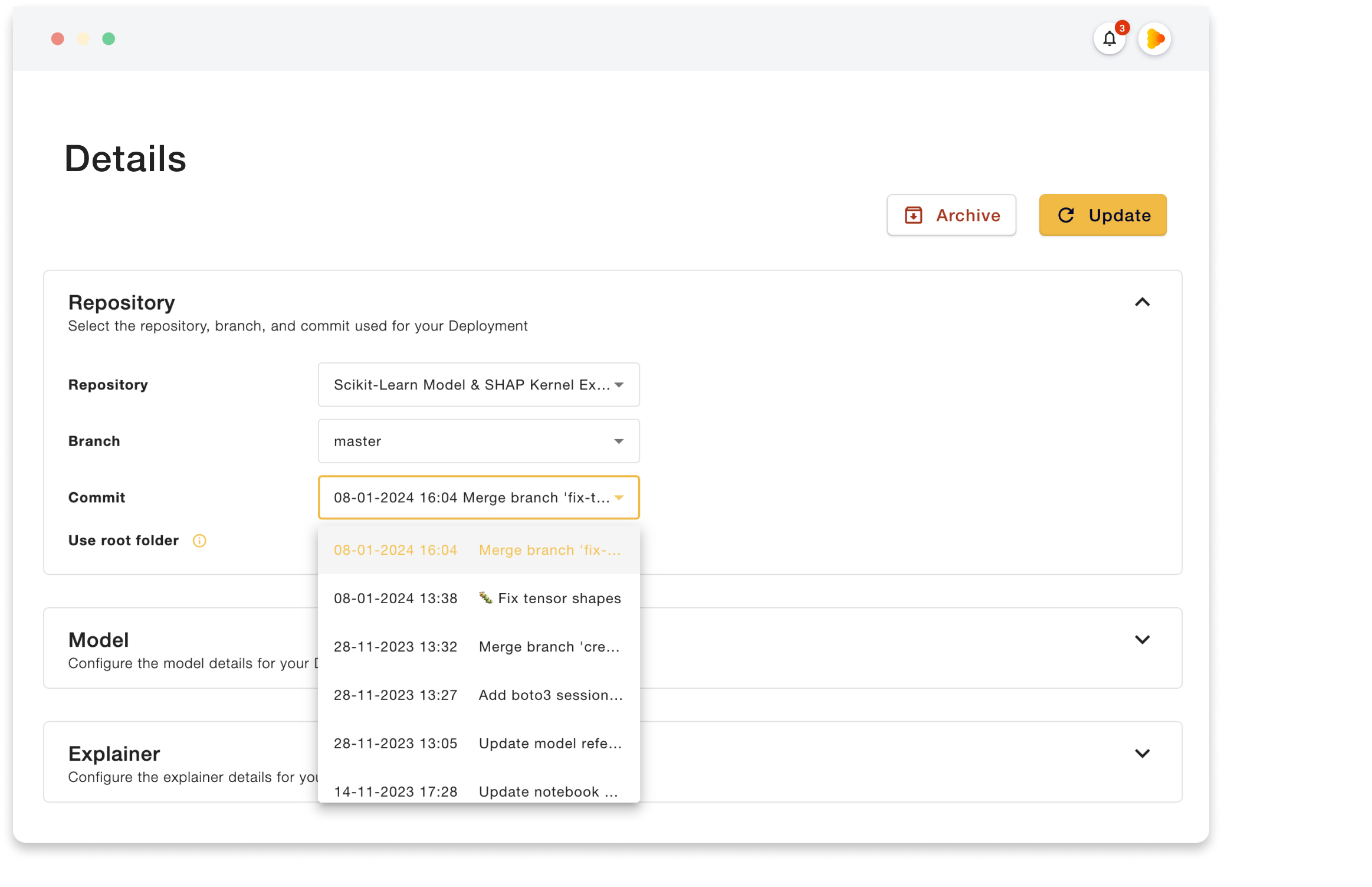

Moreover, Deeploy integrates with all major MLOps platforms and allows for simple model updates directly through the platform, facilitating adoption into the workflows of data teams.

Ensure ownership & accountability

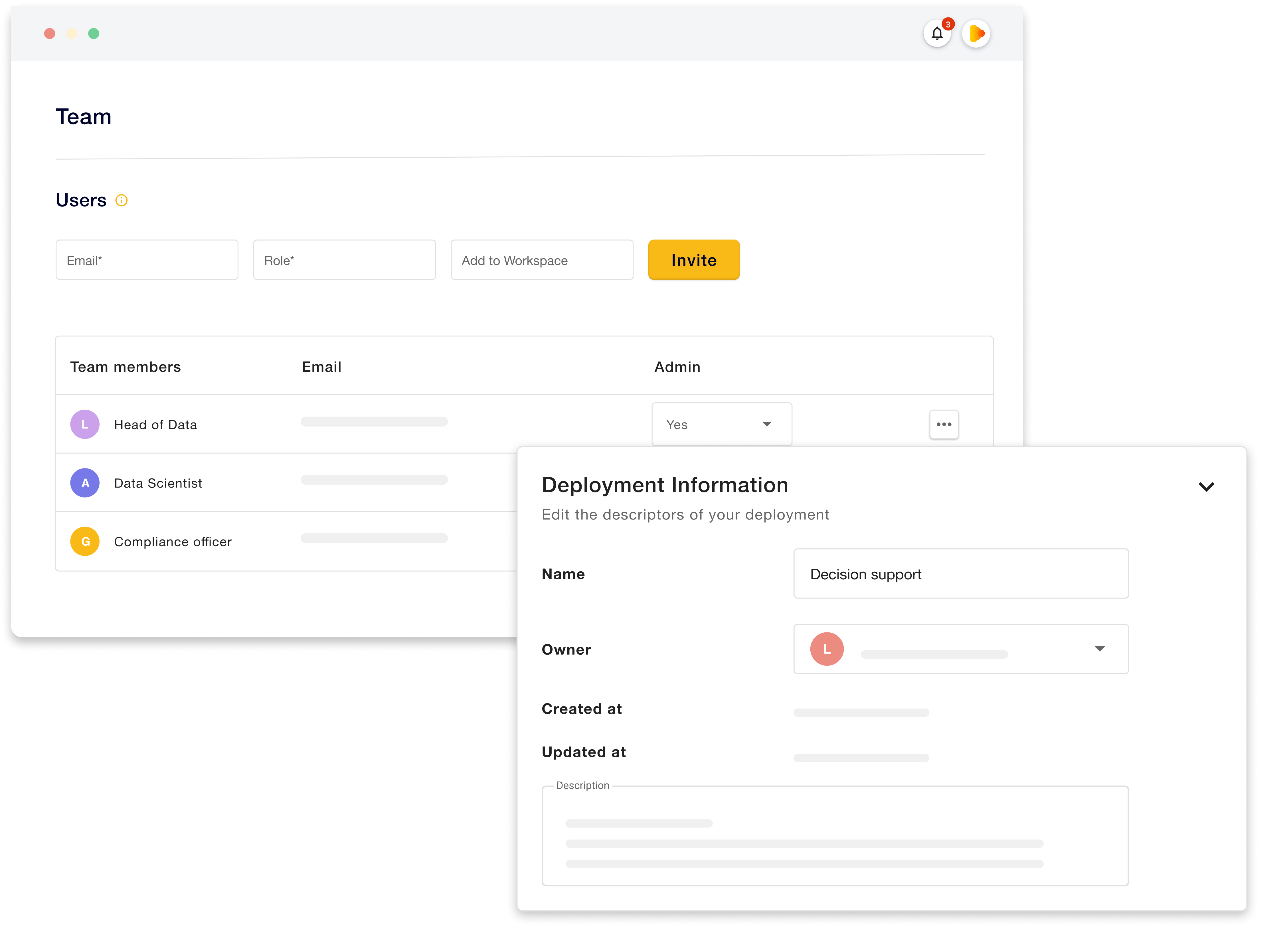

To better control and oversee running models, it is beneficial for organizations to delegate responsibility and ownership over use cases.

In Deeploy, a clear distinction can be made between teams and workspaces to ensure the right oversight over different AI applications within the same organization. Teams can be created to differentiate between departments and types of applications, while workspaces can be used to further narrow down model access.

Moreover, model owners can be assigned to models. Each owner is responsible and accountable for maintaining control over the ongoing operation of their model.

Increase consumer & stakeholder trust

Ensure transparency and explainability

Consumer trust in AI depends heavily on how transparent financials are about their operations. A lack of consumer trust can quickly lead to reputational damage and customer churn.

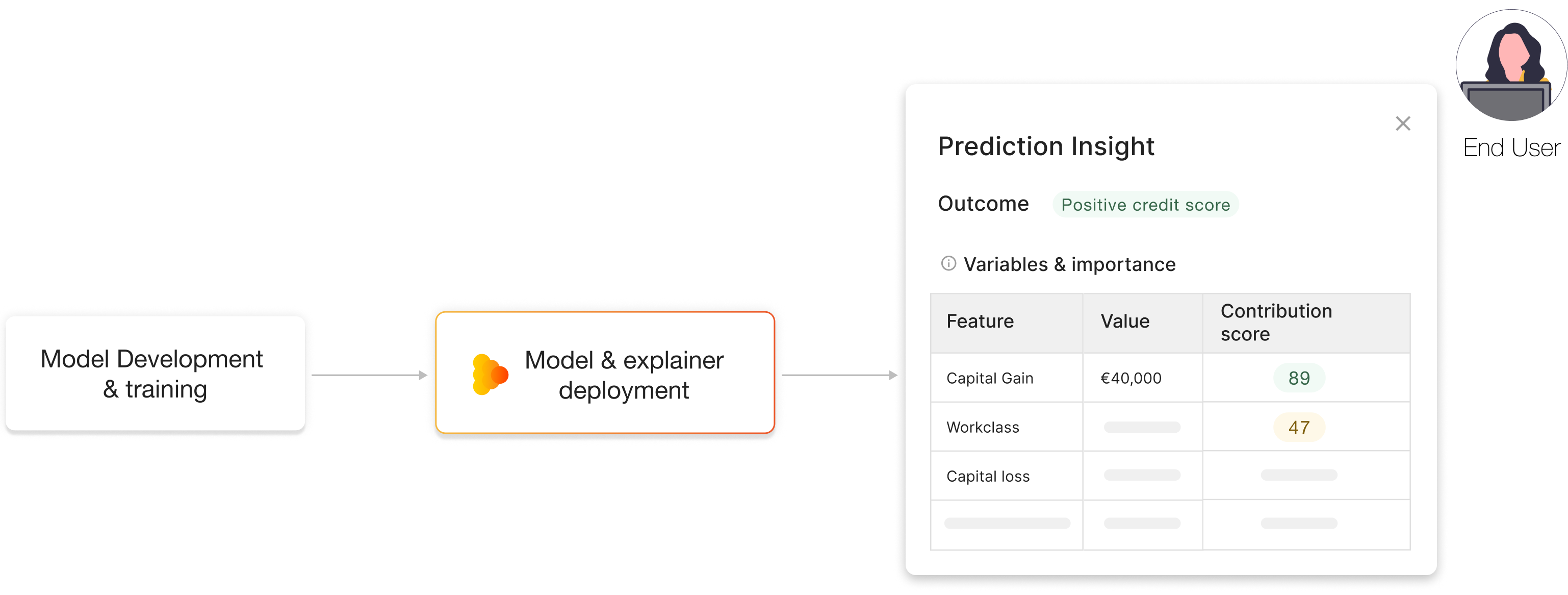

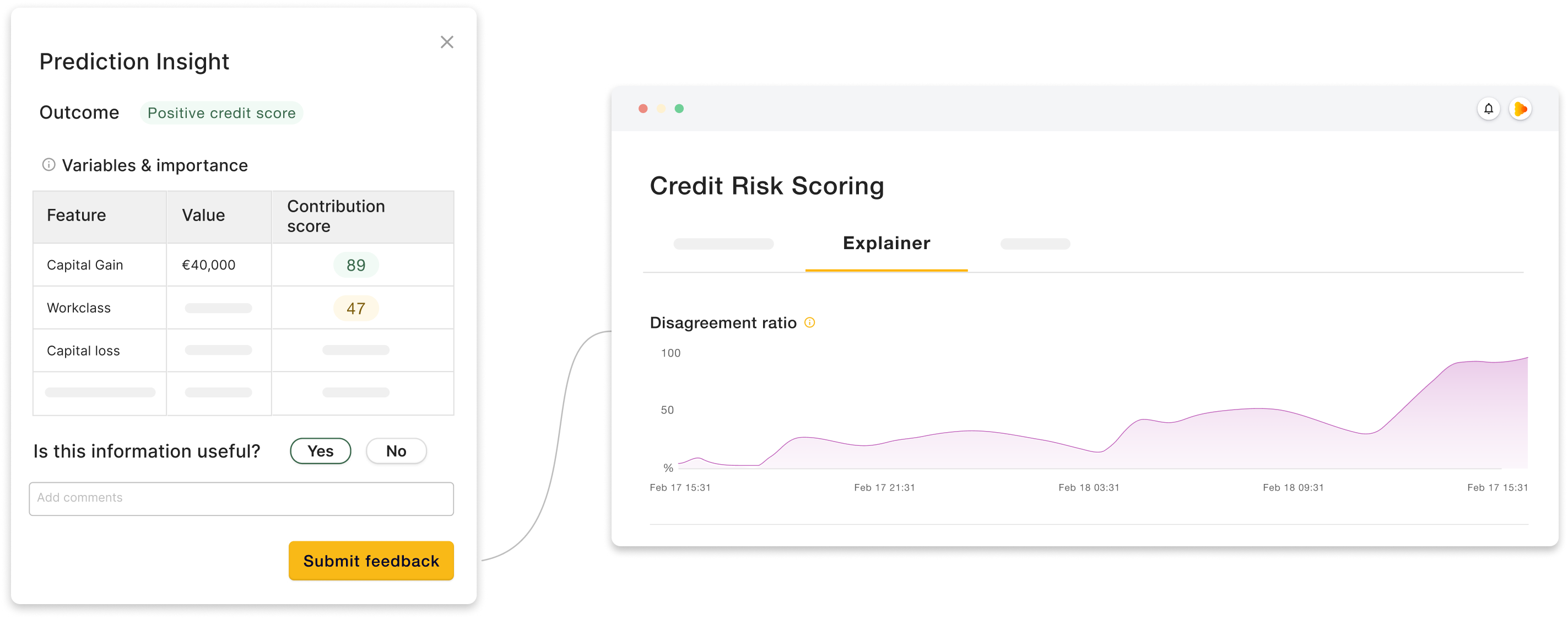

Explainability is a big focus of Deeploy’s platform. Data teams can deploy models with a variety of standard and custom explainer frameworks. This ensures the reasoning behind any AI decision is clear and can be easily provided.

This is valuable not only for consumers but also for employees who are the end users of models. Ensuring employees understand the recommendations or decisions given by AI models increases trust and places a protective layer between the model and the end decision.

Maintain human oversight

Having human experts oversee model decisions acts as a safeguard against potential errors and biases. Deeploy allows end users of models to evaluate and give feedback on model decisions. This feedback is then fed back into Deeploy, where it is translated into the disagreement ratio metric.

The accuracy of a model can be evaluated by comparing predicted outcomes to real-world observations provided by feedback. Thus, being able to monitor the disagreement ratio helps data teams determine model effectiveness and identify areas for improvement.
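As a rough illustration of how such a metric can be derived from reviewer feedback, here is a minimal Python sketch. It assumes the disagreement ratio is the share of evaluated predictions where the reviewer disagreed with the model; the `Feedback` type and `disagreement_ratio` function are hypothetical names for this example and are not Deeploy's API.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    """One reviewer evaluation of a model decision (hypothetical structure)."""
    prediction_id: str
    agrees: bool  # True if the reviewer agreed with the model's decision


def disagreement_ratio(feedback: list[Feedback]) -> float:
    """Share of evaluated predictions where the reviewer disagreed.

    Assumed definition: disagreements / total evaluations.
    Returns 0.0 when no feedback has been given yet.
    """
    if not feedback:
        return 0.0
    disagreements = sum(1 for item in feedback if not item.agrees)
    return disagreements / len(feedback)


# Four evaluated credit decisions, one of which the reviewer overruled.
reviews = [
    Feedback("p-001", True),
    Feedback("p-002", False),
    Feedback("p-003", True),
    Feedback("p-004", True),
]
print(disagreement_ratio(reviews))  # 0.25
```

A rising ratio over time suggests that reviewers increasingly overrule the model, which is a signal to investigate the affected segment of applications.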

Monitor performance & accuracy

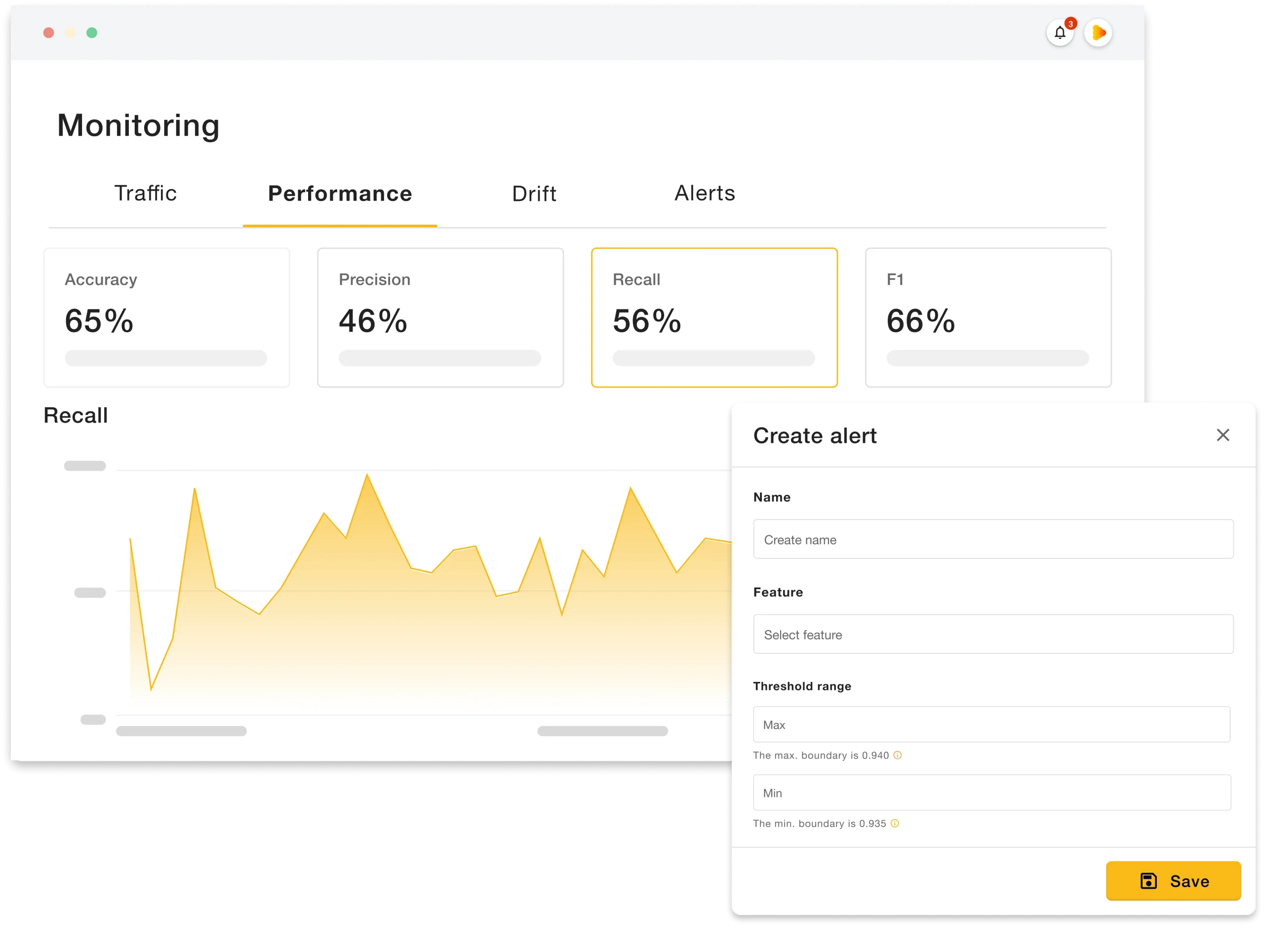

AI applications need to be solid and trustworthy. Apart from monitoring accuracy, models must also be closely monitored over time for traffic, errors, performance, and drift, among other metrics.

Deeploy combines all necessary monitoring metrics into one platform, facilitating the work of data teams. Moreover, alerts can be set for all metrics, allowing teams to act swiftly in case of model degradation.
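To make the idea of a drift alert concrete, the sketch below uses the Population Stability Index (PSI), a drift measure widely used in credit scoring. This is an illustrative choice and not necessarily the metric Deeploy computes; the function names, the example bins, and the 0.2 alert threshold (a common rule of thumb) are assumptions for this example.

```python
import math


def psi(expected: list[float], actual: list[float], eps: float = 1e-6) -> float:
    """Population Stability Index between two binned distributions.

    Both inputs are lists of bin proportions (each summing to roughly 1)
    over the same bins. Proportions are floored at eps to avoid log(0).
    """
    if len(expected) != len(actual):
        raise ValueError("both distributions need the same number of bins")
    total = 0.0
    for e, a in zip(expected, actual):
        e = max(e, eps)
        a = max(a, eps)
        total += (a - e) * math.log(a / e)
    return total


def drift_alert(expected: list[float], actual: list[float],
                threshold: float = 0.2) -> bool:
    """Return True when PSI exceeds the (assumed) alert threshold."""
    return psi(expected, actual) > threshold


# Score-band proportions at training time vs. in recent production traffic.
baseline = [0.25, 0.25, 0.25, 0.25]
live = [0.10, 0.20, 0.30, 0.40]

print(drift_alert(baseline, baseline))  # False: identical distributions
print(drift_alert(baseline, live))      # True: the population has shifted
```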

Comply & report to regulators

The use of AI for credit risk is under increasing pressure from regulators. Notably, the recent EU AI Act classifies AI systems intended to be used to evaluate the creditworthiness of natural persons or establish their credit score as high-risk, making them subject to strict transparency, security, and fairness requirements.

Demonstrate transparency of operations

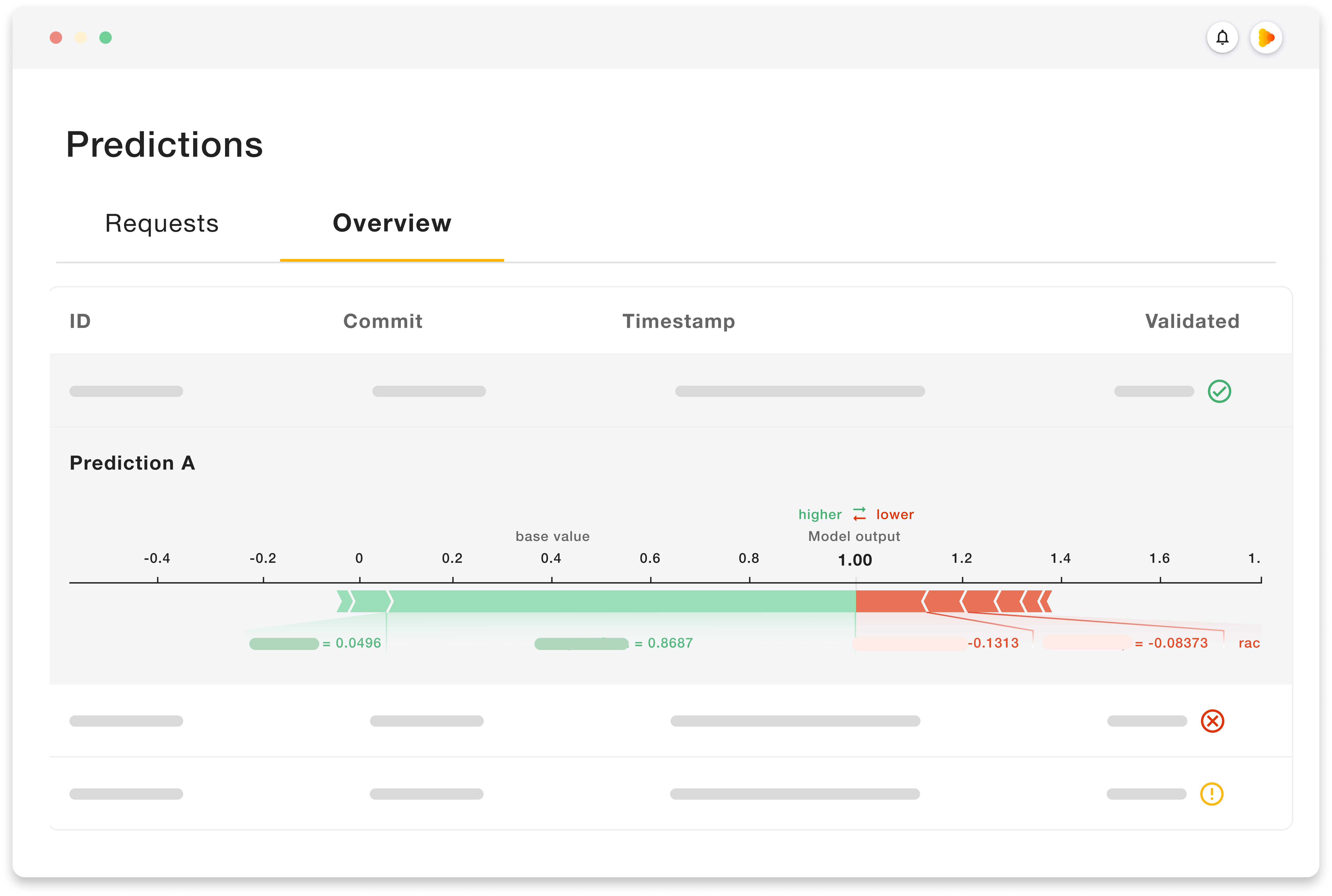

One of the biggest demands of regulators is that AI systems operate transparently. In Deeploy, all model predictions and recommendations, together with their accompanying explanations, can be traced back and reproduced, creating a full audit trail of model decisions. The predictions log can also be filtered by period, which helps pinpoint potential issues or incidents.
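The following Python sketch shows what a traceable prediction record and a period filter might look like in principle. The `PredictionRecord` fields and the `filter_by_period` helper are hypothetical and chosen for illustration; they do not describe Deeploy's actual log schema or API.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass(frozen=True)
class PredictionRecord:
    """One logged model decision with what is needed to reproduce it."""
    request_id: str
    timestamp: datetime
    model_version: str
    inputs: dict
    prediction: str
    explanation: dict  # e.g. per-feature contributions to the decision


def filter_by_period(log: list[PredictionRecord],
                     start: datetime,
                     end: datetime) -> list[PredictionRecord]:
    """Return records with start <= timestamp < end, oldest first."""
    selected = [r for r in log if start <= r.timestamp < end]
    return sorted(selected, key=lambda r: r.timestamp)


log = [
    PredictionRecord("r-1", datetime(2024, 2, 27, 9, 0), "v1",
                     {"income": 42000}, "approve", {"income": 0.6}),
    PredictionRecord("r-2", datetime(2024, 3, 5, 14, 30), "v1",
                     {"income": 18000}, "reject", {"income": -0.7}),
    PredictionRecord("r-3", datetime(2024, 3, 19, 11, 15), "v2",
                     {"income": 31000}, "approve", {"income": 0.2}),
]

# Investigate an incident reported for March 2024.
march = filter_by_period(log, datetime(2024, 3, 1), datetime(2024, 4, 1))
print([r.request_id for r in march])  # ['r-2', 'r-3']
```

Storing the model version and inputs alongside each prediction is what makes a decision reproducible later, since the same inputs can be replayed against the same version.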

Record & store model operations logs

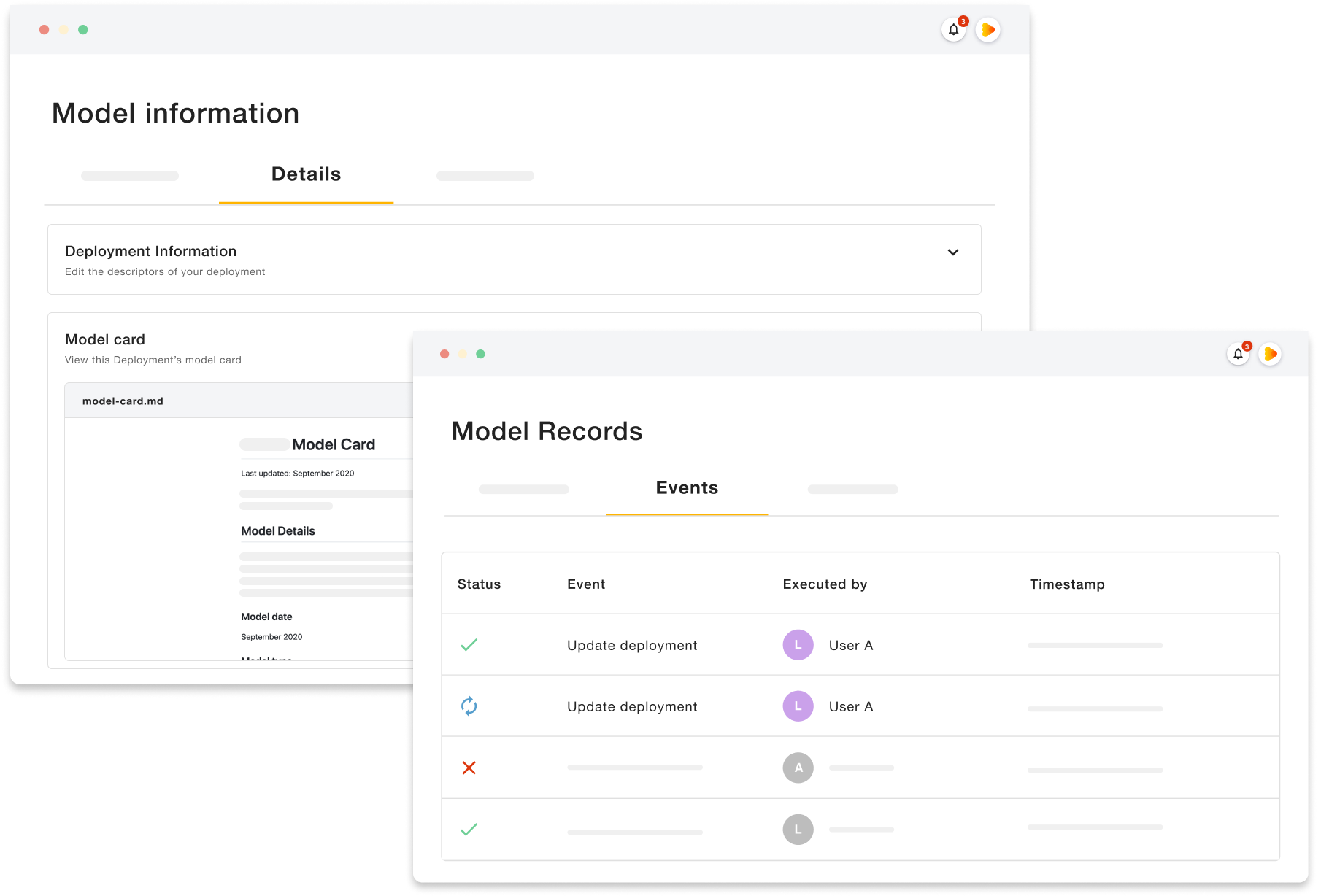

Regulations also underscore the need for information about each AI application to be easily available and for all events around the models to be logged for future consultation.

Deeploy facilitates these requirements by allowing teams to create and store model cards, with information on model capabilities, intended use, performance characteristics, and limitations. Additionally, all events for every model deployment are automatically recorded and stored, creating a full model registry that can be consulted when needed.

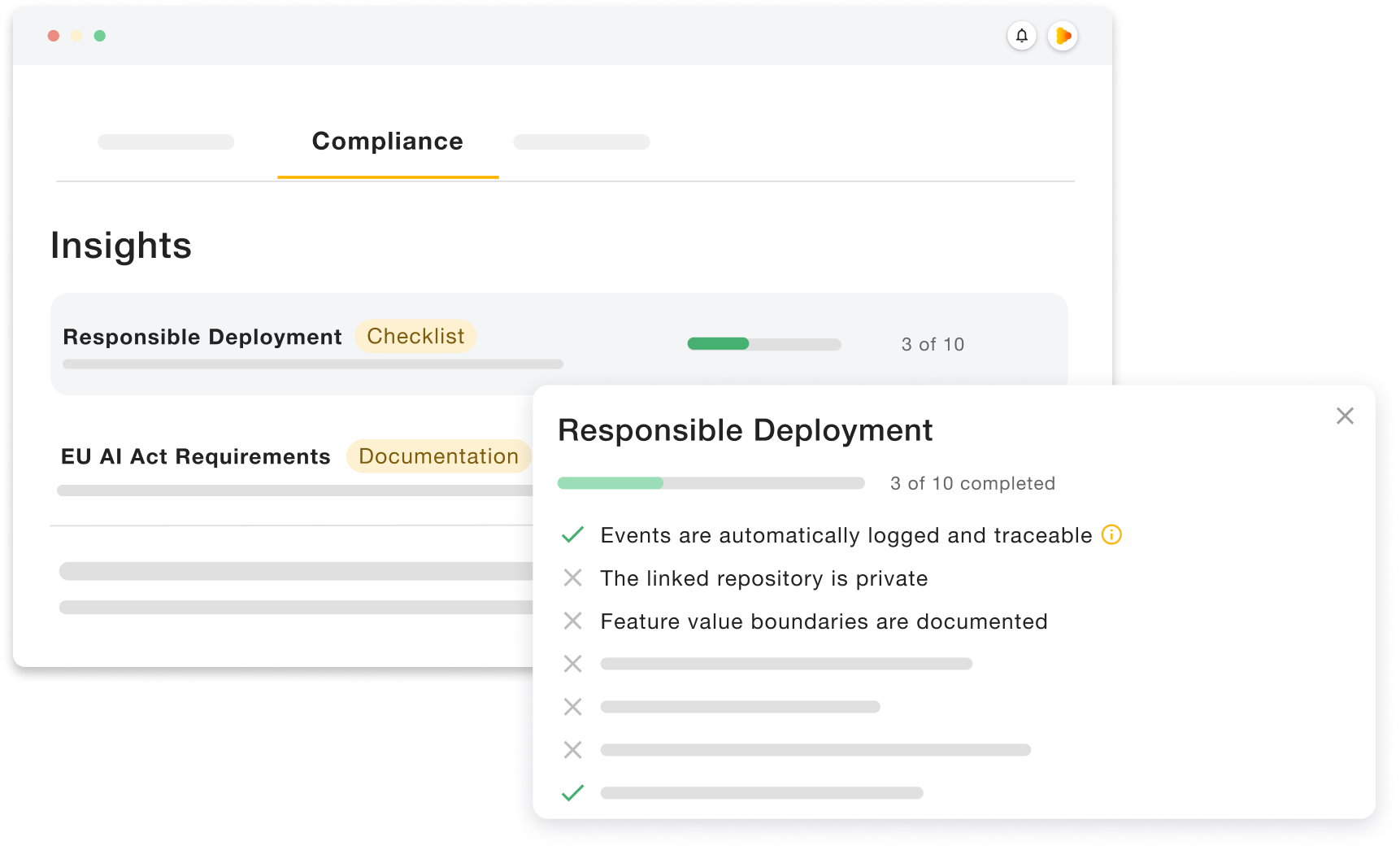

Stay on top of compliance

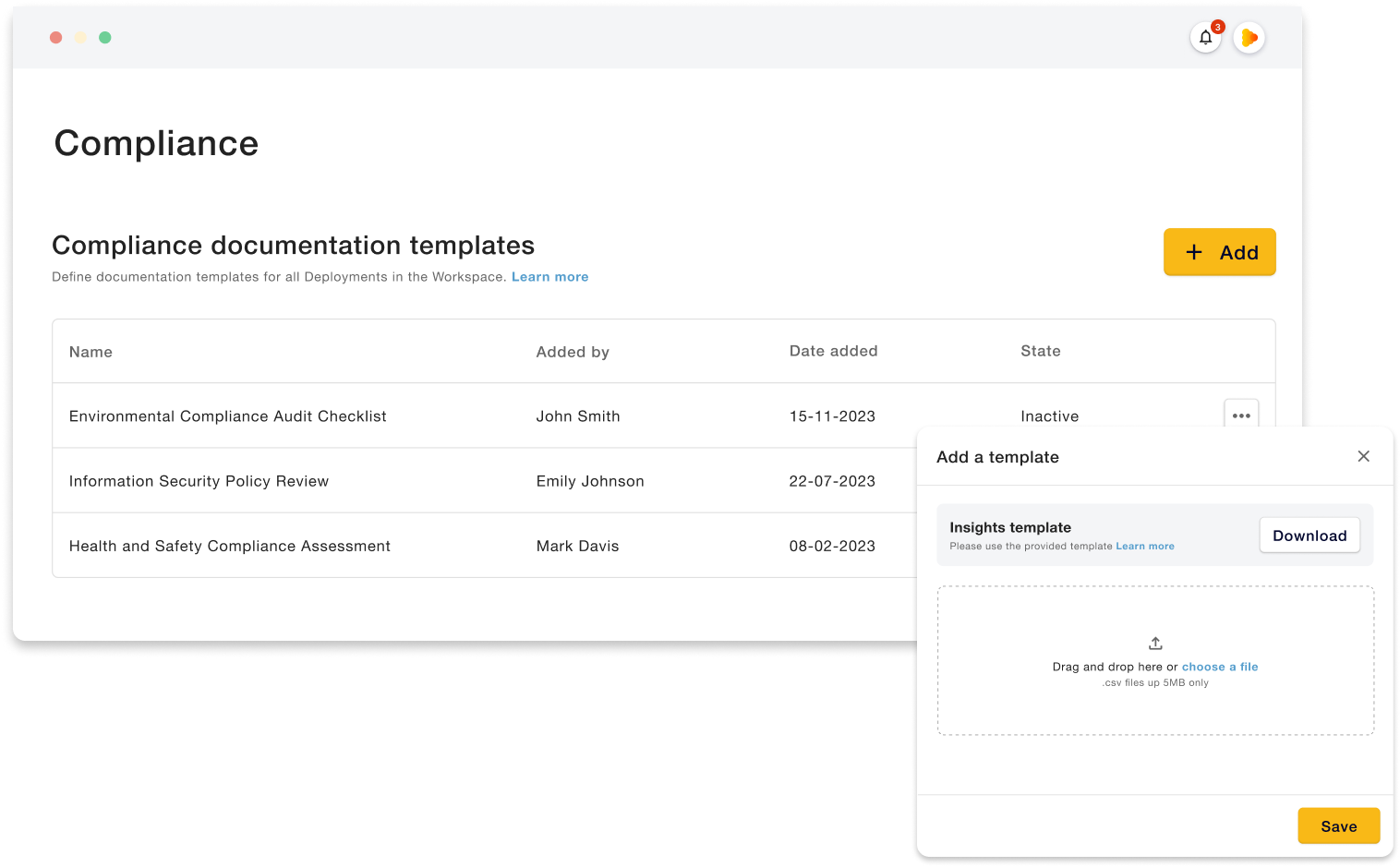

To ease compliance, it is also useful for teams to keep track of progress in fulfilling requirements. Deeploy offers both standard and customizable compliance templates, enabling teams to verify that compliance requirements are fulfilled for every AI application within the organization.

While standard templates offer general guidance on high-level regulation, custom compliance documentation allows teams to upload checklists tailored to the specific requirements and policies of the organization.

How to get started with Deeploy

Would you like to learn more about how you can take your first steps with Deeploy? Let one of our experts walk you through the platform and show how it can address your specific concerns, or start with a trial of our SaaS solution.