Why AI regulation can foster innovation

An in-depth article about how regulation in AI can foster innovation.

About the authors:

Julia Reinhardt

Julia Reinhardt is currently a non-profit Mercator senior fellow focusing on AI governance for small AI companies at AI Campus Berlin. She’s a certified privacy professional and a former German diplomat working at the intersection of global politics and technology.

Providing guardrails to operationalize AI

Operationalizing AI has presented challenges over the years, from technical integration to stakeholder acceptance and legal bottlenecks. However, the past few years have seen a rise in the number of high-impact AI applications entering the market, particularly in industries such as fintech, insurance, healthcare, and government. Companies are using AI models to detect fraud, monitor transactions, assess risks, and streamline administrative tasks, in particular to support repetitive decision-making.

Shifting attitudes towards AI

The use of AI often raises reservations among the general public, especially in Europe and in areas that have traditionally been dominated by human decision-making. Fortunately, people’s attitudes towards AI are slowly but surely shifting as they gain more understanding of its impact, opportunities, and risks. As public authorities realize the importance of AI and acknowledge that it is here to stay, their view of AI is maturing as well. A few years ago, public authorities such as central banks hesitated to approve high-risk AI applications. More recently, however, judges have ruled in favor of using AI, considering that in specific cases it is already more reliable and less biased than humans.

The reason for undeployed AI projects

Still, the majority of projects never see the light of day. What is usually the reason for that, besides technological immaturity or lack of funding? Is overregulation (in Europe) the culprit, as many say? Contrary to what we often read and hear, in our opinion it is not killer regulation that blocks AI innovation (or would block it once more ambitious regulation takes effect), but rather the lack of regulation, and hence of standardization, that leaves so many AI projects undeployed. Here’s why:

Why AI doesn’t go to market

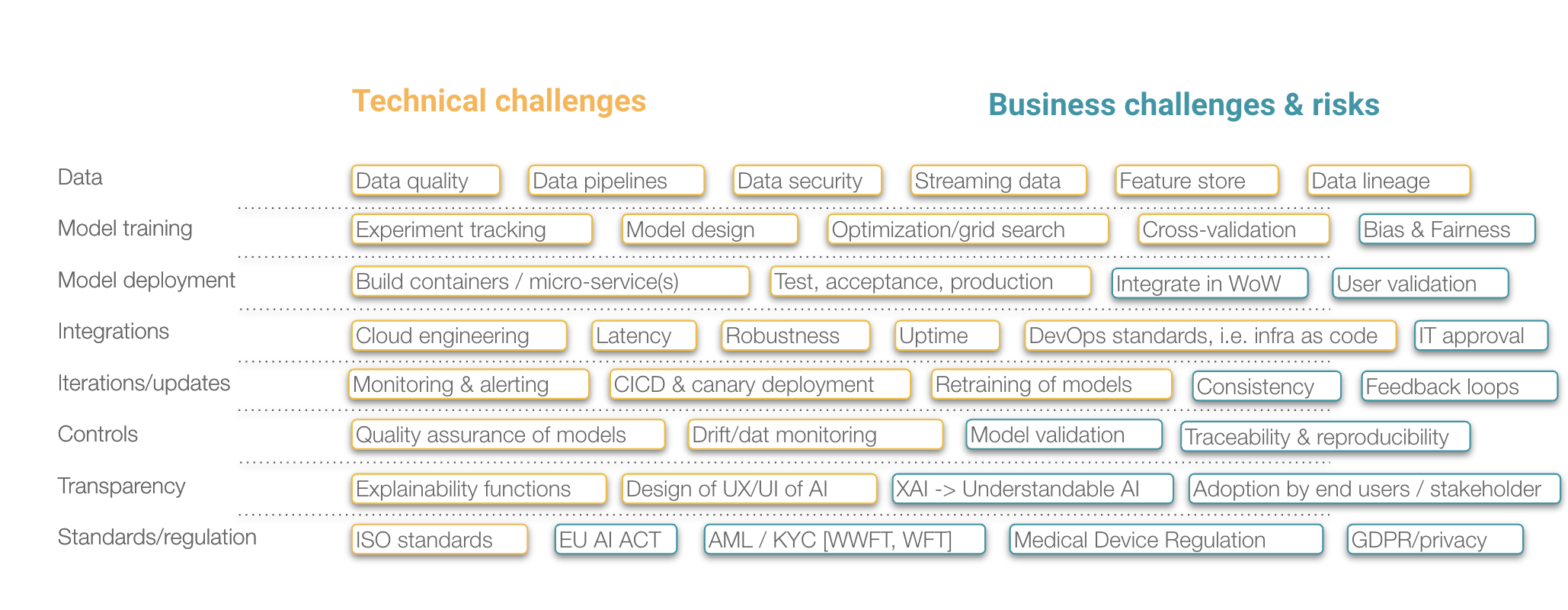

Traditionally, most AI projects have followed a few iterative steps, including data exploration, modeling, and model deployment. Each step comes with its own challenges, e.g. access to and quality of data, the accuracy of predictions, or difficulties integrating with legacy systems.

This has led to too much focus on model development and too little on the (AI) system as a whole. As a result, a model may achieve high accuracy yet be hard to integrate, and it often fits poorly with the way its end users work. What commonly follows are long internal discussions in which teams try to convince stakeholders to use what was built, without always taking their criticism seriously.

Furthermore, risks are often overlooked. Data Protection Officers (DPOs) are not always involved from the beginning, and mature software practices are skipped or postponed to a later stage. This means that important aspects of DevOps, SecOps, and MLOps are not included in the first versions of an AI system, or are only considered too late, when much of the system has already been built. These aspects include version control, documentation, monitoring, traceability of decisions, feedback loops, and explainability.

Embrace the design phase to build successful AI applications

We tend to underestimate the value of the design phase in AI projects. This can mean designing the infrastructure, but also designing the UX/UI of the interface for end users.

By applying design thinking, we push ourselves to think about the end product that we would like to realize. This way, we raise questions about the comprehensibility of model outcomes much earlier. We also ask ourselves about the traceability of decisions, alerts, monitoring, and processes. This approach also forces us to think about integration right away, at the level of the whole system rather than just the model.

It does, however, make development projects considerably longer. The question is: is that actually a problem? In our experience, it isn’t, as the probability of success increases dramatically. Developers who reflect carefully on integration and risks are more likely to have their product accepted by users and officers. To put it differently: don’t start building if you can’t address the risks in the design phase.

The role of regulators

Process standardization can help us here by pushing us to properly design an AI system before it is built. The European AI Act, currently in its final stage of negotiation at EU level, covers a full spectrum of criteria that are all important yet easy to overlook or underestimate, especially at an early product stage. In our experience, these criteria are useful guardrails that help AI developers incorporate the right functions during the design phase of an AI system.

For example, the requirement for effective human oversight forces us to think about ways to give humans the appropriate controls to work with AI for high-risk use cases.
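What such controls look like depends heavily on the use case. As one illustration only, a common pattern is to let the model decide automatically when it is confident and to route everything else to a human reviewer, who can confirm or override the output. The sketch below is our own assumption, not something the AI Act prescribes: it assumes a single confidence score per prediction and a hypothetical threshold of 0.85, and the names `route_decision`, `human_review`, and `REVIEW_THRESHOLD` are illustrative rather than taken from any library.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical threshold: predictions below this confidence go to a human.
REVIEW_THRESHOLD = 0.85


@dataclass
class Decision:
    case_id: str
    model_label: str
    confidence: float
    needs_review: bool
    final_label: Optional[str] = None  # set once the decision is final
    decided_by: Optional[str] = None   # "model" or the reviewer's ID


def route_decision(case_id: str, label: str, confidence: float,
                   threshold: float = REVIEW_THRESHOLD) -> Decision:
    """Auto-accept confident predictions; queue all others for a human."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence >= threshold:
        return Decision(case_id, label, confidence, needs_review=False,
                        final_label=label, decided_by="model")
    return Decision(case_id, label, confidence, needs_review=True)


def human_review(decision: Decision, reviewer_id: str, label: str) -> Decision:
    """Record the reviewer's verdict; the human may confirm or override."""
    decision.final_label = label
    decision.decided_by = reviewer_id
    decision.needs_review = False
    return decision


if __name__ == "__main__":
    d = route_decision("claim-17", "fraud", 0.62)  # low confidence: queued
    d = human_review(d, reviewer_id="analyst-3", label="not_fraud")
    print(d)
```

Whether confidence-based routing counts as effective oversight is a judgment to make per use case; in some high-risk settings every decision may need human sign-off, which here amounts to passing a threshold above 1.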

Other important requirements covered by the AI Act include:

Risk management systems

Data and AI governance

Technical documentation

Record-keeping and reproducibility, while adhering to privacy standards

Transparency and provision of information to understand the system

Accuracy, robustness and cybersecurity
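To make the record-keeping requirement more concrete: one possible approach, sketched here under our own assumptions, is an append-only decision log that stores, for each prediction, the model version, the output, and a keyed hash of the input instead of the raw data. Chaining each entry to the hash of the previous one makes later tampering detectable. The names `fingerprint`, `DecisionLog`, and `PSEUDONYMIZATION_KEY` are hypothetical. Note that a keyed hash is pseudonymization, not anonymization, so such a log may still count as personal data under the GDPR.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical secret, kept outside the log (e.g. in a key vault), so that
# input fingerprints cannot be recomputed by someone who only sees the log.
PSEUDONYMIZATION_KEY = b"replace-with-a-managed-secret"


def fingerprint(features: dict, key: bytes = PSEUDONYMIZATION_KEY) -> str:
    """Keyed hash of the model input: lets you match a record to its input
    later without storing the raw (possibly personal) data in the log."""
    canonical = json.dumps(features, sort_keys=True, separators=(",", ":"))
    return hmac.new(key, canonical.encode("utf-8"), hashlib.sha256).hexdigest()


class DecisionLog:
    """Append-only log. Each entry stores the hash of the previous entry, so
    altering any entry, or removing any entry but the last, breaks the chain."""

    GENESIS = "0" * 64

    def __init__(self):
        self.entries = []

    def record(self, model_version: str, features: dict, output,
               timestamp: str = None) -> dict:
        prev_hash = self.entries[-1]["entry_hash"] if self.entries else self.GENESIS
        entry = {
            "timestamp": timestamp or datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "input_fingerprint": fingerprint(features),
            "output": output,  # must be JSON-serializable
            "prev_hash": prev_hash,
        }
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["entry_hash"] = hashlib.sha256(payload).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every hash; return False if the chain is broken."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "entry_hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["entry_hash"]:
                return False
            prev_hash = entry["entry_hash"]
        return True
```

Storing the model version with every entry is what makes a decision reproducible later: together with versioned training code and data, it tells you which model produced which output.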

To be honest, these future requirements should be part of any development process for high-impact AI systems. Making them mandatory now, for high-risk applications of AI only, encourages developers to do their due diligence, as they would with any pharmaceutical product. We believe that for products in the high-risk category, the processes are, and should be, similar.

The European AI Act actually encourages companies to apply these steps voluntarily to non-high-risk applications of AI as well. By doing so, companies can set aside the question of whether a system is high-risk or not. At this very moment, that is the “to be or not to be” question many AI start-ups in Europe are pondering, as are companies overseas that sell AI products to Europe or want to invest there. Instead, more focus should be put on a standardized process that authorities and customers can easily check.

The Potential of the EU-wide Database for Registered High-Risk AI Systems

The draft European AI Act sketches out another future requirement for high-risk systems, one that has the potential to make an impact for the better: companies must register, in a new EU-wide database, all stand-alone high-risk systems (as defined in Title III of the regulation) that they make available, place on the market, or put into service in any country of the European Union.

Increased Visibility and Potential for Scrutiny

This brings more visibility, and therefore the potential for scrutiny. Anyone interested can search for registered AI systems in a centralized European Commission database. What looks like a mere bureaucratic step could have interesting side effects for the industry: the database becomes a place to showcase your system’s features and to expose it to competitors and consumers alike, potentially to the media if interest arises, and, above all, to researchers.

Benefits of Increased Visibility

Developers may not have the means to identify potentially harmful consequences of a system, but they should welcome the opportunity to receive feedback on such issues. Rather than fearing this exposure and trying to avoid it, companies should embrace increased visibility as a tool for improving their AI system, including vis-à-vis investors and other stakeholders pushing for fast success.

Voluntary Basis and Democratic Oversight

It might prove worthwhile to use this register on a voluntary basis, even for AI systems in the lower-risk categories, where it would certainly facilitate processes as well. In the end, this might be a tiny but potentially mighty tool for AI companies to contribute to trust-building, and for smaller AI builders to demonstrate the seriousness of their processes even though they lack a public platform. Some AI systems, due to their impact on society, should allow for democratic oversight, and this register could be a building block to enable it on a larger scale.

The European AI Act: A Game-Changer

The AI Act follows a typical European Commission strategy: requirements that seem merely bureaucratic but are designed to become game-changers through the back door, at a relatively low cost. The potential gains for both well-intentioned industry and citizens could therefore be high.

It is true that the terminology in the draft European AI Act can at times be confusing, and that the way risk categories are determined sometimes leads to unfair extra burdens. But based on our experience, we stress that the regulation’s intended goal is good for the industry, especially for smaller AI companies, which it also supports.

Is my investment future-proof? Am I running into shady areas with this application? What steps do I have to insert into my product-development process to avoid pitfalls? Small companies developing AI cannot postpone these questions to a later stage. Once funding is spent, or when high-profile legal teams must figure out potential non-compliance, it may be too late. Furthermore, small AI companies do not have access to the same resources as big tech.

Beyond that, we aim to show that it is worth investing more time and effort in due diligence and in reassuring users. By doing so, we can earn consumers’ trust and increase the likelihood of our system standing out positively in the market.

Europe regulates AI: Should we worry?

The EU AI Act: Guardrail or Disaster?

Some industry stakeholders believe the EU AI Act will have “disastrous consequences on European AI”. We do not agree. Those who are not prepared, or refuse to be, might indeed experience disastrous consequences from the EU AI Act, as might those who knowingly deploy AI products in an irresponsible, “disrupt no matter what” way. For others, it may be just the guardrail they always wished for, however imperfect its wording, especially after the inevitable and often unsatisfying search for political compromise.

Regulation and Sustainable Development

We have seen similar developments in other highly regulated industries, such as the automotive, aviation, and insurance markets. While rapid “disrupt no matter what” development is hard under (somewhat) strict regulation, sustainable innovation is actually stimulated, leading to strong markets and more sustainable business models.

Ongoing Discussions and Ambiguities in the EU AI Act

Many discussions are still ongoing. For example, the scope of “AI” is not yet clear. Some argue that every form of automated decision-making should be covered by this regulation, while others believe only complex algorithms should be in scope. Another discussion concerns which cases are considered “high risk”, and whether every aspect of the AI Act is relevant in specific cases. For cybersecurity AI, for instance, companies need to update models so frequently that they cannot produce model-specific documentation on accuracy and robustness; instead, they can only lay out the general framework in which models are trained. For models based on techniques like federated learning, companies should arrange governance at the system level.

In short, we believe that AI regulation will help standardize the requirements for AI projects. This will lead to better-designed AI systems and hence a higher probability of operationalizing the product. On the other hand, there is still room for improvement. Every use case is different: transparency, for example, is crucial in some cases but not in others. Hence, AI regulation should be enforced at the industry or even the case level. Moreover, it is crucial to involve the people who develop AI systems in working towards a feasible and strong framework for responsible AI.