AI cannot thrive without humans

Adopting AI presents tough challenges, especially in high-risk sectors such as banking, insurance, and healthcare. Ethical concerns, combined with increasing regulatory pressure, make it vital for companies to implement AI responsibly.

One way to mitigate these concerns is to ensure human oversight of deployed AI systems. Humans should not only be involved in putting ML models into production but should also stay in the loop throughout the MLOps lifecycle: monitoring the model's performance, checking its predictions and decisions, and providing feedback on them.

What is the difference between human oversight and human in the loop?

Human oversight can broadly be defined as the active involvement and supervision of humans in the decision-making processes and operations of automated systems, particularly those powered by artificial intelligence (AI). How extensive these oversight mechanisms need to be depends on the specific application and the potential risks associated with the AI system.

This umbrella term covers several forms of human oversight. One of the most relevant is Human-in-the-Loop (HITL), defined as the capability for human intervention throughout the entire ML lifecycle. In practice, this means that humans are not only involved in setting up the system and training and testing the model, but can also give the model direct feedback on its predictions.

As we race to make AI as intelligent and autonomous as possible, it may seem counterintuitive to aim for more human involvement. However, doing so offers considerable benefits.

Optimizing for accuracy

As humans keep refining the model's responses to different cases, the algorithm becomes more accurate and consistent. Every time a human gives feedback on an output, that feedback can be used to improve or retrain the model. The more collaborative and effective the feedback, the faster the model improves and the more accurate its predictions become.
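To make the retraining step concrete, here is a minimal sketch, assuming a simple logistic-regression model whose weights are updated one reviewed example at a time. The function names (`predict_proba`, `update_with_feedback`), the feature dictionary, and the learning rate are illustrative assumptions, not part of any specific product: each call takes the label a human reviewer supplied and nudges the model toward it with one gradient step on the log-loss.

```python
import math
from typing import Dict, Tuple

# Hypothetical model: a logistic regression stored as feature weights plus a bias.
Weights = Dict[str, float]


def predict_proba(weights: Weights, bias: float, x: Dict[str, float]) -> float:
    """Probability of the positive class for a single input."""
    z = bias + sum(weights[f] * x[f] for f in weights)
    return 1.0 / (1.0 + math.exp(-z))


def update_with_feedback(
    weights: Weights, bias: float, x: Dict[str, float], human_label: int, lr: float = 0.1
) -> Tuple[Weights, float]:
    """One stochastic-gradient step on log-loss, using the reviewer's label as ground truth."""
    # Gradient of log-loss w.r.t. each weight is (p - y) * x_f; w.r.t. the bias it is (p - y).
    error = predict_proba(weights, bias, x) - human_label
    new_weights = {f: w - lr * error * x[f] for f, w in weights.items()}
    new_bias = bias - lr * error
    return new_weights, new_bias


if __name__ == "__main__":
    weights, bias = {"feature_a": 0.0, "feature_b": 0.0}, 0.0
    case = {"feature_a": 1.0, "feature_b": 2.0}
    before = predict_proba(weights, bias, case)
    # A reviewer marks this case as positive (label 1), so the model is nudged toward it.
    weights, bias = update_with_feedback(weights, bias, case, human_label=1)
    after = predict_proba(weights, bias, case)
    print(f"{before:.3f} -> {after:.3f}")
```

In practice, a team would more likely batch reviewed cases and retrain periodically, but the direction of the update is the same: the model moves toward the human's judgment.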

Enhancing data collection

Machine learning models need large amounts of training data, and extensive processing of that data, to make informed decisions, which can cause delays for businesses adopting AI for the first time. A human in the loop gives AI software a way to shortcut this process. In approaches such as active learning and incremental learning, the overseeing human gives feedback on the accuracy of the model's predictions, which is then used to retrain the model. This makes it possible to improve the model's accuracy despite a lack of data.
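Active learning is usually described with a selection step: the model picks the unlabeled cases it is least certain about and routes only those to a human. The sketch below assumes uncertainty sampling on binary predicted probabilities; `select_for_review`, `active_learning_round`, and the `ask_human` callback are hypothetical names standing in for whatever review interface a team actually uses.

```python
from typing import Callable, Dict, List


def select_for_review(probabilities: Dict[str, float], budget: int) -> List[str]:
    """Uncertainty sampling: return the `budget` items whose positive-class
    probability is closest to 0.5, i.e. where the model is least sure."""
    ranked = sorted(probabilities, key=lambda item: abs(probabilities[item] - 0.5))
    return ranked[:budget]


def active_learning_round(
    probabilities: Dict[str, float],
    budget: int,
    ask_human: Callable[[str], int],
    labeled: Dict[str, int],
) -> List[str]:
    """Send the most uncertain items to a human and store their labels
    in `labeled`, which would later be used to retrain the model."""
    to_review = select_for_review(probabilities, budget)
    for item in to_review:
        labeled[item] = ask_human(item)
    return to_review


if __name__ == "__main__":
    # Hypothetical model outputs for four unlabeled cases.
    predictions = {"case_1": 0.97, "case_2": 0.52, "case_3": 0.10, "case_4": 0.45}
    training_labels: Dict[str, int] = {}
    # Stand-in for a real review UI: here the "human" always answers 1.
    reviewed = active_learning_round(predictions, 2, lambda item: 1, training_labels)
    print(reviewed, training_labels)
```

Only the two borderline cases reach the reviewer; the confident ones are left to the model, which is how this approach saves labeling effort when data is scarce.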

Reducing bias

The allure of AI decision-making lies in its potential to overcome human subjectivity, bias, and prejudice. However, it has become apparent that numerous algorithms replicate and perpetuate the biases already present in our society. When AI systems are built on historical data, there is a risk of perpetuating existing inequalities. With a human-in-the-loop approach, such biases can be detected and corrected at an early stage.
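One simple check a human reviewer can run is to compare how often a model produces a positive outcome for different groups (a demographic-parity comparison). The sketch below assumes binary decisions tagged with a group label; the function names and the 0.1 threshold are illustrative assumptions, and a flagged gap is a prompt for human investigation rather than proof of bias.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def positive_rate_by_group(decisions: Iterable[Tuple[str, int]]) -> Dict[str, float]:
    """Share of positive (1) decisions per group, from (group, decision) pairs."""
    totals: Dict[str, int] = defaultdict(int)
    positives: Dict[str, int] = defaultdict(int)
    for group, decision in decisions:
        totals[group] += 1
        positives[group] += decision
    return {group: positives[group] / totals[group] for group in totals}


def needs_bias_review(decisions: Iterable[Tuple[str, int]], max_gap: float = 0.1) -> bool:
    """Flag the model for human review when positive-outcome rates differ
    between the best- and worst-treated group by more than `max_gap`."""
    rates = positive_rate_by_group(decisions)
    if not rates:
        return False
    return max(rates.values()) - min(rates.values()) > max_gap


if __name__ == "__main__":
    # Hypothetical approval decisions for two groups.
    history = [("A", 1), ("A", 1), ("A", 0), ("B", 1), ("B", 0), ("B", 0)]
    print(positive_rate_by_group(history), needs_bias_review(history))
```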

Complying with regulations

Beyond companies' own motivation to avoid harmful consequences from AI systems, there is now also regulatory pressure to do so. The European Union in particular has been drafting AI regulation for some time, and a full-fledged AI Act, mandating various requirements for development and use, is expected in the near future.

New regulations call for HITL/Human Oversight

The European Union has been working on the EU AI Act, a comprehensive set of AI regulations that will directly affect high-risk AI use cases, such as those common in the fintech and healthcare industries.

Amongst the main requirements, the EU AI Act stresses that high-risk AI systems should be designed and developed in a way that makes it possible for natural persons to oversee them. It also stresses that this human oversight shall aim to prevent or minimize the risks to health, safety, or fundamental rights.

The significance and urgency of human involvement in AI systems are apparent, but implementing this approach raises important questions.

How do we integrate meaningful and beneficial human interaction into an AI system? When can we truly say that a human is actively involved in the decision-making process?

Implementing HITL – The Human Feedback Loop

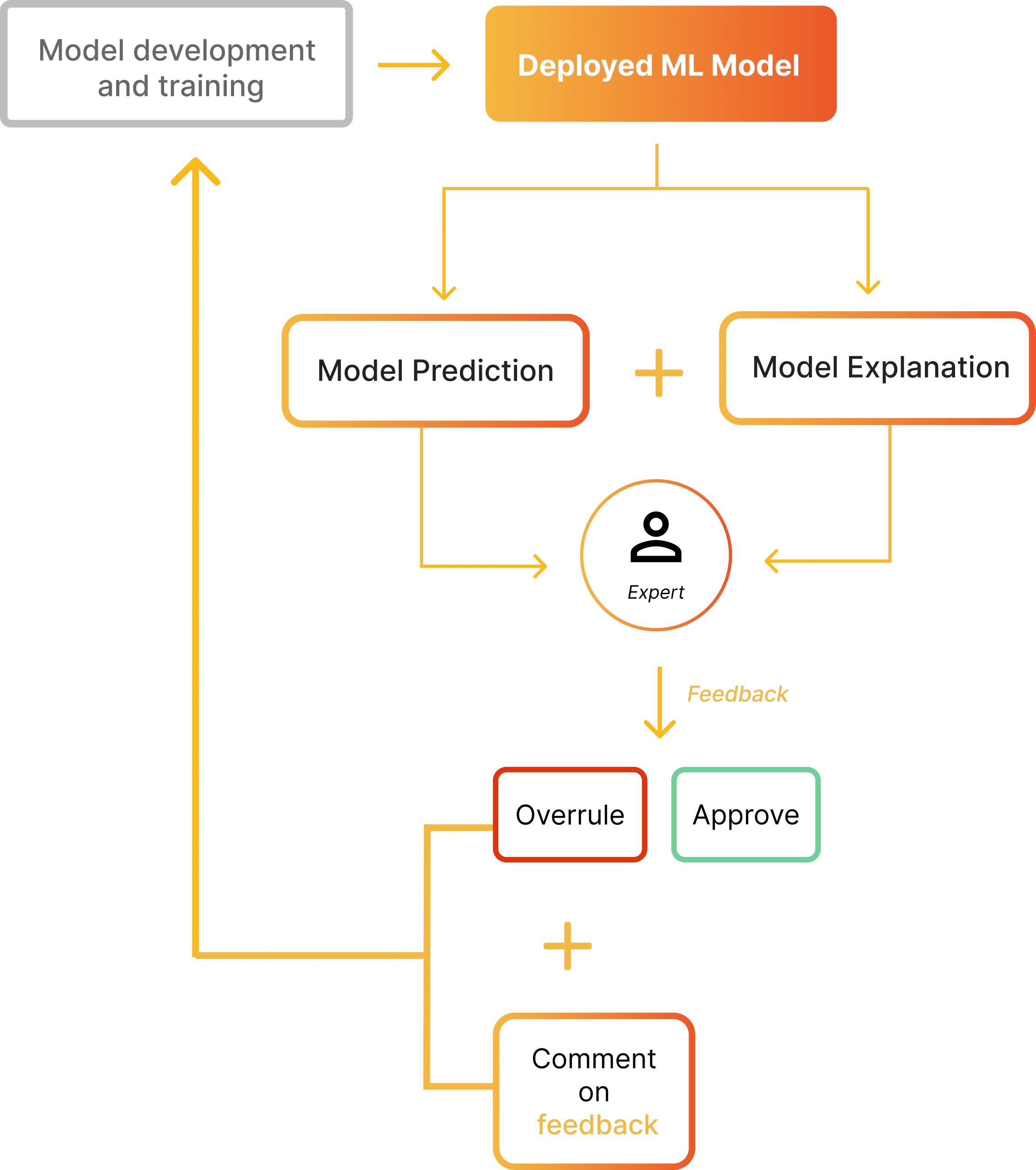

One of the most interesting ways to integrate human-in-the-loop practices is the ability to give feedback to the model even after it has left the testing phase and been fully deployed. This is commonly known as the human feedback loop.

For a human to give feedback on a prediction or decision, they need to understand why the model reached that conclusion. This is where explainability comes in. Explainability covers a range of aspects, but in the context of the human feedback loop it refers to explaining the model's individual predictions and decisions.

Which general trends are represented by the model? Which feature contributed most to the prediction? What input would lead to a different decision?
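For a linear scoring model, the second and third questions have direct answers: each feature's contribution is its weight times its value, and the value at which the decision would flip can be solved for. The sketch below assumes such a linear model with a decision threshold of 0; the feature names, weights, and functions are hypothetical, and more complex models usually require dedicated explanation methods instead.

```python
from typing import Dict

Weights = Dict[str, float]


def score(weights: Weights, bias: float, x: Dict[str, float]) -> float:
    """Linear score: bias + sum of weight * value over all features."""
    return bias + sum(weights[f] * x[f] for f in weights)


def contributions(weights: Weights, x: Dict[str, float]) -> Dict[str, float]:
    """Per-feature contribution to the score of a linear model: weight * value."""
    return {f: weights[f] * x[f] for f in weights}


def top_feature(weights: Weights, x: Dict[str, float]) -> str:
    """Feature with the largest absolute contribution to this prediction."""
    contrib = contributions(weights, x)
    return max(contrib, key=lambda f: abs(contrib[f]))


def flip_value(
    weights: Weights, bias: float, x: Dict[str, float], feature: str, threshold: float = 0.0
) -> float:
    """Value of `feature` (others held fixed) at which the score reaches the
    decision threshold; moving past it changes the decision."""
    if weights[feature] == 0:
        raise ValueError(f"{feature} has no influence on the score")
    return x[feature] + (threshold - score(weights, bias, x)) / weights[feature]


if __name__ == "__main__":
    # Hypothetical loan-approval model: approve when the score is >= 0.
    weights = {"income": 0.4, "debt": -0.9}
    applicant = {"income": 5.0, "debt": 1.0}
    bias = -1.0
    print("score:", round(score(weights, bias, applicant), 3))
    print("contributions:", contributions(weights, applicant))
    print("top feature:", top_feature(weights, applicant))
    print("debt at which the decision flips:", round(flip_value(weights, bias, applicant, "debt"), 3))
```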

Armed with this explanation, the human in the loop can then approve or overrule the model's decision and comment on the reasoning behind the overruling. This feedback is then used to improve the model's performance, leading to better predictions.
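The approve-or-overrule step can be captured as structured feedback records. The sketch below assumes binary decisions; the `Review` and `FeedbackLog` classes are hypothetical, not part of any particular platform. Approved predictions keep the model's label, overruled ones take the human's label, and the overrule rate can be tracked as a signal that the model may need retraining.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Review:
    case_id: str
    model_decision: int
    approved: bool
    human_decision: Optional[int] = None  # required when the reviewer overrules
    comment: str = ""                     # the reviewer's reasoning, kept for auditing


@dataclass
class FeedbackLog:
    reviews: List[Review] = field(default_factory=list)

    def record(self, review: Review) -> None:
        """Store a review, rejecting overrules that lack the human's decision."""
        if not review.approved and review.human_decision is None:
            raise ValueError("An overruled decision must include the human's decision")
        self.reviews.append(review)

    def training_labels(self) -> Dict[str, int]:
        """Final label per case: the model's decision if approved, else the human's."""
        return {
            r.case_id: r.model_decision if r.approved else r.human_decision
            for r in self.reviews
        }

    def overrule_rate(self) -> float:
        """Share of reviewed cases where the human disagreed with the model."""
        if not self.reviews:
            return 0.0
        return sum(not r.approved for r in self.reviews) / len(self.reviews)


if __name__ == "__main__":
    log = FeedbackLog()
    log.record(Review("case_1", model_decision=1, approved=True))
    log.record(Review("case_2", model_decision=1, approved=False, human_decision=0,
                      comment="Recent context not captured in the input data"))
    print(log.training_labels(), log.overrule_rate())
```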

Curious about how this looks in a real use case? Read on.

Deeploy + NiceDay: A collaboration between therapist, patient, and AI

The importance of AI is increasing in almost every industry, including healthcare, where the potential for more personalized and need-driven care is considerable. However, to implement AI in such a sensitive field, it is imperative to keep humans involved.

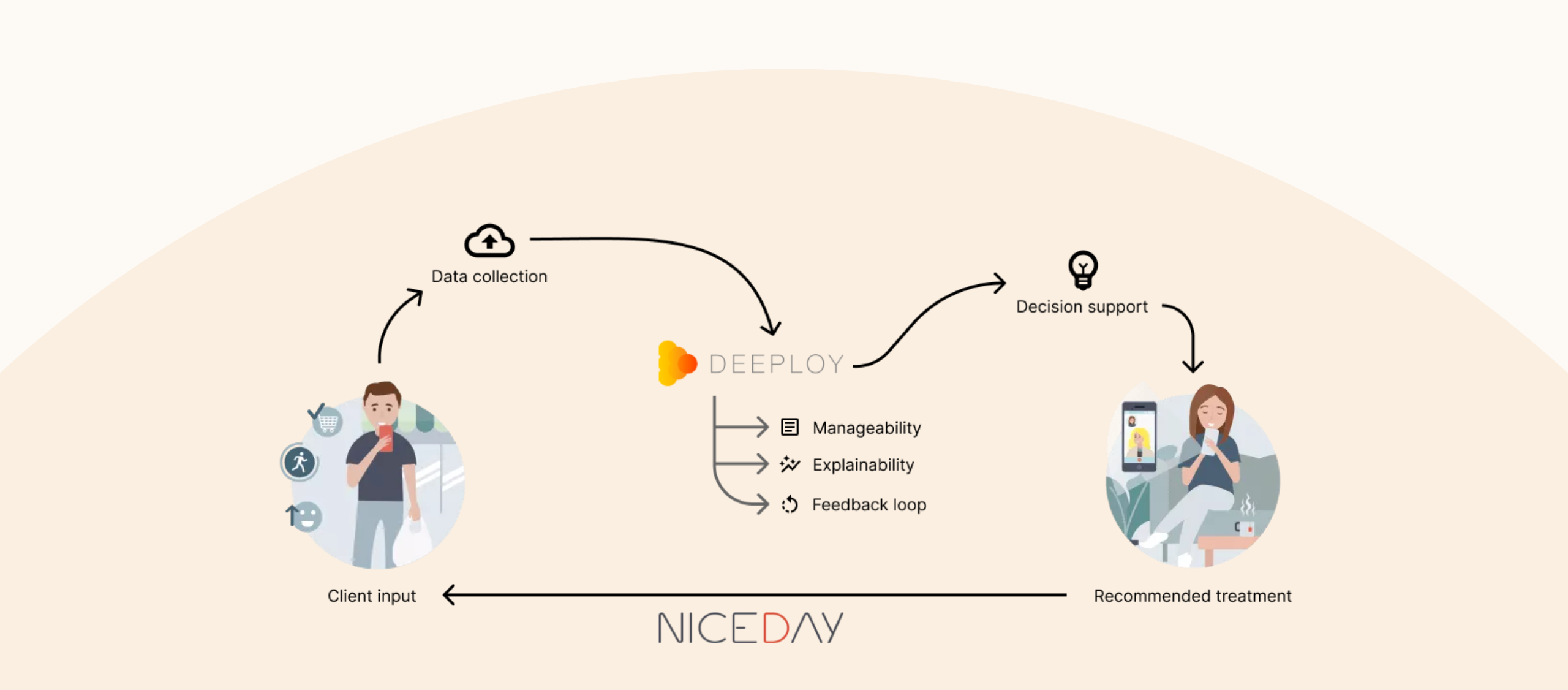

NiceDay is an online mental healthcare platform that encourages collaboration between therapists and clients, enabling the latter to share well-being updates between sessions. Informed by these registrations, therapists may then decide to check in with a client, providing need-driven, personalized mental health care. However, deciding which clients to reach out to, and when, is a challenge for therapists, given their limited time and the large amount of information to process.

To overcome this, NiceDay has an AI system in place that helps therapists decide which clients to reach out to and when. However, while this can make care more need-driven and personalized, it won't be useful unless the predictions are accurate. So how can accurate predictions be ensured? By keeping a human in the loop (in this case, the therapist) who takes part in the feedback loop.

When clients register information, the data is fed into the machine learning model, which then presents the therapist with recommendations for each client. Each recommendation is accompanied by an explanation so that the therapist can fully understand the model output and, based on that, either accept it or overrule it and give feedback on the overruling.

This feedback is then used to improve the model, helping it make more accurate predictions. The feedback loop continues, allowing the model to become more accurate over time and, in turn, increasingly streamlining the therapist's work.

Read more about NiceDay’s journey in responsibly implementing AI into their healthcare services.

The way forward

Keeping a human in the loop allows us to harness the respective strengths of both humans and machines. Humans contribute contextual understanding, intuition, creativity, and ethical considerations to decision-making, while machines offer speed, scalability, and data processing capabilities. By combining these two elements, it becomes possible to achieve more precise and reliable results compared to relying solely on either approach.

Keeping a human in the loop also acts as a safeguard against errors, biases, or unforeseen circumstances that automated systems may encounter. Humans in the loop provide a vital layer of accountability, responsibility, and judgment that machines may lack.

In general, while AI can aid decision-making, it is crucial to keep a human intermediary between the model and the final decision. This arrangement involves an iterative feedback process in which humans continuously provide input and guidance to the AI system. The resulting feedback loop allows the decision-making process to be refined continuously, making AI more accurate and fair. In this way, AI becomes a natural extension of human intelligence, working alongside people to help them and their organizations make wiser decisions.