What we mean by Explainability of AI

About the author

Maarten Stolk | CEO

Maarten Stolk is the co-founder of Deeploy, a platform focused on enabling responsible deployment and explainability of AI, particularly in high-risk use cases. Previously a data scientist, Maarten experienced firsthand the struggles of getting AI to production and saw how vital explainability and transparency are to the advancement of AI.

Explainability is a fascinating topic. It brings together a wide variety of experts: mathematicians, engineers, psychologists, philosophers and regulators, which makes it one of the most interesting research fields. I have been involved in quite a few AI projects where explainability – or XAI – turned out to be crucial. So, I decided to gather and share my experiences, and the experiences of my colleagues at Deeploy.

In this article we cover:

- Why even bother about explainability?

- What are the different aspects of explainability?

- Justification explainability

- Strategic explainability

- Understandable, not explainable

- What’s next?

- How we make AI explainable

Why even bother about explainability?

AI is one of the biggest innovations of our time. It can change the way we live, work, care, teach and interact with each other. We can automate most of our work – being able to spend more time on family – or find treatments for diseases which used to be lethal. Even the biggest skeptics have to admit: AI is changing the way we live and has huge potential.

But just like humans, AI has its shortcomings. People make noisy, inconsistent decisions based on assumptions that are sometimes hard to check, and so does AI. Especially when AI models become more complex, it becomes harder to align AI with our goals, norms and values. Even though accuracy can be (or can seem to be) higher than humans would achieve, decisions can turn out worse than those humans would make if the underlying values are not aligned.

This wouldn’t all be so bad if AI models could be held accountable. But that’s exactly where the biggest difference lies. As a society, we have developed a system of rules, norms and values to control human decisions. It helps us steer people and technology in the right direction. We often demand that both people and systems support important decisions with some kind of explanation, and that those decisions and explanations are stored so that others can validate them.

We strive to do the same with AI systems. That system of rules, norms and values for AI is on the horizon. The European Commission has recently published a proposal for what is going to be the first attempt ever to place AI systems and their use within a coherent legal framework. The proposal explicitly refers to the risk of AI systems not being explainable and possibly being biased as a result. The proposal is still being drafted: most terms remain rather ambiguous, and there is no consensus yet on the normative criteria for solving the challenges of AI.

Criticism of the “non-responsible” use of AI systems and of the possible harms to people and society has also reached a broader audience thanks to popular books such as “The Black Box Society” by Frank Pasquale and “The Alignment Problem” by Brian Christian.

To me (and the people at Deeploy), explainability of AI means that everyone involved in algorithmic decision-making should be able to understand well enough how decisions have been made. This goes beyond applying some post-hoc XAI methods or a certain fairness framework to an AI system. It also includes traceability of decisions, ownership of models, accountability and transparency in the deployment process. In this article, we try to explain our definition of explainability, based on our experiences and research.

The different aspects of explainability

Whenever you talk about or search for explainability, you quickly arrive at the term “XAI”, or eXplainable AI: an AI subfield that focuses on methods to make AI predictions or models explainable. This field is sometimes described as justification explainability. These kinds of explainability methods support predictions made about or by algorithms and hence are closely tied to models.

But other public values like accountability, transparency, equality, privacy, security, sustainability, and interoperability should be given attention when designing AI for public use. This field is sometimes covered by the term strategic explainability. It is a broader field of which justification explainability forms a subset, and a partial solution to the challenge of making AI explainable.

The field of explainability, therefore, covers a wide variety of challenges. Hans de Bruijn, Marijn Janssen and Martijn Warnier give an overview of these challenges in “The perils and pitfalls of explainable AI: Strategies for explaining algorithmic decision-making”.

Justification Explainability

Explainability is an intuitively interesting concept but is often hard to realize in practice. My colleague Sophia Zitman wrote an article about her learnings around explainability in practice. In her article, Sophia describes how she applied explainability in real life, including the challenges she faced when applying explainability methods to an AI model in production.

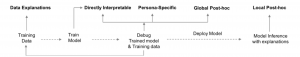

Explanations are required in different stages of developing and applying AI in practice, as described below. During training and debugging, it’s important for internal stakeholders and the developers of the models to understand the models’ dynamics. During and after deployment, the focus shifts towards explaining predictions and decisions (“local post-hoc”) to end-users (“subjects”).

Justification XAI methods and when to use which

The field of XAI is growing rapidly, with over 100 methods currently available (a large overview of methods can be found here). These methods are clustered by the goal they serve, such as trustworthiness, fairness and informativeness.

There are countless ways of classifying explanation methods (a discussion that started long before AI). In general, we find four types of explanations (in AI):

- Simplification: which general trends are represented by the model?

- By feature importance: which feature contributed most to the prediction?

- Local explanations: what happens if you zoom in and then generalize?

- Graphical visualizations: how can we visually represent the dynamics of a model or prediction?

Although these general methods of explainability sound appealing, each group of methods has its shortcomings. Simplifications are often not correct and are sometimes far off or even counter-factual, features can be interrelated, local explanations can fail to provide the complete picture, and graphical visualizations require assumptions about data that might not necessarily be true (Hans de Bruijn, Martijn Warnier and Marijn Janssen).

Furthermore, explanations (and interpretability) are very personal (I highly recommend this YouTube series where this is illustrated perfectly), and hence multiple methods need to be in place in order to make an AI model explainable.
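To make the "by feature importance" type from the list above a bit more concrete, here is a minimal sketch of permutation importance, one common way to estimate which features a model relies on. The toy `model`, the feature names and the synthetic data are assumptions made purely for illustration; they do not come from any real project described in this article.

```python
import random


def model(row):
    # Hypothetical toy model: a fixed linear score over three features.
    income, age, noise = row
    return 0.8 * income + 0.2 * age + 0.0 * noise


def mean_squared_error(rows, targets, predict):
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)


def permutation_importance(rows, targets, predict, n_features, seed=0):
    """Return, per feature, the increase in error after shuffling that feature's column."""
    rng = random.Random(seed)
    baseline = mean_squared_error(rows, targets, predict)
    importances = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)
        # Rebuild every row with the shuffled value in position j.
        shuffled = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, column)]
        importances.append(mean_squared_error(shuffled, targets, predict) - baseline)
    return importances


# Synthetic data: three independent, uniformly distributed features.
data_rng = random.Random(42)
rows = [(data_rng.random(), data_rng.random(), data_rng.random()) for _ in range(200)]
targets = [model(r) for r in rows]

importances = permutation_importance(rows, targets, model, n_features=3)
# Feature indices ordered from most to least important.
ranking = sorted(range(3), key=lambda j: importances[j], reverse=True)
```

In this sketch the features are independent, so the ranking is easy to trust. When features are interrelated, as mentioned above, shuffling one column creates unrealistic rows and the resulting scores can be misleading, which is one reason to combine several methods.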

Strategic Explainability

Besides justification explainability, it’s important to make AI systems explainable to the public. Strategic explainability often has less to do with the algorithms and more with the systems, organizations and people involved. It covers the manageability, accountability, transparency and sustainability of AI. Below is an illustration we used in one of our cases to describe the explainability space in their context. It covers the explainability of models, decisions and predictions, but also the manageability of models, being able to explain to your peers what happens, and the accountability of AI systems.

Strategic explainability of AI in practice

Understandable, not explainable?

Lastly, it’s important to keep in mind that explainability is not about the explainer or developer, it’s about the person receiving the explanation. They determine what makes an explanation good. It’s important to design the explanation together with the subject of the explanation, to make sure they get the information they need, and hence they understand what they need to understand.

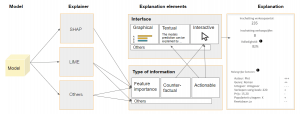

Design of explainability methods (XAI)

Above you find a diagram that illustrates the role of XAI methods like SHAP and LIME in transforming a prediction into an explanation. While information and data move from left to right, the design process should start at the end of the chain, with the user, not with the model or XAI method.
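As a rough illustration of that chain from prediction to explanation, the sketch below computes exact Shapley values for a tiny model and then phrases them for the person receiving the explanation. This is not the SHAP library, which approximates Shapley values for realistic models; it is a brute-force calculation that only works for a handful of features. The toy `model`, the feature names, and the choice of a single baseline instance are all assumptions made for illustration.

```python
from itertools import combinations
from math import factorial

FEATURES = ["income", "debt", "years_employed"]  # hypothetical feature names


def model(x):
    # Hypothetical toy score with one interaction term.
    return 2.0 * x["income"] - 3.0 * x["debt"] + x["income"] * x["years_employed"]


def value(subset, instance, baseline):
    # Features in `subset` take the instance's value; all others take the baseline value.
    mixed = {f: (instance[f] if f in subset else baseline[f]) for f in FEATURES}
    return model(mixed)


def shapley_values(instance, baseline):
    """Exact Shapley values by enumerating every subset of the other features."""
    n = len(FEATURES)
    phi = {}
    for f in FEATURES:
        others = [g for g in FEATURES if g != f]
        total = 0.0
        for k in range(n):
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                with_f = value(set(subset) | {f}, instance, baseline)
                without_f = value(set(subset), instance, baseline)
                total += weight * (with_f - without_f)
        phi[f] = total
    return phi


def explain_for_user(phi):
    # Final step of the chain: turn numbers into sentences, largest effect first.
    ranked = sorted(phi.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return [
        f"{name} {'raised' if v > 0 else 'lowered'} the score by {abs(v):.2f}"
        for name, v in ranked
    ]


instance = {"income": 3.0, "debt": 1.0, "years_employed": 2.0}
baseline = {"income": 1.0, "debt": 0.0, "years_employed": 0.0}

phi = shapley_values(instance, baseline)
sentences = explain_for_user(phi)
```

The contributions in `phi` add up to the difference between the prediction for `instance` and the prediction for `baseline`. Which wording, ordering and level of detail `explain_for_user` should use is exactly the part that needs to be designed together with the recipient.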

What’s next?

As this article shows, making AI explainable can be quite a big challenge. Explaining AI-based decisions is not a neutral process, and neither algorithms nor context are static. Furthermore, explainability covers a wide range of methods and research areas.

To us, a few things are important to always keep in mind:

- Who is demanding explainability? In the end, the users determine what is covered under explainability, what is of importance, and what isn’t. It might very well be that collaboration among developers is not yet the highest priority, while explaining decisions to the public is. Focus on the areas that are important to explain; don’t try to be complete (you won’t be anyway).

- Make predictions traceable and reproducible. Context, organizations, models and explanations are dynamic. It’s therefore important to keep track of your history. Things shouldn’t just be explainable today, but also in a year from now.

- Balance explainability and impact. Explainability is not one-dimensional, and its aspects can even compete (page 21). I have often experienced situations where too much attention was put on preventing bias or on the ethical side of XAI. Although often important, that is only a subset of explainability. Don’t make the mistake of fixating on just one aspect of explainability while ignoring others. Furthermore, please do deploy things, even if it is just a first proof of concept. Only then will organizations be able to make an impact and progress, even if not everything is fully explainable.

- Take regulation into account. There is a lot of regulation coming up in different areas. The European Commission is working on quite a lot of legislation, like the European AI Act, the Data Governance Act, the Digital Markets Act, etc. Furthermore, national governments, like the Danish (company legislation for AI and data ethics) and the Dutch (“AI waakhond”, an AI watchdog), are putting more focus on keeping control of AI.

- Include experts, using feedback loops. Experts can improve algorithms, and should therefore have the opportunity to overrule decisions, provide feedback and argue against decisions. This is only possible when decisions and predictions are explained to them.

- Update your frameworks, rules, guidelines and methods. The world is not static, and neither is your model. Furthermore, explainability is not a neutral or fully quantitative field of science. Keep your frameworks up to date, and keep updating based on (any kind of) feedback.
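The points above about traceable, reproducible predictions and expert feedback loops can be sketched as a small append-only audit log. This is only an illustration under stated assumptions: the names `PredictionRecord` and `AuditLog`, the field layout, and the example values are hypothetical, and the log lives in memory where a real system would need durable storage.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, field, replace
from datetime import datetime, timezone
from typing import Optional


@dataclass
class PredictionRecord:
    model_version: str
    features: dict
    prediction: float
    explanation: dict
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )
    override: Optional[float] = None  # set when an expert overrules the model
    feedback: Optional[str] = None    # the expert's reasoning

    def fingerprint(self) -> str:
        # Same model version and same input always give the same fingerprint,
        # so a prediction can be looked up and re-run later.
        payload = json.dumps(
            {"model_version": self.model_version, "features": self.features},
            sort_keys=True,
        )
        return hashlib.sha256(payload.encode("utf-8")).hexdigest()

    def final_decision(self) -> float:
        return self.prediction if self.override is None else self.override


class AuditLog:
    """Append-only log; entries are serialized to JSON and never edited in place."""

    def __init__(self):
        self._lines = []

    def append(self, record: PredictionRecord) -> None:
        self._lines.append(json.dumps(asdict(record), sort_keys=True))

    def load(self):
        return [PredictionRecord(**json.loads(line)) for line in self._lines]


log = AuditLog()
record = PredictionRecord(
    model_version="credit-model-1.3.0",  # hypothetical version label
    features={"income": 3.0, "debt": 1.0},
    prediction=0.82,
    explanation={"income": 0.30, "debt": -0.10},
)
log.append(record)

# An expert overrules the prediction; the review is logged as a new entry.
reviewed = replace(record, override=0.40, feedback="Income figure is outdated")
log.append(reviewed)

history = log.load()
```

Storing the explanation next to the prediction and the model version is what keeps a decision explainable a year from now, even after the model itself has been updated. Logging the override as a separate entry keeps both the model's original output and the expert's correction available as feedback.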

How we make AI explainable to everyone

AI will complement and augment human capabilities, not replace them. It is up to us, as a society, to steer this in the right direction, such that we can all benefit from the upsides of AI. By doing so, AI will remain an explainable asset for humanity and not become an opaque liability.

At Deeploy, we aim to make AI as transparent as possible to everyone. We made a promise to:

- Make every AI prediction and decision explainable to everyone. We give people the power to understand how decisions are made and which assumptions are used, and we let people give feedback and overrule decisions. Together we can steer, iterate and improve AI models.

- Make and keep AI manageable: people can collaborate on and with AI. Every change in an AI algorithm is made transparent, such that people understand what happens. This results in durable, understandable and trustworthy AI solutions, which assures organizations that their energy is well spent.

- Keep AI accountable, for every type of model or framework. Every decision, explanation or update is traceable and can be reproduced. We help AI comply with regulation by providing the methods to keep control and use AI in a responsible way.