Transparency under the EU AI Act

On the 22nd of January, 2024, an unofficial consolidated text of the latest version of the EU AI Act was distributed online. Transparency is one of the main pillars of the EU's AI regulation. But what does transparency under the EU AI Act entail, and how can organizations comply with the requirements? In this article, we dive into the details.

Who is responsible under the EU AI Act?

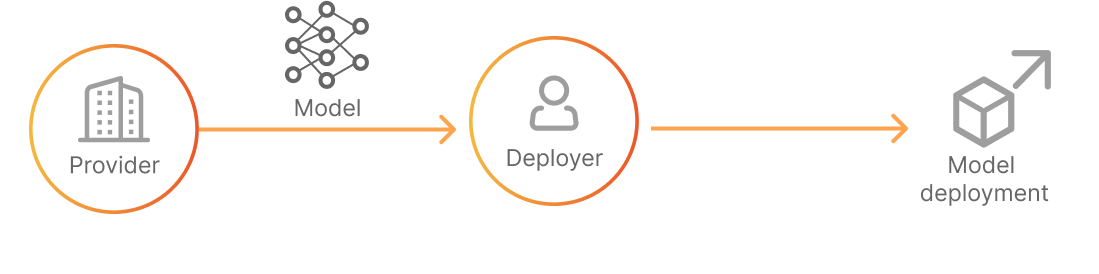

Before we dive into the details, it's important to note that the EU AI Act sets different requirements for the different actors involved in the AI life cycle. The most important roles are defined as follows:

‘Provider’ means a natural or legal person, public authority, agency or other body that develops an AI system or a general-purpose AI model (e.g. an LLM or other large-scale generative AI model) or that has an AI system or a general-purpose AI model developed and places them on the market or puts the system into service under its name or trademark, whether for payment or free of charge;

‘Deployer’ means any natural or legal person, public authority, agency, or other body using an AI system under its authority except where the AI system is used in the course of a personal non-professional activity;

To understand how the distinction between those actors plays out in practice, we sketched two different scenarios:

Use case 1: Companies like OpenAI and Google are providers of the large language models (LLMs) they place on the market. A bank that integrates an LLM from such a provider into its customer service chatbot is a deployer of an AI system.

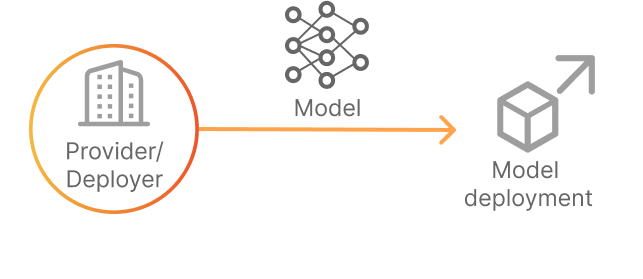

Use case 2: Companies can develop AI systems in-house, for their own use. For example, a bank can develop its own credit risk AI systems and put them into service. In that case, the bank is both a provider and a deployer of an AI system.
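To make the two definitions concrete, the sketch below encodes a deliberately simplified reading of them. The `Actor` fields and the `roles` function are hypothetical illustrations, not terms from the Act; a real role assessment depends on many more details than these four flags.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    """Hypothetical, simplified description of an organization's relation to an AI system."""
    name: str
    develops_system: bool        # develops the AI system, or has it developed
    under_own_name: bool         # places it on the market / puts it into service under its own name or trademark
    uses_under_authority: bool   # uses the AI system under its own authority
    personal_use_only: bool = False  # use in the course of a personal, non-professional activity


def roles(actor: Actor) -> set:
    """Return the roles ('provider', 'deployer') the actor holds under this simplified reading."""
    result = set()
    # Provider: develops (or commissions) the system and offers it under its own name.
    if actor.develops_system and actor.under_own_name:
        result.add("provider")
    # Deployer: uses the system under its own authority, unless the use is purely personal.
    if actor.uses_under_authority and not actor.personal_use_only:
        result.add("deployer")
    return result


# The two use cases described above.
llm_vendor = Actor("LLM vendor", develops_system=True, under_own_name=True, uses_under_authority=False)
bank_chatbot = Actor("Bank (customer service chatbot)", develops_system=False,
                     under_own_name=False, uses_under_authority=True)
bank_credit_model = Actor("Bank (in-house credit risk model)", develops_system=True,
                          under_own_name=True, uses_under_authority=True)

for actor in (llm_vendor, bank_chatbot, bank_credit_model):
    print(actor.name, sorted(roles(actor)))
```

The in-house credit model ends up with both roles, which is exactly the situation the rest of this article focuses on.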

In the remainder of this article, we will dive deeper into the requirements for the second use case, and show how organizations that are both provider and deployer can comply.

EU AI Act requirements for high-risk AI systems

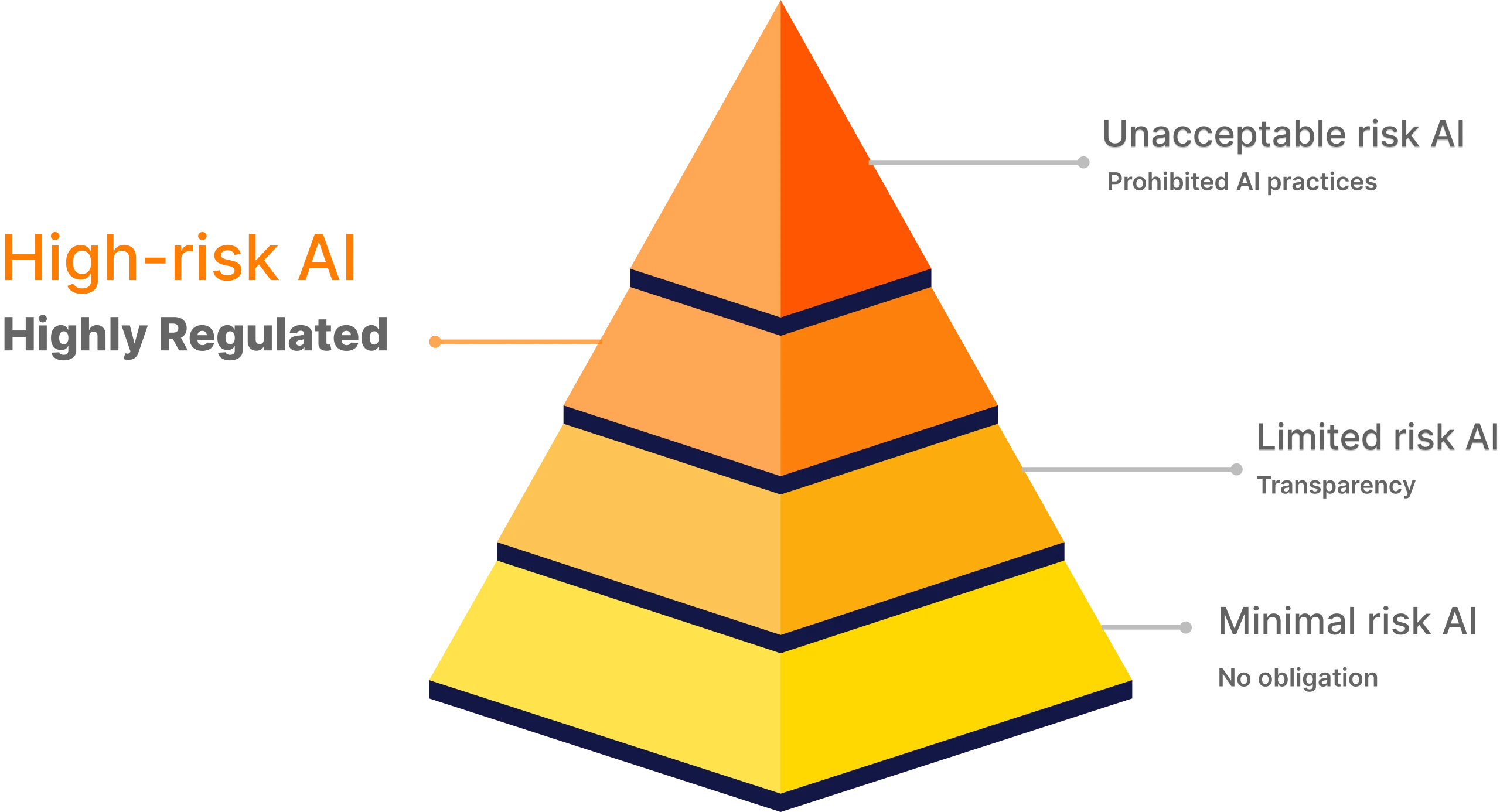

The EU AI Act sets requirements for AI systems based on their risk level. The risk levels are “Unacceptable risk”, “High risk”, “Limited risk” and “Minimal risk”. Only AI systems classified as an unacceptable risk are prohibited, but high-risk systems, which include many of the AI use cases organizations apply today, are subject to strict requirements.

According to the AI Act, an AI system is high-risk in either of the following cases:

The AI system is intended to be used as a safety component of a product, or is itself a product, covered by the Union harmonization legislation listed in Annex II, and is required to undergo a third-party conformity assessment under those Annex II laws. Examples include AI systems used in in vitro diagnostic medical devices.

The AI system falls into one of the areas listed in Annex III (this list can be changed or expanded over time). Examples include AI systems used to evaluate the creditworthiness of natural persons, perform risk assessments in health insurance, or analyze job applications.
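The two routes to a high-risk classification can be sketched as a simple boolean check. This is a simplified illustration under stated assumptions: the `AISystem` fields, the `is_high_risk` helper and the Annex III labels are hypothetical, and only the three Annex III examples named above are included, while the real annex is longer.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical labels for the three Annex III examples named in this article.
# The actual Annex III list is longer and can be amended over time.
ANNEX_III_EXAMPLES = {
    "creditworthiness_of_natural_persons",
    "health_insurance_risk_assessment",
    "job_application_analysis",
}


@dataclass
class AISystem:
    name: str
    annex_ii_product_or_safety_component: bool = False
    third_party_assessment_required: bool = False
    annex_iii_area: Optional[str] = None


def is_high_risk(system: AISystem) -> bool:
    # Route 1: an Annex II product or safety component that needs a third-party conformity assessment.
    annex_ii_route = (system.annex_ii_product_or_safety_component
                      and system.third_party_assessment_required)
    # Route 2: the intended use falls into one of the (here: partially listed) Annex III areas.
    annex_iii_route = system.annex_iii_area in ANNEX_III_EXAMPLES
    return annex_ii_route or annex_iii_route


credit_model = AISystem("credit scoring", annex_iii_area="creditworthiness_of_natural_persons")
ivd_component = AISystem("in vitro diagnostic image analysis",
                         annex_ii_product_or_safety_component=True,
                         third_party_assessment_required=True)
spam_filter = AISystem("e-mail spam filter")

for s in (credit_model, ivd_component, spam_filter):
    print(s.name, "-> high-risk" if is_high_risk(s) else "-> not high-risk via these two routes")
```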

Non-compliance with the rules can lead to fines ranging from 7.5 million euros or 1.5% of global turnover to 35 million euros or 7% of global turnover, depending on the violation and the size of the company. This is why it is vital for organizations to fully understand the EU AI Act and to have the right tools in place to ensure compliance.
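Each fine is expressed as a fixed amount "or" a percentage of turnover. The sketch below computes the resulting cap under an assumption of ours: the higher of the two amounts applies to most companies, and the lower one to SMEs. Only the two tiers mentioned above are included, and the tier names and the `max_fine_eur` helper are hypothetical. Amounts are whole euros and percentages are basis points, so the arithmetic stays exact.

```python
# Tiers quoted in this article: (fixed cap in euros, share of global turnover in basis points).
# 700 bp = 7%, 150 bp = 1.5%. Tier names are illustrative only.
FINE_TIERS = {
    "highest": (35_000_000, 700),
    "lowest": (7_500_000, 150),
}


def max_fine_eur(tier: str, global_turnover_eur: int, is_sme: bool = False) -> int:
    """Maximum fine for a tier, ASSUMING 'whichever is higher' (and 'whichever is lower' for SMEs)."""
    fixed_cap, basis_points = FINE_TIERS[tier]
    turnover_based = global_turnover_eur * basis_points // 10_000  # integer math, no float rounding
    return min(fixed_cap, turnover_based) if is_sme else max(fixed_cap, turnover_based)


print(max_fine_eur("highest", 1_000_000_000))             # large company, EUR 1 billion turnover
print(max_fine_eur("lowest", 1_000_000_000))
print(max_fine_eur("highest", 20_000_000, is_sme=True))   # SME, EUR 20 million turnover
```

For a company with a turnover of 1 billion euros, 7% equals 70 million euros, which exceeds the fixed 35 million euros, so under this assumption the upper cap is 70 million euros.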

Zooming in on transparency requirements

In the EU AI Act, transparency is defined as follows:

Transparency means that AI systems are developed and used in a way that allows appropriate traceability and explainability while making humans aware that they communicate or interact with an AI system, as well as duly informing deployers of the capabilities and limitations of that AI system and affected persons about their rights.

This definition of transparency contains several requirements that organizations must understand and apply to their AI systems to comply with the EU AI Act. Below, we dive into each of them in detail.

Explainability of AI systems

Article 13 of the EU AI Act specifies that high-risk AI systems should be designed and developed

“…in such a way to ensure that their operation is sufficiently transparent to enable deployers to interpret the system’s output and use it appropriately. An appropriate type and degree of transparency shall be ensured with a view to achieving compliance with the relevant obligations of the provider and deployer.”

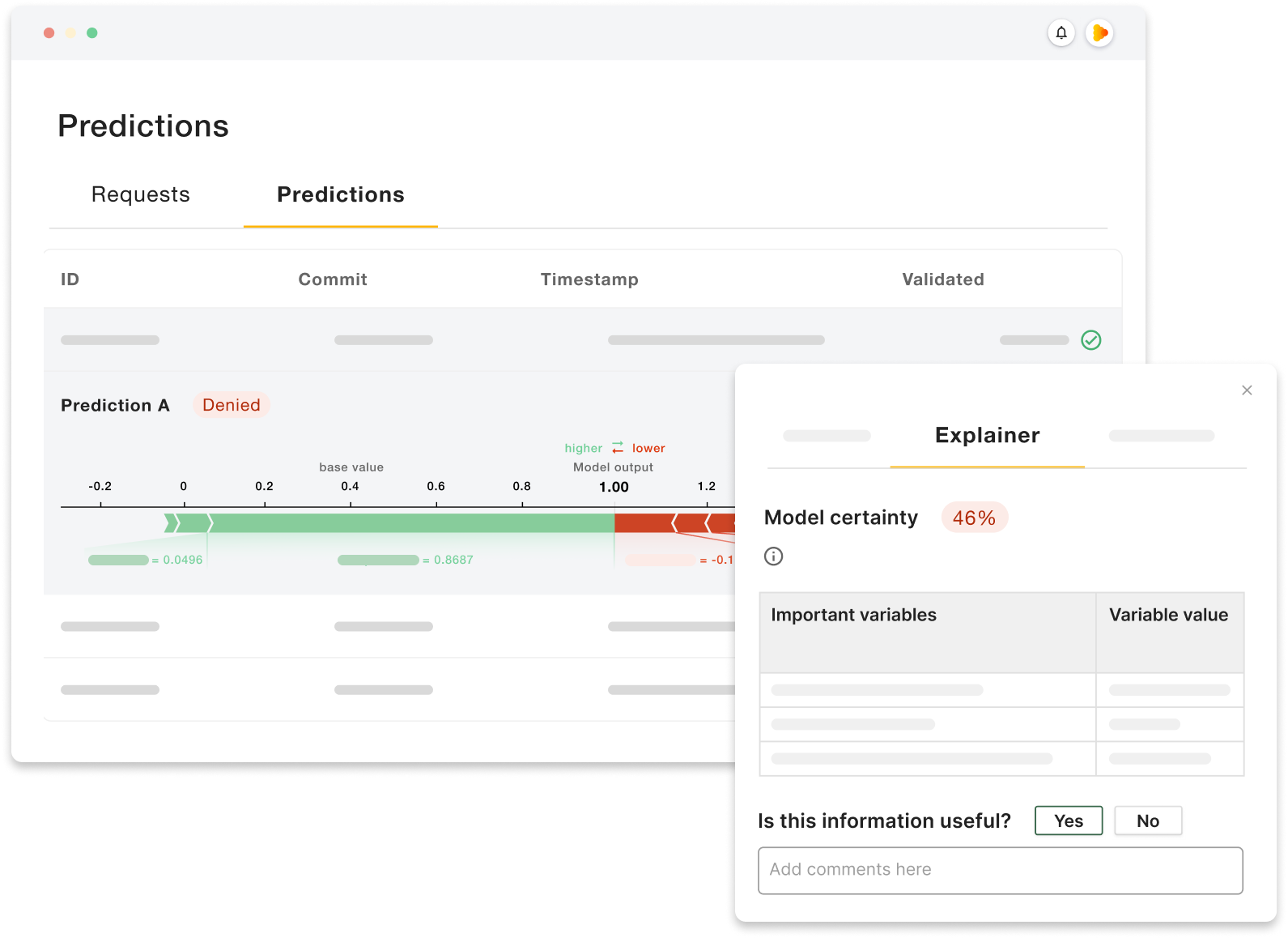

To enable deployers to interpret the system’s output and use it appropriately, the AI system must be explainable. Explainability can be achieved with explainable AI (XAI) techniques: a set of processes and methods that allows human users to comprehend and trust the results and output created by AI models.
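As a minimal example of a feature importance explanation, the sketch below attributes a linear credit-risk score to its inputs by comparing each feature with a baseline, for instance the average applicant. The model, feature names, weights and baseline are hypothetical. For a linear model with a mean baseline and independent features, these contributions coincide with SHAP values; non-linear models need a model-agnostic method such as Kernel SHAP instead.

```python
# Hypothetical linear credit-risk model: score = BIAS + sum(weight * feature value).
WEIGHTS = {"income_k_eur": -0.02, "debt_ratio": 1.5, "missed_payments": 0.4}
BIAS = -1.0
# Assumed baseline to compare against, e.g. the average applicant in the training data.
BASELINE = {"income_k_eur": 50.0, "debt_ratio": 0.3, "missed_payments": 0.0}


def risk_score(x: dict) -> float:
    return BIAS + sum(WEIGHTS[f] * x[f] for f in WEIGHTS)


def feature_contributions(x: dict) -> dict:
    """How much each feature moves x's score away from the baseline score."""
    return {f: WEIGHTS[f] * (x[f] - BASELINE[f]) for f in WEIGHTS}


applicant = {"income_k_eur": 30.0, "debt_ratio": 0.6, "missed_payments": 2}
contribs = feature_contributions(applicant)

# Print features from strongest to weakest influence on this prediction.
for feature, value in sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True):
    print(f"{feature:>16}: {value:+.2f}")

# The contributions add up to score(applicant) - score(baseline), up to float rounding.
print(round(sum(contribs.values()), 6), round(risk_score(applicant) - risk_score(BASELINE), 6))
```

The additivity check in the last line is what makes this kind of explanation auditable: every point of the score is accounted for by a named feature.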

Explainability must also be tailored to the stakeholder the explanation is meant for. Within a bank, the deployer could be represented by the management of the business unit in which the AI system is implemented, or by the employees within that unit (e.g. financial advisors for loans) who use the AI system for decision support. These employees need a different explanation than management does, which highlights the importance of tailoring explainability.
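The same feature attributions can be presented differently per audience. In this sketch, a hypothetical `advisor_explanation` turns the contributions behind one decision into a short sentence for a loan advisor, while a hypothetical `management_summary` ranks features by their mean absolute contribution across many decisions, a common global importance measure. The feature names and numbers are made up for illustration.

```python
from statistics import mean


def advisor_explanation(contribs: dict, top_k: int = 2) -> str:
    """Plain-language explanation of a single decision, naming its top_k strongest drivers."""
    ranked = sorted(contribs.items(), key=lambda kv: abs(kv[1]), reverse=True)[:top_k]
    parts = [
        f"{name} {'raised' if value > 0 else 'lowered'} the risk score by {abs(value):.2f}"
        for name, value in ranked
    ]
    return "; ".join(parts)


def management_summary(batch: list) -> list:
    """Global view over many decisions: features ranked by mean absolute contribution."""
    features = batch[0].keys()
    importance = {f: mean(abs(c[f]) for c in batch) for f in features}
    return sorted(importance.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical per-feature contributions for three loan applications.
decisions = [
    {"income": 0.40, "debt_ratio": 0.45, "missed_payments": 0.80},
    {"income": -0.30, "debt_ratio": 0.10, "missed_payments": 0.00},
    {"income": 0.20, "debt_ratio": -0.60, "missed_payments": 0.40},
]

print(advisor_explanation(decisions[0]))  # local explanation for the advisor
for feature, importance in management_summary(decisions):  # global view for management
    print(f"{feature}: {importance:.2f}")
```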

Deeploy helps organizations implement explainability by offering both standardized and custom explainers. For example, standardized feature importance explanations for AI model predictions are available. Deeploy also supports the deployment of SHAP Kernel, Anchor, MACE, and PDP explainers, as well as custom explainer Docker images.

Read a real case on how bunq utilized Deeploy to implement explainability in their transaction monitoring processes.

Traceability of AI systems

Article 12 of the EU AI Act specifies that high-risk AI systems shall “technically allow for the automatic recording of events (‘logs’) over the duration of the lifetime of the system.”

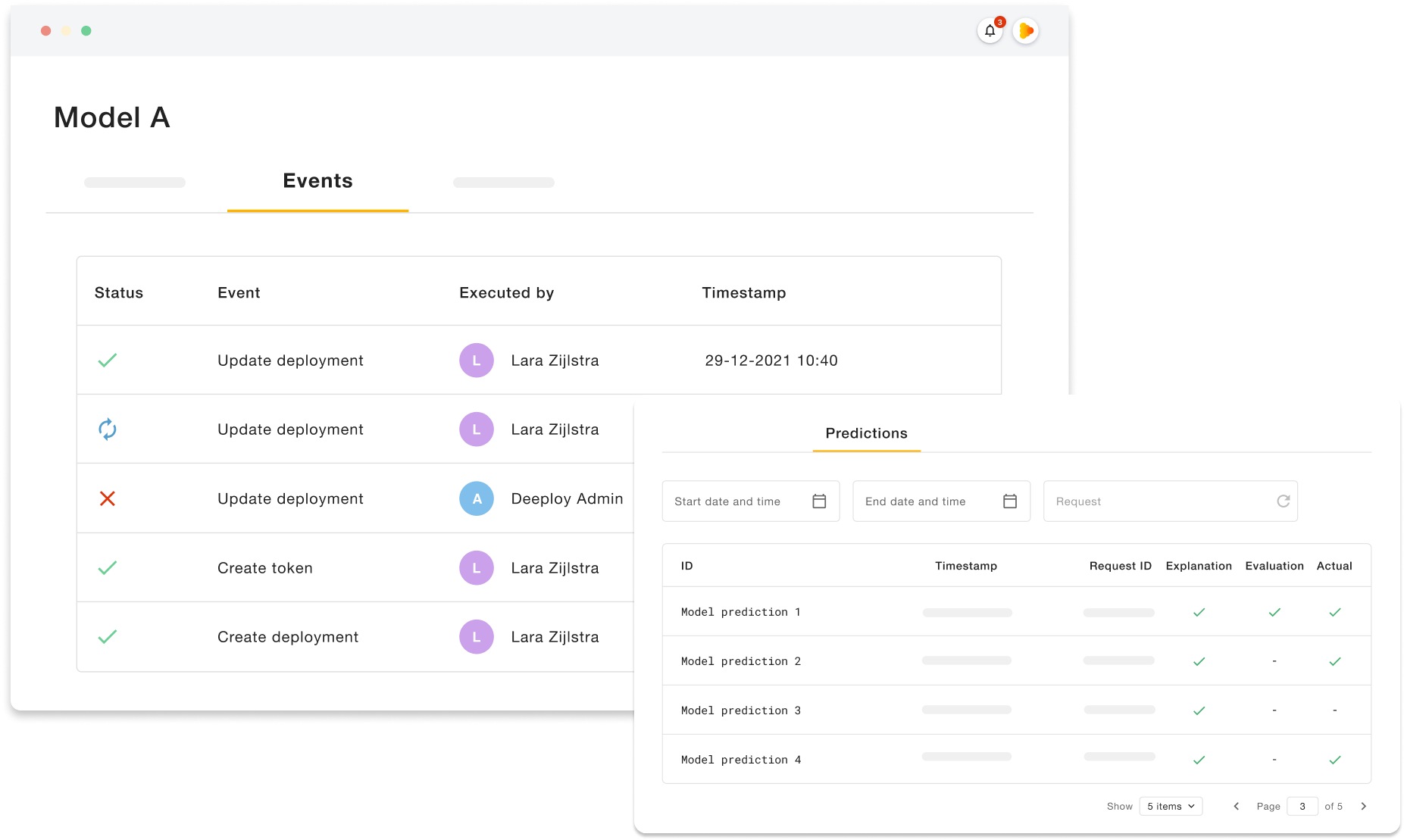

Automatic recording of events is essential for keeping full control of an AI system throughout its lifetime. When implementing automatic event recording for an AI system in operation, it is key to track all input data, predictions, human feedback, actuals (the observed real-world outcomes), and any modifications and updates to the model deployment.
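A minimal way to record these events is an append-only log with one JSON object per line. The sketch below uses only the Python standard library; the event types, field names and the `record_event` helper are hypothetical, and it writes to an in-memory stream so it runs anywhere (a file opened in append mode works the same way).

```python
import io
import json
import uuid
from datetime import datetime, timezone
from typing import Optional, TextIO

# Hypothetical event types covering what this article recommends tracking.
EVENT_TYPES = {"prediction", "human_feedback", "actual", "deployment_update"}


def record_event(log: TextIO, event_type: str, payload: dict,
                 prediction_id: Optional[str] = None) -> dict:
    """Append one event as a JSON line to an append-only log and return the event."""
    if event_type not in EVENT_TYPES:
        raise ValueError(f"unknown event type: {event_type}")
    event = {
        "event_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "event_type": event_type,
        "prediction_id": prediction_id,  # links feedback and actuals back to the original prediction
        "payload": payload,
    }
    log.write(json.dumps(event) + "\n")
    return event


# Usage with an in-memory log; in practice this could be open("events.jsonl", "a").
log = io.StringIO()
record_event(log, "deployment_update", {"model_version": "credit-risk:v3"})
record_event(log, "prediction", {"model_version": "credit-risk:v3",
                                 "input": {"income_k_eur": 30, "debt_ratio": 0.6},
                                 "output": "reject"}, prediction_id="p-001")
record_event(log, "human_feedback", {"reviewer": "advisor-17", "decision": "approve"},
             prediction_id="p-001")
record_event(log, "actual", {"outcome": "repaid"}, prediction_id="p-001")
print(log.getvalue())
```

Storing the model version on every prediction event, rather than only on deployment updates, keeps each record interpretable on its own.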

If implemented correctly, deployers of AI systems should always be able to trace back a prediction, or a decision made based on it. This includes tracing back the model version used to make the prediction, the input data used for the prediction, any human validation or overruling of the prediction, and, if applicable, the actual outcome.
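Tracing back a prediction then amounts to collecting every logged event linked to its ID. The sketch below assumes each event is a dictionary with a `prediction_id`, an `event_type`, an ISO 8601 UTC `timestamp` and a `payload`; these field names, the `trace_prediction` helper and the sample events are hypothetical.

```python
def trace_prediction(events: list, prediction_id: str) -> dict:
    """Reconstruct the audit trail of one prediction from a list of logged events."""
    trail = {"model_version": None, "input": None, "prediction": None,
             "human_review": None, "actual": None}
    related = [e for e in events if e.get("prediction_id") == prediction_id]
    # ISO 8601 UTC timestamps in the same format sort chronologically as strings.
    for event in sorted(related, key=lambda e: e["timestamp"]):
        payload = event["payload"]
        if event["event_type"] == "prediction":
            trail["model_version"] = payload["model_version"]
            trail["input"] = payload["input"]
            trail["prediction"] = payload["output"]
        elif event["event_type"] == "human_feedback":
            trail["human_review"] = payload  # the most recent review wins
        elif event["event_type"] == "actual":
            trail["actual"] = payload["outcome"]
    return trail


# Hypothetical logged events for two predictions.
events = [
    {"timestamp": "2024-02-01T10:00:00+00:00", "event_type": "prediction", "prediction_id": "p-001",
     "payload": {"model_version": "credit-risk:v3",
                 "input": {"income_k_eur": 30, "debt_ratio": 0.6}, "output": "reject"}},
    {"timestamp": "2024-02-01T10:05:00+00:00", "event_type": "human_feedback", "prediction_id": "p-001",
     "payload": {"reviewer": "advisor-17", "decision": "approve"}},
    {"timestamp": "2024-02-01T11:00:00+00:00", "event_type": "prediction", "prediction_id": "p-002",
     "payload": {"model_version": "credit-risk:v3",
                 "input": {"income_k_eur": 80, "debt_ratio": 0.2}, "output": "approve"}},
    {"timestamp": "2024-08-01T00:00:00+00:00", "event_type": "actual", "prediction_id": "p-001",
     "payload": {"outcome": "repaid"}},
]

print(trace_prediction(events, "p-001"))
```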

By providing automatic end-to-end traceability for AI systems, Deeploy enables organizations to implement automatic recording of events for their AI systems.

Mandatory technical documentation

Article 11 of the EU AI Act specifies that “the technical documentation of a high-risk AI system shall be drawn up before that system is placed on the market or put into service and shall be kept up-to-date.”

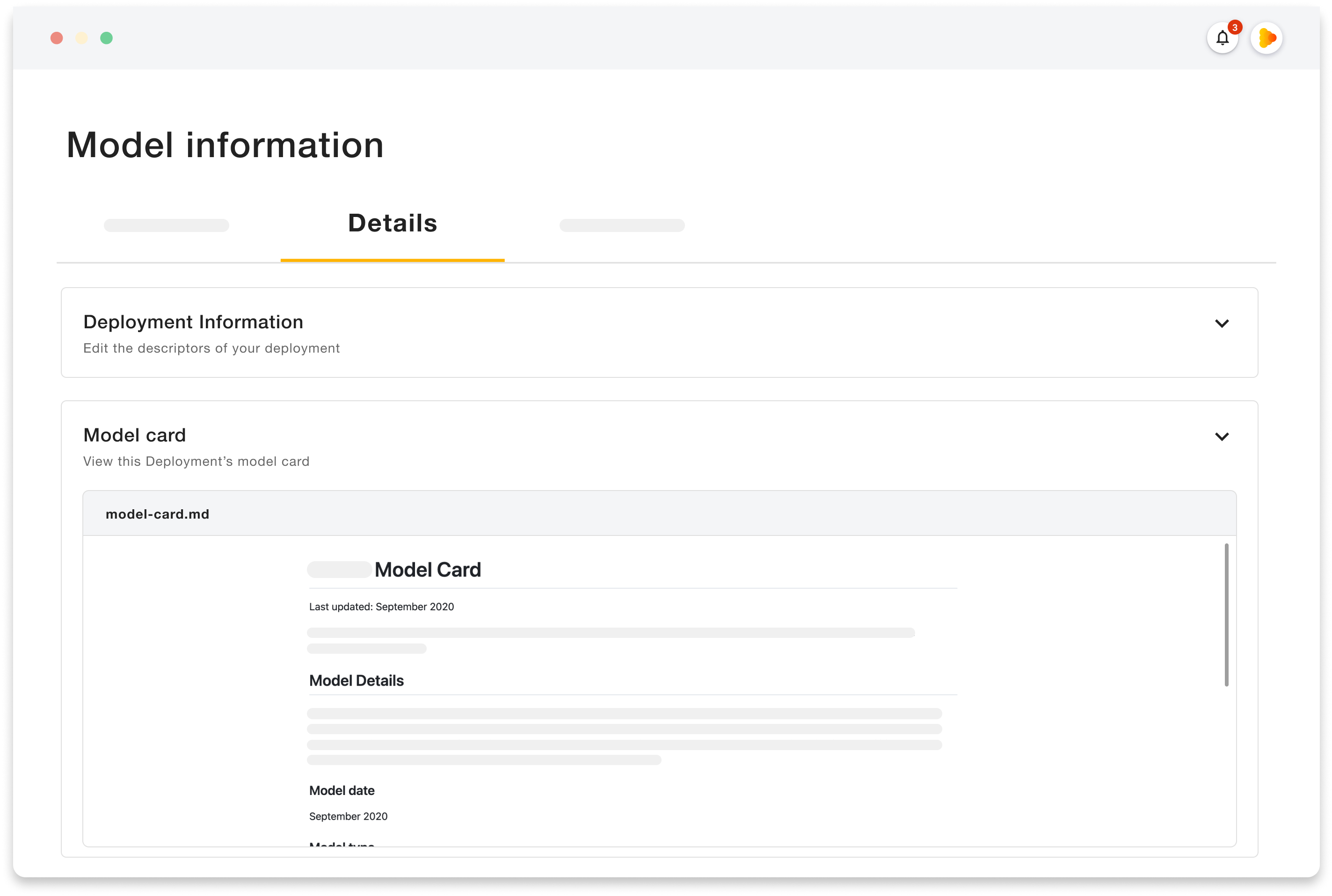

The technical documentation needs to contain, among other things, a general description of the AI system, a detailed description of the elements of the AI system and the process for its development, and detailed information about the monitoring, functioning, and control of the AI system.
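One lightweight way to make sure none of these elements is forgotten is to check a documentation record for the required sections before a model goes live. The section keys and helpers below are hypothetical and mirror only the three elements listed above; since the Act requires more than these, a real checklist would be longer.

```python
# Hypothetical section keys for the elements of technical documentation named above.
REQUIRED_SECTIONS = (
    "general_description",
    "elements_and_development_process",
    "monitoring_functioning_and_control",
)


def missing_sections(doc: dict) -> list:
    """Return the required sections that are absent or empty in a documentation record."""
    return [s for s in REQUIRED_SECTIONS if not (doc.get(s) or "").strip()]


def ready_for_release(doc: dict) -> bool:
    """Gate: documentation must be complete before the system is placed on the market or put into service."""
    return not missing_sections(doc)


# Hypothetical draft documentation for a credit risk model.
draft = {
    "general_description": "Credit risk model scoring consumer loan applications.",
    "elements_and_development_process": "Model components, training data and development steps.",
    "monitoring_functioning_and_control": "",
}
print(missing_sections(draft))
print(ready_for_release(draft))
```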

To keep the technical documentation alive and up-to-date, it is recommended to store it close to the actual environment in which the monitoring, functioning, and control of the AI system during its life cycle takes place. To do so, organizations can use a central AI management system that brings together model serving, monitoring, and documentation in one place.

Deeploy brings these capabilities together in one platform and integrates with Git version control to align technical documentation with the correct model version. Deeploy displays all up-to-date information about the model in one place, so that (technical) documentation and assessments for each AI system are available to all stakeholders.
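The general idea of aligning documentation with model versions can be sketched by keying documentation on the Git commit of the model it describes (for example, the output of `git rev-parse HEAD` at build time) and flagging deployments whose commit has no matching documentation. The data structures, short commit hashes and the `undocumented_deployments` helper are hypothetical and do not describe Deeploy's implementation.

```python
from dataclasses import dataclass


@dataclass
class Deployment:
    name: str
    model_commit: str  # Git commit of the model version currently being served


def undocumented_deployments(deployments: list, docs_by_commit: dict) -> list:
    """Return deployments whose served model commit has no documentation recorded for it."""
    return [d for d in deployments if d.model_commit not in docs_by_commit]


# Hypothetical documentation index: commit -> path of the documentation for that model version.
docs_by_commit = {
    "3f2a9c1": "docs/credit-risk/v3.md",
}
deployments = [
    Deployment("credit-risk", "3f2a9c1"),
    Deployment("fraud-detection", "b71e004"),  # model updated, documentation not yet written
]

for d in undocumented_deployments(deployments, docs_by_commit):
    print(f"{d.name}: no documentation for commit {d.model_commit}")
```

Running such a check whenever a deployment changes is one way to keep the documentation from silently drifting away from the model in production.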

Read more about how PGGM, one of the largest pension administrators and asset managers in Europe, utilized Deeploy to ensure governance and compliance by bringing documentation and model serving together in one central place.

Complying with the EU AI Act

With heavy fines at stake and enforcement of AI regulation now certain, organizations must take a proactive approach. By preparing adequately for compliance, they can concentrate more of their efforts on innovation and stay at the forefront of AI applications.

Deeploy aims to take that burden off organizations by providing a best-of-breed solution for the responsible deployment of AI. It offers the tools that allow data scientists to deploy and monitor models, along with tools that provide stakeholders with explainability, traceability, and governance.

Curious to see it in practice? Book a demo with one of our experts.