What is Responsible AI?

Responsible AI is the practice of designing, developing, operating, and monitoring AI systems while considering and minimizing their potential harm to individuals and society. This means AI should be human-centered, fair, inclusive, and respectful of human rights and democracy, and should aim to contribute positively to the public good.

The importance and benefits of Responsible AI

While AI systems have immense potential, their use also raises concerns. They can perpetuate biases that stem from biased training data or algorithmic design, and instilling ethical values in these systems is a challenge. This is especially concerning for high-risk use cases, such as credit scoring, where AI decisions have more serious consequences.

In addition, since AI systems frequently use personal data, there are also concerns about how to guarantee the privacy and security of that data.

Moreover, as AI becomes more advanced, the security risks related to its misuse also increase: the power of AI can be used to develop more sophisticated cyber-attacks, bypass security measures, and exploit vulnerabilities.

A responsible approach to AI enables organizations to manage these risks while increasing stakeholder trust in the AI systems they implement.

Finally, implementing responsible AI also helps organizations keep up with the latest regulatory developments.

The principles of Responsible AI

There is no universal consensus on what exactly constitutes a responsible approach to AI. However, reputable organizations such as the OECD have put forth a set of broad principles:

- AI should benefit people and the planet

- AI systems should be designed in a way that respects the rule of law, human rights, democratic values and diversity

- AI systems should be transparent in order to ensure that their outcomes are understood by relevant stakeholders

- AI systems must function in a robust, secure and safe way throughout their life cycle, and potential risks should be continually assessed and managed

- Organizations and individuals developing, deploying or operating AI systems should be held accountable for their proper functioning

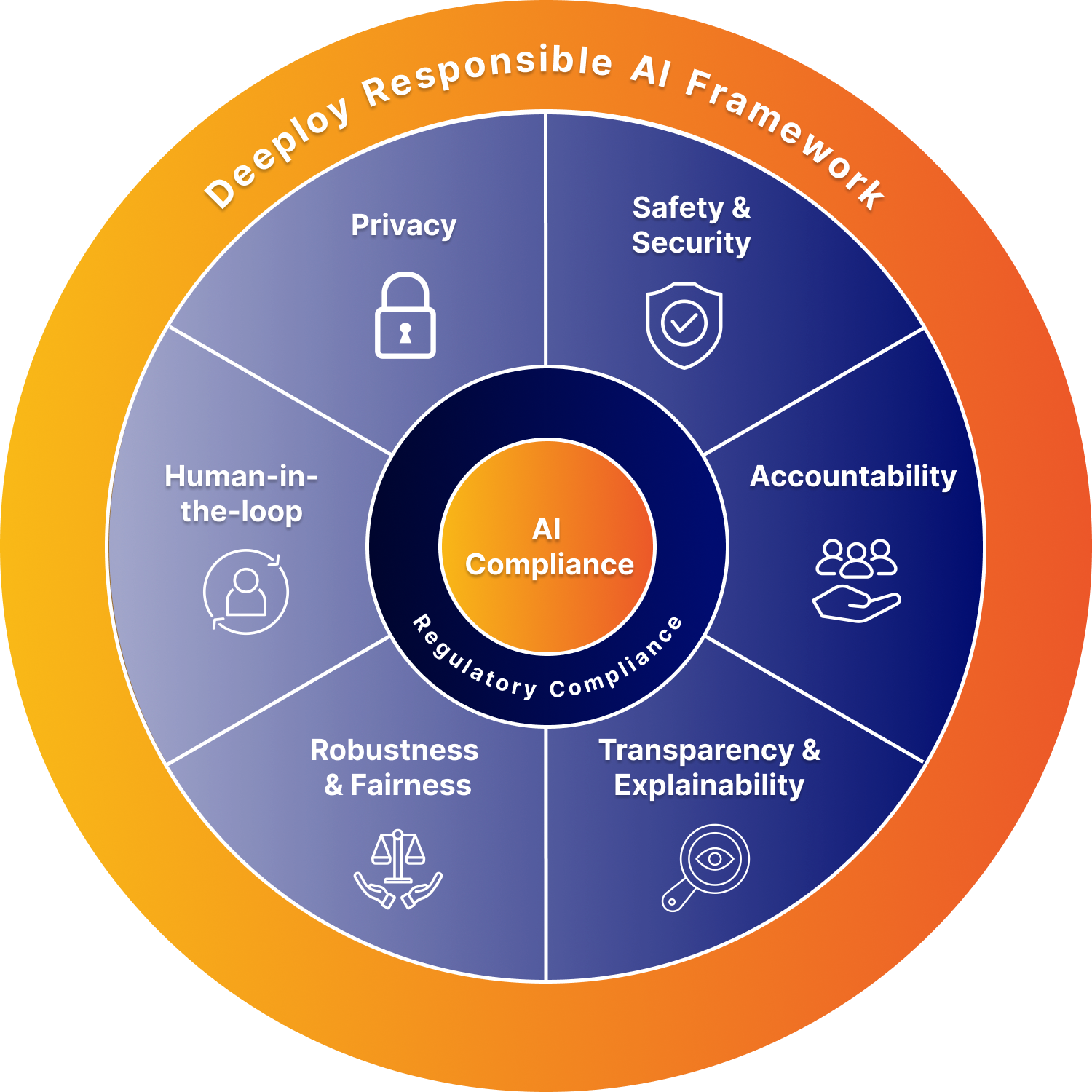

Based on these principles and our own expertise, we have put forward the following Responsible AI framework to help organizations guide their AI efforts.

Machine learning models are built on data and, especially in high-risk use cases, they are built on personal data. As such, it is vital to ensure privacy while processing and storing any of this data. Besides implementing access control and secure data encryption, organizations must also make sure model predictions, input data, and features are stored securely.

Similarly, when dealing with sensitive data, it is also crucial to have a good safety and security system in place. This starts with the organization’s own procedures and policies but also extends to the operationalization of machine learning models.

Responsibility for an AI system has to be explicitly assigned. The individuals responsible should be accountable not only for the proper functioning of the system but also for its operation in accordance with regulatory frameworks and company policies.

Transparency involves disclosing when an AI system is used and how said system was trained, developed, and deployed. Besides that, transparency also involves stakeholders being able to understand model predictions and decisions. For this to be possible, the decisions and predictions these systems generate should be explainable and understandable.

In practice, stakeholders should be able to get answers to questions such as: Which general trends does the model represent? Which feature contributed most to a given prediction or decision?
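The question of which feature contributed most can be illustrated with a minimal sketch. It assumes a simple linear scoring model, where each feature's contribution to one prediction is its weight multiplied by its value. The feature names, weights, and applicant values are hypothetical, and more complex models would need a dedicated explanation technique.

```python
def feature_contributions(weights, features):
    """Return each feature's contribution (weight * value) to a linear score."""
    return {name: weights[name] * value for name, value in features.items()}


def top_contributor(contributions):
    """Return the feature whose contribution has the largest absolute value."""
    return max(contributions, key=lambda name: abs(contributions[name]))


# Hypothetical weights and applicant values, for illustration only.
weights = {"income": 0.5, "debt_ratio": -2.0, "years_employed": 0.25}
applicant = {"income": 3.0, "debt_ratio": 1.0, "years_employed": 4.0}

contributions = feature_contributions(weights, applicant)
print(contributions)
print(top_contributor(contributions))
```

Here the negative contribution of `debt_ratio` outweighs the other two features, which is the kind of statement a stakeholder can actually act on.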

Robustness and fairness both aim to address the inevitable flaws of real-world data. While fairness ensures that a model’s predictions do not unethically discriminate against individuals or groups, model robustness refers to the ability of a machine learning model to withstand or overcome adverse conditions such as errors, drift, inconsistencies in the system, or security risks.
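One way to make the fairness idea concrete is to compare how often each group receives a positive prediction. The sketch below computes that gap, often called the demographic parity difference. It is only one of several possible fairness measures and is chosen here as an assumption, not as the metric this framework prescribes; the predictions and group labels are made up.

```python
def positive_rate(predictions):
    """Share of predictions that are positive (1)."""
    return sum(predictions) / len(predictions)


def demographic_parity_difference(predictions, groups):
    """Return the largest gap in positive rate between groups, plus the rates."""
    by_group = {}
    for pred, group in zip(predictions, groups):
        by_group.setdefault(group, []).append(pred)
    rates = {group: positive_rate(preds) for group, preds in by_group.items()}
    return max(rates.values()) - min(rates.values()), rates


# Hypothetical binary decisions (1 = approved) and group labels.
predictions = [1, 0, 1, 1, 0, 0, 1, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

gap, rates = demographic_parity_difference(predictions, groups)
print(rates)
print(gap)
```

A gap close to zero means the groups are approved at similar rates. What size of gap is acceptable is a policy decision, not something the code can settle.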

Human oversight is defined as the capability for human intervention throughout the complete ML lifecycle. In practice, this means that humans are not only involved in setting up, training, and testing the models but are also able to give feedback on model predictions and decisions. Having a human in the loop also provides a vital layer of responsibility and judgment that machines may lack.
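A human-in-the-loop setup can be sketched as a routing rule: confident predictions are accepted automatically, while the rest are held for a reviewer whose decision is recorded. The function names, the 0.8 threshold, and the record layout below are assumptions made for illustration, not part of any specific product or library.

```python
def route_prediction(label, confidence, threshold=0.8):
    """Accept confident predictions; send the rest to a human reviewer."""
    if confidence >= threshold:
        return {"decision": label, "decided_by": "model"}
    # Below the threshold the model only suggests; a person decides.
    return {"decision": None, "decided_by": "human_review", "suggested": label}


def apply_human_feedback(routed, human_label):
    """Record the reviewer's decision and whether it overrode the model."""
    return {
        "decision": human_label,
        "decided_by": "human",
        "overrode_model": human_label != routed["suggested"],
    }


auto = route_prediction("approve", 0.93)
pending = route_prediction("reject", 0.55)
final = apply_human_feedback(pending, "approve")
print(auto)
print(pending)
print(final)
```

Logging whether the reviewer overrode the model gives a simple feedback signal that can later inform retraining or threshold tuning.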

Responsible AI + Compliance & Regulation

To address concerns about ethical usage, regulations around AI, such as the comprehensive EU AI Act, are being implemented across nations.

Compliance with upcoming legislation is crucial and, because that legislation is still evolving, companies and institutions must adopt a proactive stance and anticipate forthcoming rules.

This translates into looking at the implementation of AI through the lens of responsibility, ensuring AI systems are used for the good, and not the harm, of society and individuals.

All in all, responsible AI is not about checking off certain requirements; it is about taking a well-rounded approach to the implementation of AI, considering legal, internal, and ethical boundaries.

Implementing Responsible AI

While the majority of companies and organizations are experimenting with AI, the maturity of these AI developments differs greatly. As a result, the extent of responsible AI practices also varies widely from organization to organization. For example, less mature companies might be more focused on getting something into production than on the ethical implications of that implementation.

However, even if AI implementation is still at a very early stage, responsible AI should not be ignored. Ignoring it will only bring challenges in the future. Instead, organizations should treat Responsible AI practices and considerations as a prerequisite for starting any machine learning model implementation.

Leveraging the resources and structure they have, organizations should then adapt existing responsible AI frameworks to their needs and responsibilities and make sure those principles are followed. Organizations should also be transparent and inform stakeholders of how and to what extent Responsible AI is being implemented.

Navigating the Future with Responsible AI

Responsible AI allows organizations to harness the potential of artificial intelligence while safeguarding individuals and society from harm. It strives to contribute positively to the public good, recognizing the challenges posed by biases, privacy concerns, and security risks associated with AI systems.

Furthermore, in a rapidly evolving landscape of AI regulations, adopting responsible AI practices keeps organizations in compliance and ensures they remain at the forefront of ethical and legal developments.

Ultimately, responsible AI is not merely a checkbox but a comprehensive framework that organizations must integrate into their AI strategies. It serves as a foundation for building AI systems that benefit society while upholding ethical standards, ensuring that the promise of AI is realized responsibly.