How do we keep control of generative AI?

Your timelines have probably been as filled as mine with the amazing and sometimes scary world of ChatGPT and generative AI. With the arrival of ChatGPT, a broader audience is starting to realize that AI is becoming an ever larger part of our work and day-to-day lives.

For those who missed it: ChatGPT is a prototype artificial intelligence chatbot developed by OpenAI. It is trained on a huge dataset consisting of millions of articles and other sources of information, and fine-tuned with human feedback using a method called Reinforcement Learning from Human Feedback. It can produce information in tight sentences and explain concepts in ways that people can understand. It can deliver facts, and it can also generate business plans, term paper topics, and other new ideas from scratch.

It’s easy to underestimate how much the world can change within a lifetime. The exact effects of AI on our future are hard to predict and vary per industry. Every technology comes with both positive and negative consequences, and it’s believed that those of AI can become particularly far-reaching. It’s important to think early on about both the wide range of possibilities and the controls needed to work responsibly with these technologies. A technology with such a large impact on our society needs to be of interest to people across that entire society. However, it is currently steered by a small group of techies, entrepreneurs, and investors.

According to Reid Blackman, author of the book “Ethical Machines”, there are a number of ethical risks and opportunities related to ChatGPT. For me, this underlines the importance of thinking about ChatGPT’s ethical and societal implications, while realizing that we simply don’t know which technologies will arrive in the near future. Every organization needs a proactive and flexible approach to ethical risk assessment. An organization cannot just do “ethical risks of social media” or “ethical risks of data privacy” or “ethical risks of discriminatory models.” That’s too narrow. It is difficult to tell how technologies will emerge, how they will interact, and how they will affect your organization’s risks.

A robust digital ethical risk program should accommodate our “ignorance of the future” and the necessity of responding to its unpredictability. Without that, we’re just passively waiting to see how society gets reshaped and people get harmed, without intervening.

Some context about ChatGPT and generative AI

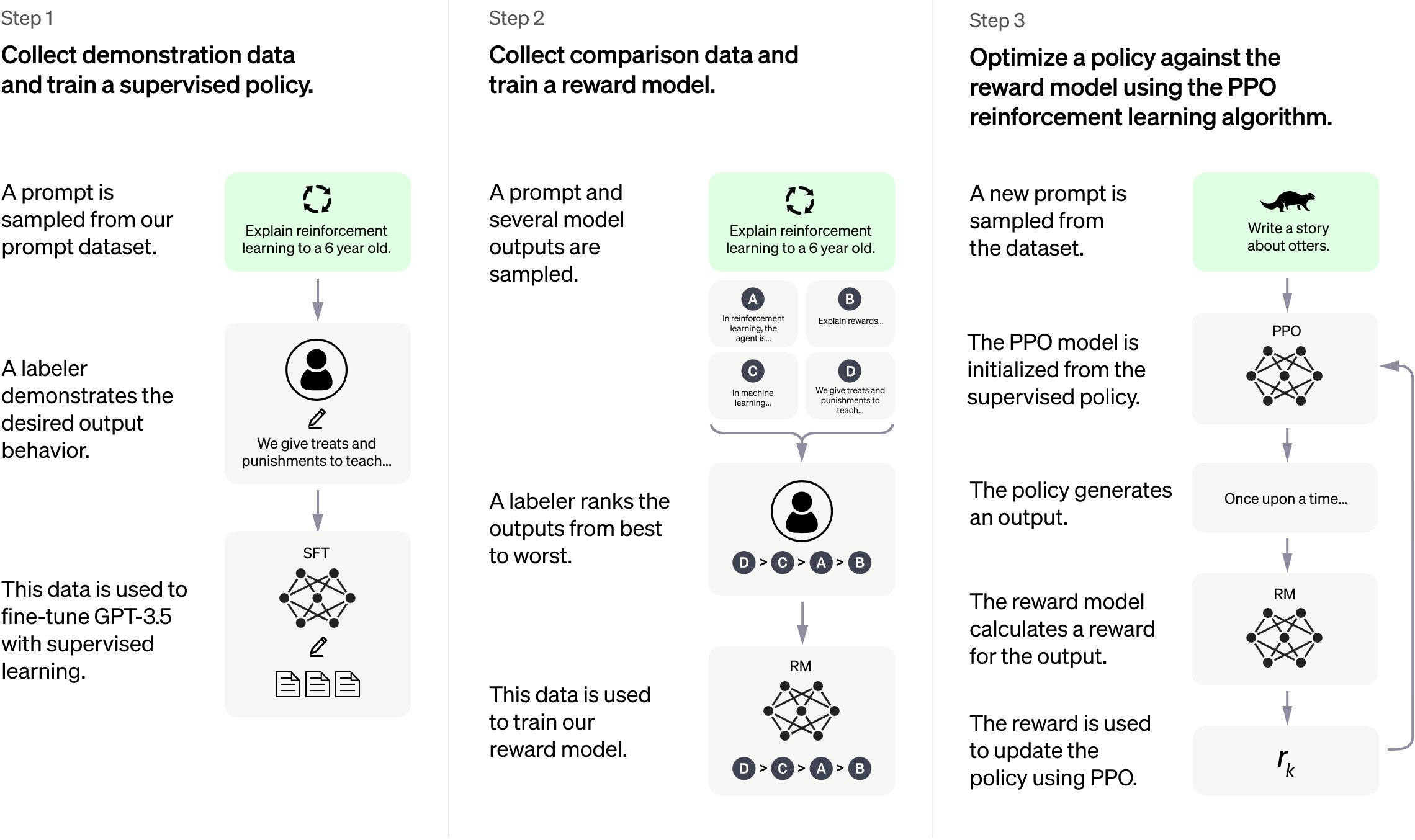

ChatGPT is trained using Reinforcement Learning from Human Feedback (RLHF). An initial model is first trained with supervised fine-tuning on example conversations written by human trainers. Human rankings of alternative model responses are then used to train a reward model, and the chatbot is further optimized against that reward with reinforcement learning. Below is an illustrative overview of the methods used to train and fine-tune ChatGPT. ChatGPT is relatively small compared to, e.g., GPT-3, and is therefore better suited for real-time applications such as chatbots.

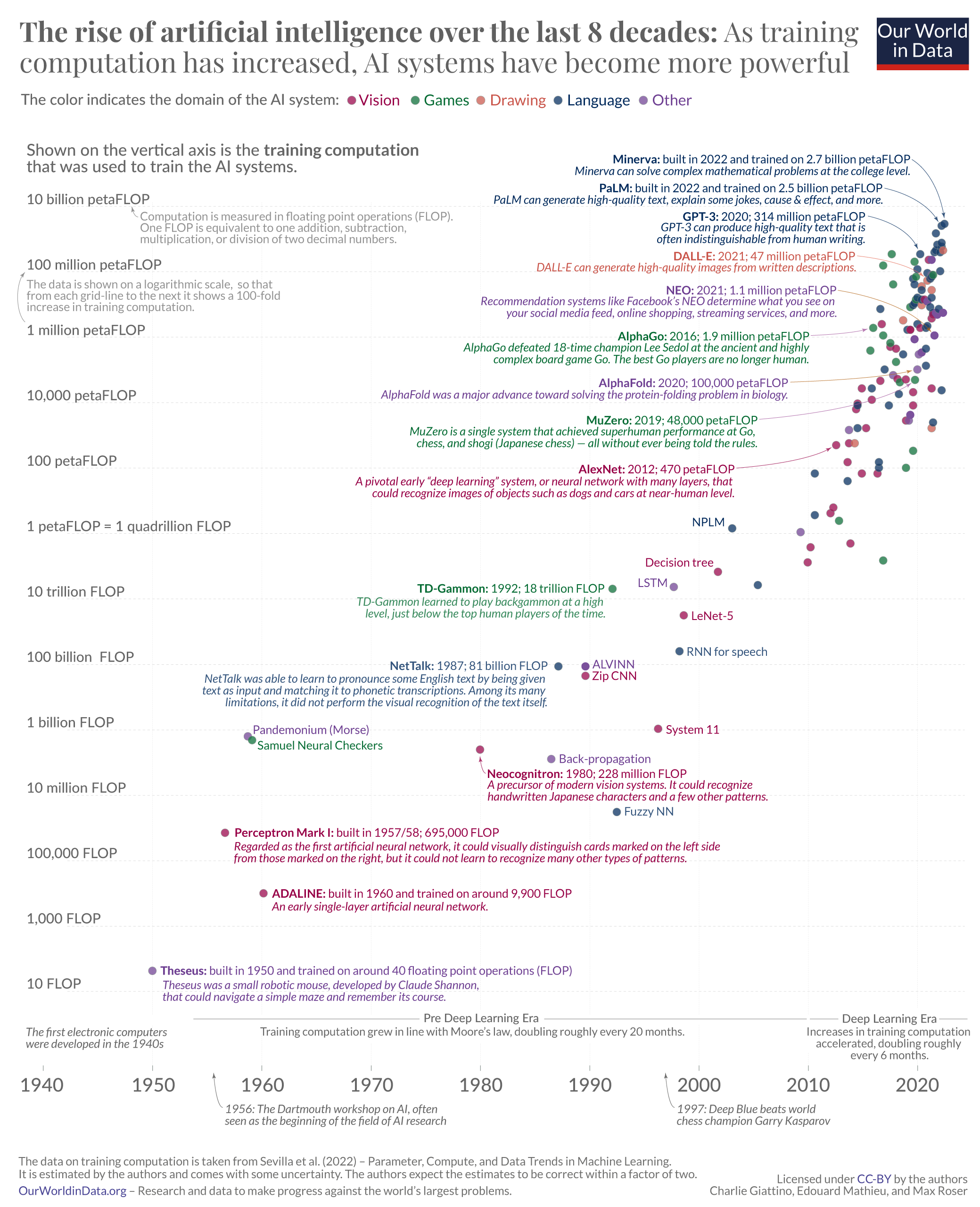
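To make the human-feedback step more concrete, here is a minimal sketch of how ranked responses can be turned into a training signal for a reward model. It assumes the pairwise comparison loss that is commonly described for RLHF; the article does not state which loss ChatGPT actually uses, and the function names and reward scores below are hypothetical.

```python
import math


def preference_loss(reward_preferred: float, reward_rejected: float) -> float:
    """Loss for one human comparison: -log(sigmoid(preferred - rejected)).

    Small when the reward model already scores the human-preferred
    response higher, large when it ranks the pair the wrong way round.
    """
    margin = reward_preferred - reward_rejected
    # Numerically stable form of log(1 + exp(-margin)).
    return max(-margin, 0.0) + math.log1p(math.exp(-abs(margin)))


def mean_preference_loss(scored_pairs: list[tuple[float, float]]) -> float:
    """Average the loss over (preferred, rejected) reward pairs."""
    return sum(preference_loss(p, r) for p, r in scored_pairs) / len(scored_pairs)


# Hypothetical reward scores for three human-ranked response pairs.
pairs = [(2.0, 0.5), (0.1, 0.3), (1.2, 1.2)]
print(f"mean loss: {mean_preference_loss(pairs):.3f}")
```

Minimizing this loss pushes the reward model to agree with the human rankings. In the reinforcement learning step, the chatbot is then tuned to produce responses that this reward model scores highly.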

Over the past 10 years, several spectacular AI models have made it to the market, including DALL-E 2, PaLM, AlphaGo and AlexNet. Much of this can be attributed to the rise in computational power, which keeps getting cheaper. The language and image recognition capabilities of AI systems have developed rapidly, and both now outperform humans in repetitive tasks.

Generative AI gets more established

AI is already quite a big part of our day-to-day lives. AI increasingly shapes what we see, what we believe, and what we do. Based on the steady advances in AI technology and the large recent increases in investment, we should expect AI technology to become even more powerful and impactful in the years and decades to come.

A few examples that already improve our (quality of) life are Grammarly, Jasper.AI and WordTune. Grammarly actively helps to avoid typos and improve readability while typing, Jasper.AI provides an AI content platform that helps you break through creative blocks, and WordTune suggests better wording when you write blog posts, articles, or even emails. These tools also help students with their study work, which is arguably either a good or a bad thing.

All these developments foster innovation and creativity, but they also come with a big challenge. As we face new challenges as individuals, we also see companies take action to protect us from misuse and misinformation. Stack Overflow recently published a temporary policy banning ChatGPT to slow down the influx of answers and other content created with it. Stack Overflow states that the average rate of getting correct answers from ChatGPT is too low, resulting in harmful, misleading posts that affect its “volunteer-based quality curation infrastructure”. Stack Overflow still needs to decide what its final policy will be regarding the use of ChatGPT and similar tools. Apparently, ChatGPT agrees with Stack Overflow 😉

How do we keep control of generative AI?

The question, though, is how to steer, orchestrate, integrate and validate such technologies in a responsible way. How do we keep control of these technologies and, for example, provide audit trails showing whether data was generated by humans, machines, or a combination, so that we can distinguish fake from real? And how do we make sure that we do not rely solely on AI, given that human involvement is required in high-risk applications?

Here are some first ideas to distinguish reality from generative AI content, and methods to keep control of decision-making, especially in high-impact situations: