Explainability: the continuous balance between control and complexity

Introduction

More and more organizations, people, and regulators demand control and transparency to cope with the complexity of ML systems. Below we highlight three different markets in which the balance between control and complexity clearly manifests itself, starting with the government, then the financial markets, and ending with business owners in less regulated markets. The mechanics are different, but the conclusion remains the same. Control is gaining weight in discussions and regulations, so we need to find ways to gain control in order to keep innovating! But first, a brief introduction.

The beauty of simplicity

In 2020, Sir Roger Penrose received the Nobel Prize in Physics for his contributions to the theory of relativity, in particular for the discovery that black holes are a logical and robust prediction of the general theory of relativity. As with many other brilliant scientists, there is much more to share about his life and work. His fascination with geometry and his view on complex systems are high on that list.

In the early 50’s, Penrose got to know the work of the famous Dutch artist Maurits Cornelis Escher. Penrose, fascinated by the beauty of geometry, became familiar with Escher’s work during the International Congress of Mathematicians in 1954. The two exchanged multiple letters, sharing their interest in tessellations. For ages, mathematicians had tried to solve the following simple-sounding puzzle: form a tessellation that never repeats, horizontally or vertically, with the minimum number of distinct tiles.

In 1962, Penrose visited Escher at his house in Baarn, the Netherlands. Penrose gave Escher a wooden puzzle consisting of a series of identical geometric shapes. The shapes fitted together in many ways, but there was only one way in which they could be combined to create a tessellation containing all the puzzle pieces. Penrose challenged Escher to solve the puzzle, and after several weeks Escher sent him a letter outlining the solution. Escher was astonished; he couldn’t find any repetitive patterns in the puzzle. It seemed Penrose was on the right track to solve the famous puzzle, which he eventually would.

Penrose continued trying to devise a tessellation that would never repeat itself horizontally or vertically. Many peers believed it to be impossible; in 1958, Roger and his father Lionel even wrote an article in the New Scientist to encourage people to puzzle along. In 1974, 16 years after the article and 12 years after the first visit to Escher, Penrose invented his famous aperiodic tilings, consisting of only 2 basic shapes, a kite (yellow) and a dart (red). Together, the two shapes cover the plane without ever repeating. Even more fascinating, the ratio of the number of kites to the number of darts converges to the golden ratio.
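To make the golden-ratio claim a bit more tangible, here is a minimal Python sketch. It is not taken from Penrose's work directly; it assumes the commonly cited "deflation" rule for kite-and-dart tilings, in which every kite is subdivided into two kites and one dart, and every dart into one kite and one dart (counting two half tiles as one). The function name `kite_dart_ratio` is purely illustrative.

```python
# Sketch: count kites and darts under repeated deflation.
# Assumed substitution rule (whole-tile counts):
#   kite -> 2 kites + 1 dart
#   dart -> 1 kite  + 1 dart

GOLDEN_RATIO = (1 + 5 ** 0.5) / 2


def kite_dart_ratio(steps: int) -> float:
    """Return kites / darts after `steps` deflations, starting from one kite."""
    if steps < 1:
        raise ValueError("need at least one deflation step (no darts before that)")
    kites, darts = 1, 0
    for _ in range(steps):
        kites, darts = 2 * kites + darts, kites + darts
    return kites / darts


if __name__ == "__main__":
    for n in (1, 2, 5, 10, 20):
        ratio = kite_dart_ratio(n)
        print(f"after {n:2d} steps: ratio = {ratio:.10f}, "
              f"gap to golden ratio = {abs(ratio - GOLDEN_RATIO):.2e}")
```

Under this rule the counts are consecutive Fibonacci numbers, which is why the ratio settles on the golden ratio so quickly.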

The Emperor’s New Mind

If you survived this article up to here, I understand if you are wondering: when are we going to talk about explainable AI? Hold on, you will see the logic very soon.

The story above illustrates the beauty and simplicity hidden in complex problems, the art of geometry, and the creativity of mankind. It’s quite the opposite of the world of brute-force, GPU-trained models with millions or billions of small rules. Models are often accurate at finding complex patterns, but they do so through a very large and unexplainable set of rules. We, as humans, are often more creative in finding explainable and direct patterns that others can understand.

Consciousness can’t be modelled by complex systems

Penrose’s belief in the creativity of people led not only to the Penrose tilings, but also to one of his most famous books, published in the late 80’s: “The Emperor’s New Mind: Concerning Computers, Minds and The Laws of Physics”. In his book, Penrose argues that consciousness is non-algorithmic. Therefore, it cannot be modelled by complex systems. In other words: the stuff we would nowadays probably call Artificial Intelligence cannot be conscious. Hence, it wouldn’t be Artificial Intelligence, but Artificial Cleverness.

His claim drew quite some criticism from physicists, computer scientists, and psychologists who did not agree with him and his theory. It can be seen as a sign of the battle between unlimited faith in the complexity of AI on the one hand, and faith in us humans, who are in the end still the developers of these models and algorithms, on the other. Penrose believes we represent the consciousness of systems, not the systems themselves. According to him, those systems can sometimes still be pretty stupid, even though they contain an extensive network of small rules.

Algorithms don’t understand

Penrose illustrates his theory with a simple example: he shows how several algorithms try to solve a simple chess position. The position is obviously a draw, and as humans we would easily accept the draw and start a new game. Most algorithms, on the contrary, keep struggling and trying until the moment they actually lose. It shows the weakness of AI: systems don’t understand the game. They only follow a set of rules from the world they were trained on. They don’t understand they are stuck. They don’t understand at all, of course.

For the avid Netflix binge watchers: sounds familiar, right, a computer making very “illogical” decisions in a game? Most of us probably watched AlphaGo on Netflix. The documentary shows how scientists and engineers of the British company DeepMind built an algorithm to play the game of Go. During most of the documentary, the algorithm makes quite logical moves. That is, moves we as humans find logical. At one critical point, the system decides to make a wildly “strange” move, one that its creators could not explain. Even the smartest scientists can’t explain why their very own system makes its decisions.

So what? Just a game of chess and Go, right? We all lose sometimes and do illogical stuff, right? We’re not perfect either. I totally agree with all of it, and I (also) believe systems are much better at doing many jobs than we humans are. The struggle has nothing to do with the quality of these systems, but with the lack of accountability when they fail and the lack of control we have over them.

Control is gaining traction in the market

To me, the story above illustrates the continuous balance between control and complexity. On the one hand, we believe in the power of complex systems and technology. For some, it almost becomes a religion: technology is going to solve all problems. But for many others, unlimited faith in black-box AI is a scary thought. We expect accountability from (the makers of) AI systems, and demand a certain form of transparency, explainability, and control. We see this demand coming from citizens and regulators, but also from business owners, who weigh the added value of AI against the control they give up.

Below we highlight three different markets in which the balance between control and complexity clearly manifests itself: the government, the financial markets, and business owners in less regulated markets. The mechanics are different, but the conclusion is the same. Control is gaining weight in discussions and regulations, so we need to find ways to gain control in order to keep innovating!

The government: tapping huge potential in a controlled way

In 2021, the Dutch Court of Audit (Algemene Rekenkamer) published a far-reaching report, urging the government to use a strong set of instruments to guarantee the safe use of (self-learning, complex) algorithms, such as Artificial Intelligence and Machine Learning models.

The Court of Audit recommends:

- A uniform set of checks and balances on the quality of algorithms

- Continuous monitoring of algorithms and their decisions

- Transparency for people about algorithms and their decisions, and insight into both

The Court of Audit didn’t find any serious violations yet, but did recognize the pattern of models becoming more complex and a government slowly (well, like governments…) moving towards automated decision making. The Court didn’t rule against more complex systems, but proposed a framework to keep control over the possible negative side effects of these systems, to avoid “dumb chess moves” when playing with more than just the life of a chess piece.

It’s a landmark example of how governments struggle with the balance (or battle?) between control and complexity. On the one hand, we all admit that AI has many possible advantages for society. McKinsey estimated that our economy could grow by an additional 1.4% per year, attributed to AI. On the other hand, the government fears discrimination, exclusion, and mistakes.

The example above does not stand on its own. In 2020, judges ruled that SyRI, a fraud detection system used by the Dutch government, is not transparent and can therefore not be used for automated decisions. The judges even ruled that the fraud detection system breaches the European Convention on Human Rights. The ruling has a wide-ranging impact on all AI initiatives undertaken by the Dutch and European governments.

Fortunately, we also see hopeful signs: at the end of 2020, the municipality of Amsterdam offered insight into its algorithms through its AI register. It’s not the perfect solution, but I believe it is a step in the right direction towards making AI models possible in daily decision making.

Fintech, banks and insurers: who is accountable?

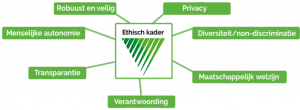

In January 2021, the Dutch association of insurers (Verbond van Verzekeraars) published a framework for ethical AI. It contains the following 7 topics with which Dutch insurers need to comply:

- Human autonomy and control: What is the risk of a complex model, compared to traditional methods? Can we guarantee human autonomy and control?

- Technical robustness and safety: Is the system secure and technically robust? Do we keep control, for example in relation to automated learning? Can we monitor the behavior of the system, and fall back to more controlled methods?

- Privacy and data governance: Do we comply with GDPR rules and regulations, and do we limit the use of personal data as much as possible?

- Transparency: Can we explain systems and predictions? Is there a human in the loop to validate the system?

- Diversity, non-discrimination and fairness: Can non-discrimination and compliance to human rights be guaranteed?

- Social welfare: Can we monitor the social impact of systems, and intervene early enough when needed?

- Accountability: Do we have control over the system? Can we assess the risks? Do we know who is accountable when things go wrong?

To me, when things become really complex, there is no way we can perfectly guarantee the first six topics. There is always a probability that one of them gets violated in a rather exotic scenario. That is why I believe we should weigh the positive and negative effects when using complex AI systems.

The last point, accountability, is maybe the hardest. In a world where models and systems are built on top of each other, with compliance officers, risk analysts, auditors, etc., it seems impossible to determine who is accountable for the decisions of a system. It’s illustrative of the balance between the added value of AI and the control we want to keep, in order to keep people accountable for the actions systems take on their behalf.

In my opinion, a framework is just the first step. We need tools to help us comply with these 7 topics as defined by the association of insurers, and with similar requirements from other associations and regulators in the financial market.

What’s next? The demand for control increases. We need tools and methods!

There are many more examples of regulators demanding control over AI. One of them is the Medical Device Regulation (MDR), which basically demands clinical testing before a medical device containing AI models can be used. The MDR clearly obstructs the introduction of continuous learning systems onto the market, as Leon Doorn points out in his article. And we could go on for hours. What is clear: regulators are starting to demand more control over AI systems.

There is one thing I want to point out, though. It’s not just regulators. For us, data scientists and engineers, it sometimes feels like regulators don’t want to innovate. “They don’t get what we do”, is what I often hear. To be honest, I don’t agree.

The clearest demand for control doesn’t come from regulators who want to bully data scientists; it comes from people and business owners. In the past 10 years, I have worked for quite a few smaller businesses, from traditional family businesses to innovative start-ups. Business owners want control over their processes and are often quite reserved when it comes to AI models running business-critical processes. It’s a long journey of gaining trust in the system, and of keeping control through constant monitoring and explainability of decisions.

For me, this last reason is actually the strongest one to keep exploring, researching, and developing methods and tools for explainable, accountable, transparent, controllable, and technically robust AI systems. It’s up to us, the data community, to come up with a set of instruments to further support the demand for transparent, explainable, and controllable algorithms. The current instruments are far from perfect, from explainability methods (which do not explain algorithms, just patterns in data) to methods to detect drift.
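To give an idea of what such an instrument can look like, below is a minimal Python sketch of one possible drift check: the population stability index (PSI), which compares how a single numeric feature was distributed at training time with how it is distributed now. This is just one of many options and not a method prescribed by any of the frameworks above; the function name, the equal-width binning, and the `eps` smoothing for empty bins are all assumptions made for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    current: Sequence[float],
    bins: int = 10,
    eps: float = 1e-6,
) -> float:
    """PSI between a reference sample and a current sample of one feature.

    Bins are equal-width over the range of the reference sample; values
    outside that range are clamped into the first or last bin. Empty bins
    are floored at `eps` so the logarithm stays defined.
    """
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread; cannot build bins")
    width = (hi - lo) / bins

    def proportions(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        return [max(c / len(values), eps) for c in counts]

    ref_p = proportions(reference)
    cur_p = proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]        # roughly uniform on [0, 1)
    shifted = [0.5 + i / 200 for i in range(100)]   # squeezed into [0.5, 1)
    print("same data:   ", population_stability_index(training, training))
    print("shifted data:", population_stability_index(training, shifted))
```

A value of zero means the two binned distributions are identical, and larger values indicate a larger shift. Which threshold should trigger an alert is a judgment call that depends on the process being monitored, and a score like this only flags that something changed, not why.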