Transparency under the DSA – What, why, and how

The EU Digital Services Act (DSA), fully applicable since 17 February 2024, aims to ensure a safe, fair, and transparent online environment in the EU. Among other things, it requires online platforms to be transparent about how and why they moderate content, target advertising, and operate recommender systems.

Although large services such as Google Search, Instagram, and TikTok face greater scrutiny, smaller online platforms are also subject to strict transparency requirements, often with fewer resources to comply. But what does transparency actually mean? And how can you provide enough of it for user-specific, algorithmically generated feeds such as recommender systems, and for targeted advertising? We start by explaining how the DSA works and then dive deeper into AI transparency solutions.

Who will be affected?

The rules specified in the DSA regarding transparency primarily concern online intermediaries and platforms, such as online marketplaces, social networks, content-sharing platforms, app stores, and online travel and accommodation platforms.

This affects not only the big players (such as Facebook, TikTok, and Instagram), the so-called “very large online platforms” (VLOPs) and “very large online search engines” (VLOSEs), but also smaller platforms (e.g. Vinted, Funda, or Bol.com).

To stimulate innovation and create a level playing field, micro and small companies are mostly exempted from the DSA requirements. This concerns companies with fewer than 50 employees and an annual turnover or annual balance sheet total of less than EUR 10 million. The reasoning behind the exemption runs throughout the DSA: the bigger your digital impact and its possible consequences for people and society, the more comprehensive your DSA obligations.

What rules are in place for transparency?

For online platforms and intermediaries other than VLOPs and VLOSEs, the DSA requirements on transparency can be divided into four categories:

Static transparency requirements oblige organizations to publish contact details for users and authorities and to clearly describe notice-and-action and complaint mechanisms in the applicable terms & conditions. This includes information on algorithmic decision-making, human oversight, and complaint-handling. Similarly, for recommender systems such as feeds and rankings, mostly static transparency requirements apply (more on that below). When several recommender options are available (e.g. ranking by price or by customer satisfaction), a function to switch between them should be available in the same interface as the recommender system itself.
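To make the switching requirement concrete, here is a minimal sketch of how a backend could expose several ranking options behind one function. The `Listing` type, its fields, and the two option names are hypothetical and chosen only for illustration; they do not come from the DSA or from any specific platform.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Listing:
    # Hypothetical listing fields, for illustration only.
    name: str
    price_eur: float
    satisfaction: float  # average customer rating on a 0-5 scale


# Each user-facing ranking option maps to a sort key.
# Lower keys sort first, so satisfaction is negated to put the best-rated first.
RANKING_OPTIONS: dict[str, Callable[[Listing], float]] = {
    "price": lambda item: item.price_eur,
    "satisfaction": lambda item: -item.satisfaction,
}


def rank(listings: list[Listing], option: str) -> list[Listing]:
    """Return the listings ordered by the ranking option the user selected."""
    if option not in RANKING_OPTIONS:
        raise ValueError(f"Unknown ranking option: {option!r}")
    return sorted(listings, key=RANKING_OPTIONS[option])


listings = [
    Listing("Beach hotel", 120.0, 4.1),
    Listing("City hostel", 45.0, 3.6),
    Listing("Mountain lodge", 95.0, 4.8),
]
```

Because every option lives in one registry, the same interface element that shows the results can list the keys of `RANKING_OPTIONS` and pass the user's choice straight to `rank`.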

Yearly transparency requirements oblige organizations to publish an annual report stating the number of orders from authorities to take down content or provide information, the number of notices about illegal content or content that infringes the rights of others, the number of complaints, and aggregated information on content moderation.
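The counts in such a report can usually be derived from a log of moderation events. The sketch below assumes a hypothetical `ModerationEvent` record with a year and a kind; the kind labels mirror the categories named above but are our own naming, not prescribed by the DSA.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationEvent:
    # Hypothetical event record; field names and kind labels are illustrative.
    year: int
    kind: str  # e.g. "authority_order", "notice", "complaint"


def yearly_counts(events: list[ModerationEvent], year: int) -> dict[str, int]:
    """Count the events of each kind that occurred in the given year."""
    return dict(Counter(event.kind for event in events if event.year == year))


events = [
    ModerationEvent(2024, "authority_order"),
    ModerationEvent(2024, "notice"),
    ModerationEvent(2024, "notice"),
    ModerationEvent(2024, "complaint"),
    ModerationEvent(2023, "notice"),
]
report_2024 = yearly_counts(events, 2024)
```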

Reactive transparency requirements demand that organizations provide a statement of reasons when they accept or refuse a notice relating to illegal content. Both the complainant and the user who published the content should receive such a statement. The statement should include information on the measures and tools used in the decision-making, including algorithmic decision-making.

Dynamic, real-time transparency requirements for online targeted advertising require organizations to include meaningful information about the main parameters used to determine that a specific advertisement was shown to that specific user. Platforms that include recommender systems must explain the main parameters used in their recommender systems, and give users the option to modify these parameters.

Recommender system transparency – static or dynamic?

Interestingly, and as mentioned above, mostly static transparency requirements apply to recommender systems such as news and social feeds and rankings based on search queries. Platforms that use such recommender systems should use their static terms & conditions to explain the main parameters for determining which information is shown to the user and the reasoning for the respective importance/ranking of that information.

In our view, only requiring platforms to keep this information static and ‘hidden’ in terms & conditions does not do justice to the transparent and fair online society the DSA aims to promote. Of course, information disclosed to users should not violate the rights of the platform or of the businesses whose products or services are presented through the recommender system. Trade secrets around algorithms should remain protected, and the disclosed information should not enable manipulation of search results. But where those risks are absent, allowing users to clearly understand items such as their search result rankings or news feeds is preferable for achieving fair online business.

Explaining recommender systems in practice

How can recommender algorithms become explainable and transparent?

This is where eXplainable Artificial Intelligence (XAI) comes into play. XAI is a set of processes and methods that allows human users to comprehend and trust the results and output created by machine learning models. In practice, this explainability refers to the ability of platforms to provide clear and understandable explanations to end users about why a particular recommendation, such as a product, news article, or video, was made.

There are several explainability techniques available, most of which are post-hoc explainers (they generate explanations after the model has been trained and has made a prediction). Explanations can also take various forms, such as textual descriptions, visual representations, or even interactive interfaces.

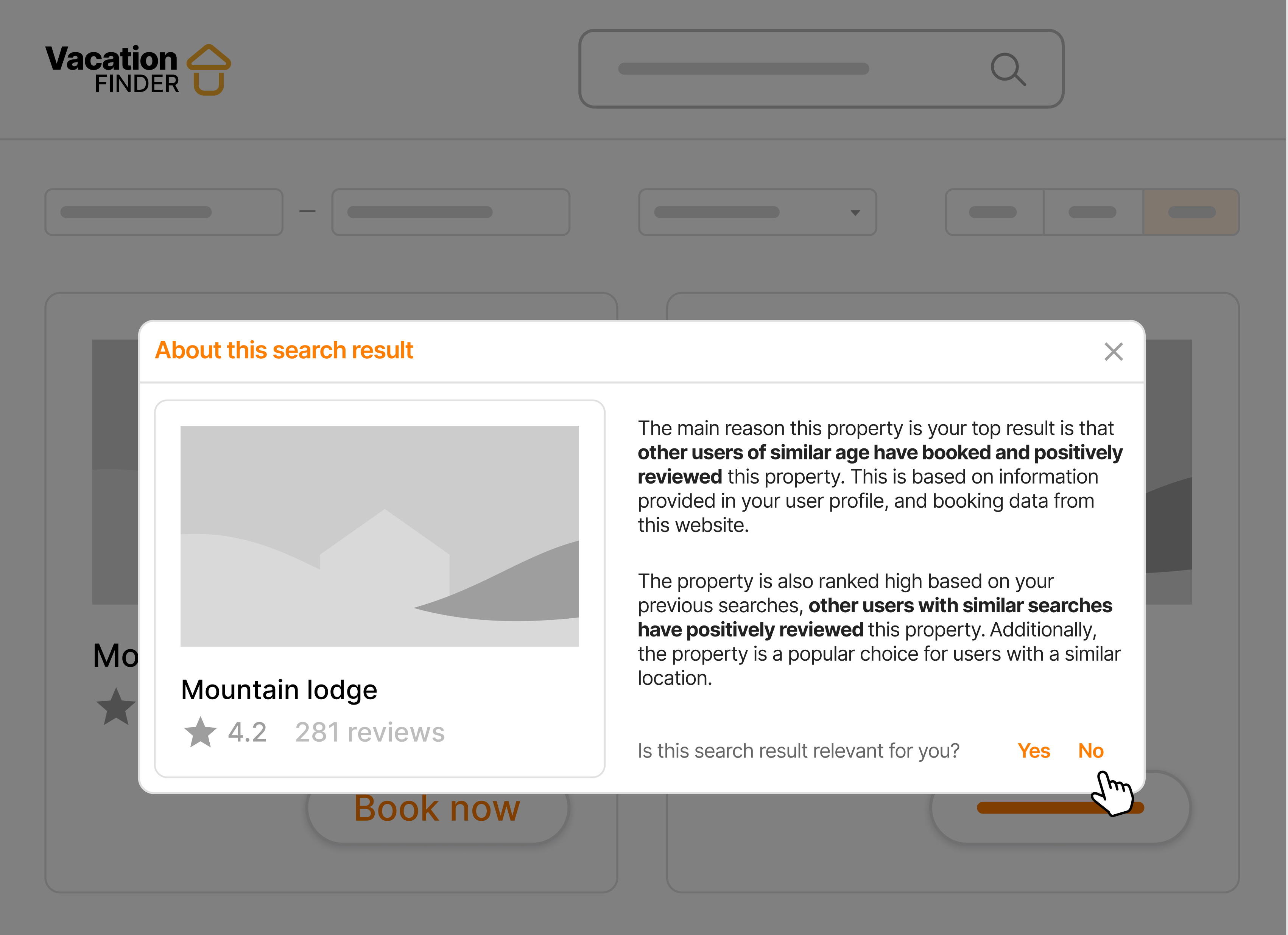

An example of how an explainer can look to the end user is shown below.

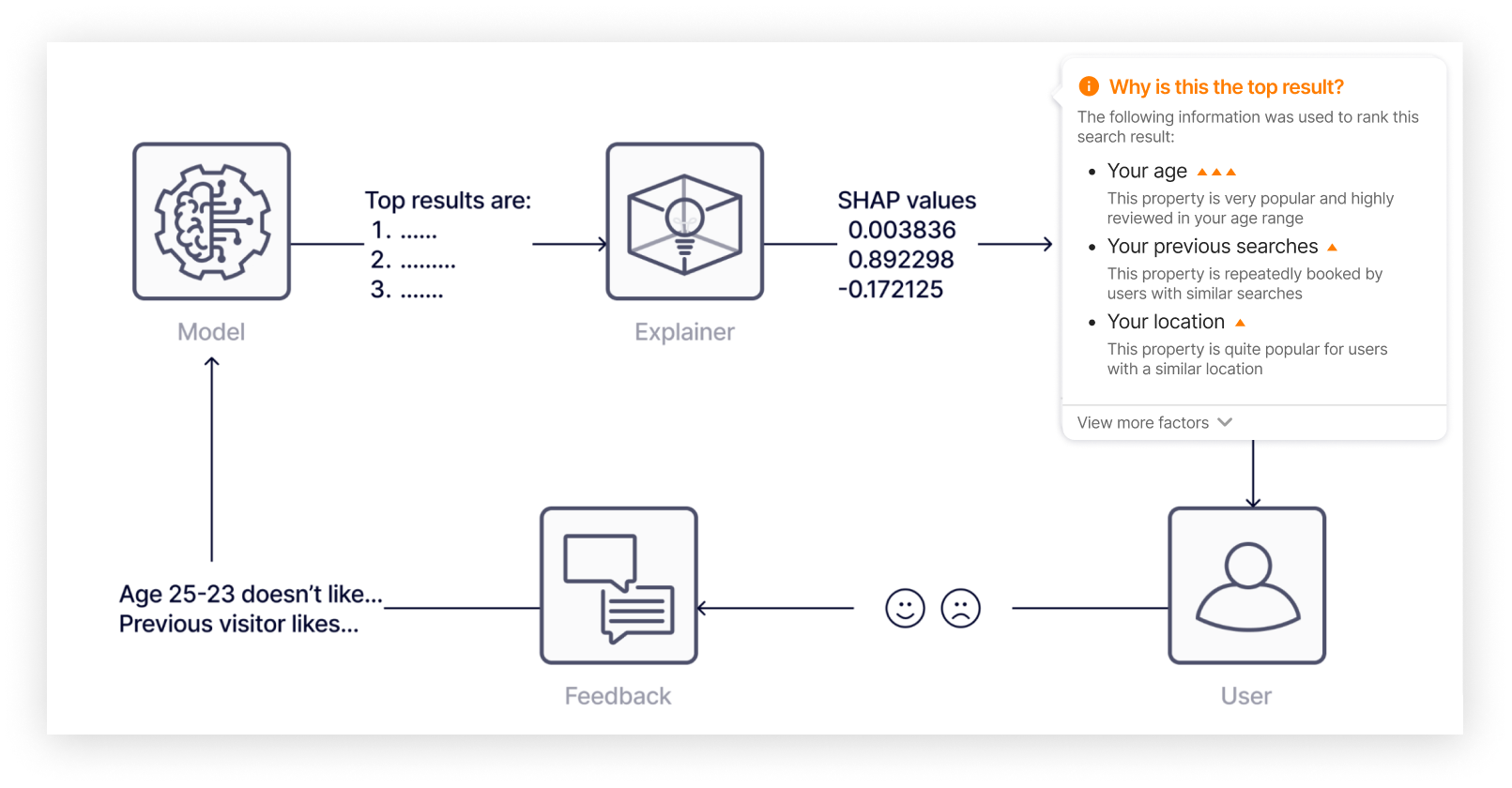

In this mock online vacation booking platform, offerings are tailored to end users based on a recommender system. In order to meaningfully inform the user, for every given listing, explainability is used to share the most important variables in the ranking algorithm.

In the background, the explainability method determines what type of information has the largest weight, and returns that weight as output. That output is translated to a UI element, as shared in the visual above. As such, even if the platform chooses to only be transparent about the workings of the recommender systems through its static terms & conditions, the platform can still use the explainability to prove it complies with these terms & conditions. This is not only relevant towards the users who are presented with the recommender system, but also towards the businesses whose products or services are presented through the recommender system. Under the already applicable Platform to Business Regulation, platforms are required to describe, and adhere to, the ranking parameters as included in their terms & conditions towards these businesses.
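As a rough illustration of that translation step, the sketch below assumes the simplest possible case: a linear ranking score in which each feature's contribution is its weight times its value. The feature names, weights, and wording of the UI text are all hypothetical; a real recommender would need a proper explainer to produce the contributions.

```python
def contributions(weights: dict[str, float], features: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution to a linear ranking score (weight * value)."""
    return {name: weights[name] * features[name] for name in weights}


def top_factors(contribs: dict[str, float], k: int = 2) -> list[tuple[str, float]]:
    """The k features with the largest absolute contribution, as shares of the total."""
    total = sum(abs(value) for value in contribs.values())
    if total == 0:
        return []
    ranked = sorted(contribs.items(), key=lambda pair: abs(pair[1]), reverse=True)
    return [(name, abs(value) / total) for name, value in ranked[:k]]


def to_ui_text(factors: list[tuple[str, float]]) -> str:
    """Turn the top factors into a short sentence that a UI element can display."""
    parts = ", ".join(f"{name} ({share:.0%})" for name, share in factors)
    return f"Recommended mainly because of: {parts}"


# Hypothetical weights and one listing's feature values.
weights = {"date match": 0.5, "price fit": 0.25, "review score": 0.25}
listing = {"date match": 1.0, "price fit": 0.5, "review score": 1.0}

message = to_ui_text(top_factors(contributions(weights, listing)))
```

Showing only the top factors as rounded shares, rather than raw weights, is one way to inform users without exposing the full scoring formula.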

By incorporating XAI methods, platforms can bridge the gap between complex algorithmic processes and user comprehension, helping users make informed decisions and maintain trust in the platform’s recommendations while ensuring compliance with regulations.

How are explainers developed?

To understand the process of building explainers, it’s important to first differentiate between “local” and “global” explainability methods. Local explainers focus on providing insights into individual predictions, helping users understand why a specific outcome was produced. Global explainers, on the other hand, offer a broader perspective, shedding light on the overall behavior and trends of the model. This versatility ensures that explanations are tailored to different needs.
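The difference can be sketched with a toy linear scoring model, where a local explanation is the per-feature contribution for one listing and a global explanation is the mean absolute contribution over many listings. The weights, feature names, and data are hypothetical, and real models typically need dedicated explainers rather than this shortcut.

```python
def local_explanation(weights: dict[str, float], item: dict[str, float]) -> dict[str, float]:
    """Contribution of each feature to the score of ONE item."""
    return {name: weights[name] * item[name] for name in weights}


def global_explanation(weights: dict[str, float], items: list[dict[str, float]]) -> dict[str, float]:
    """Mean absolute contribution of each feature across ALL items."""
    count = len(items)
    return {
        name: sum(abs(weights[name] * item[name]) for item in items) / count
        for name in weights
    }


# Hypothetical model weights and four listings.
weights = {"price_fit": 0.5, "review_score": 0.25}
items = [
    {"price_fit": 1.0, "review_score": 0.0},
    {"price_fit": 0.0, "review_score": 1.0},
    {"price_fit": 1.0, "review_score": 1.0},
    {"price_fit": 0.0, "review_score": 0.0},
]

local_view = local_explanation(weights, items[1])
global_view = global_explanation(weights, items)
```

In this toy data, `price_fit` matters most overall, yet for the second listing the score is driven entirely by `review_score`, which is exactly the kind of case where a local explanation tells the user something the global view cannot.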

In general, we take the following steps to develop an explainer method:

- Define the Objective and design the Explainer Output: Identify the specific explanations that would be valuable for end users without disclosing any information that might be seen as a trade secret or that could be used to manipulate search results. For instance, is the goal to clarify why a particular piece of content received a higher or lower ranking? Or is it to identify the requirements for testing the recommender system, so that you can provide proof to the business owners whose products or services are ranked?

- Choose an Explainability Method: Evaluate various explainability methods and frameworks such as SHAP, LIME, Anchors, Partial Dependence Plots (PDP), MACE, and many others. Depending on the objective, opt for a method that suits your needs. In the ranking example, we might choose SHAP to determine the most significant attributes influencing content ranking.

- Train or Configure the Explainability Method: While some methods require training on (a subset of) the same data as the AI model, others, like integrated gradients, are configurable and don’t require training. Deeploy supports data scientists with templates on a wide range of explainability methods. This way, training or configuring an explainer is made easier.

- Deploy and Integrate the Explainer: Deploy the explainer as a micro-service, and integrate it with the backend of your application. This can be done using software like Deeploy, which supports easy deployment of many explainer methods. After deployment, use the explainer API endpoint in the application backend to request explainer output. Consider the orchestration strategy: whether to calculate explanations in real time or in advance. Additionally, decide whether to provide explanations for every ranking or only upon user request, bearing in mind computational costs and application performance.
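The steps above can be sketched end to end on a toy model. The example computes brute-force Shapley values against a fixed baseline, which is the idea SHAP builds on; it is not the SHAP library itself, and it only scales to a handful of features. The model, the feature names, the baseline, and the `explain_on_request` helper are all assumptions made for illustration, and the in-process cache merely stands in for whatever orchestration a real deployment would use.

```python
from functools import lru_cache
from itertools import combinations
from math import factorial

FEATURES = ("price_fit", "review_score", "date_match")  # hypothetical names
BASELINE = (0.0, 0.0, 0.0)  # assumed reference listing to compare against


def score(x: tuple[float, ...]) -> float:
    """Toy ranking model with an interaction term (illustrative only)."""
    price_fit, review_score, date_match = x
    return 2.0 * price_fit + 1.0 * review_score + 4.0 * price_fit * date_match


def shapley_values(x: tuple[float, ...], baseline: tuple[float, ...] = BASELINE) -> tuple[float, ...]:
    """Exact Shapley values: features outside a coalition take the baseline value."""
    n = len(x)

    def value(coalition: frozenset) -> float:
        mixed = tuple(x[i] if i in coalition else baseline[i] for i in range(n))
        return score(mixed)

    result = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Weight of a coalition of this size in the Shapley formula.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = frozenset(subset)
                phi += weight * (value(members | {i}) - value(members))
        result.append(phi)
    return tuple(result)


@lru_cache(maxsize=1024)
def explain_on_request(x: tuple[float, ...]) -> tuple[tuple[str, float], ...]:
    """Compute an explanation only when asked, and cache it for repeat requests."""
    return tuple(zip(FEATURES, shapley_values(x)))
```

Calling `dict(explain_on_request((1.0, 1.0, 1.0)))` attributes the interaction between `price_fit` and `date_match` equally to both features, and the attributions sum to the difference between the listing's score and the baseline score. Computing on request with a cache is only one orchestration choice; precomputing explanations in a batch job trades freshness for lower latency.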

Implementing explainability methods helps to comply with the DSA and take responsibility with respect to your role in the fair online economy and society. Furthermore, by enabling a feedback loop, users can actively steer and improve ranking algorithms, increasing the ROI of such models, and correcting unwanted behaviour. It’s crucial to have a human in the loop and monitor the feedback loop to decide on the best possible way to incorporate feedback in your machine learning systems.

What’s next?

In this article we briefly touched upon the explainability and transparency of AI models. There are already good examples in the market of companies applying explainability to ensure safe use of their AI models.

We believe explainability and transparency are the only way forward in AI. Ethical considerations have long underscored this need and, now, regulations like the DSA are following and calling for those same standards to be applied.

Curious to learn more about how this can be applied to your organization? Feel free to contact us!

De Roos Advocaten

De Roos Advocaten is one of the leading law firms in the Netherlands, working together with leading platforms to guide them through new regulations, like the DSA.

Deeploy

Deeploy is a platform for data teams to ensure transparency of AI models, helping to comply with regulations like the DSA and the upcoming EU AI Act.