Tackle AI Compliance with Deeploy

The growing adoption of AI raises concerns about its ethical usage, especially in high-risk applications like credit scoring. To address this, regulations around AI, like the comprehensive EU AI Act, are being implemented.

Compliance with these rules is crucial, and organizations should address it proactively rather than reactively. This means looking at AI implementation through the lens of responsibility and human involvement, ensuring AI systems benefit, rather than harm, society and individuals.

Regulation, Responsibility and Compliance

As AI systems become more prevalent in organizations, societal concern also grows. It’s natural for citizens to worry about how AI systems might use their information, especially in high-risk use cases such as machine learning models for credit scoring or fraud detection.

In response to these concerns, various nations have started drafting guidelines and regulations to safeguard fundamental rights while still allowing for AI innovation. Notably, the European Union has put forward the EU AI Act, a set of regulations that categorizes AI systems into risk tiers and imposes requirements accordingly, to limit the potential impact on individuals and society.

Compliance with such regulations will be imperative and, as they are not immutable, companies and institutions must take a proactive approach and think ahead of them. Instead of merely scrambling to comply, organizations should take the initiative and make it a principle to deploy their AI systems responsibly.

But what does this mean in practice?

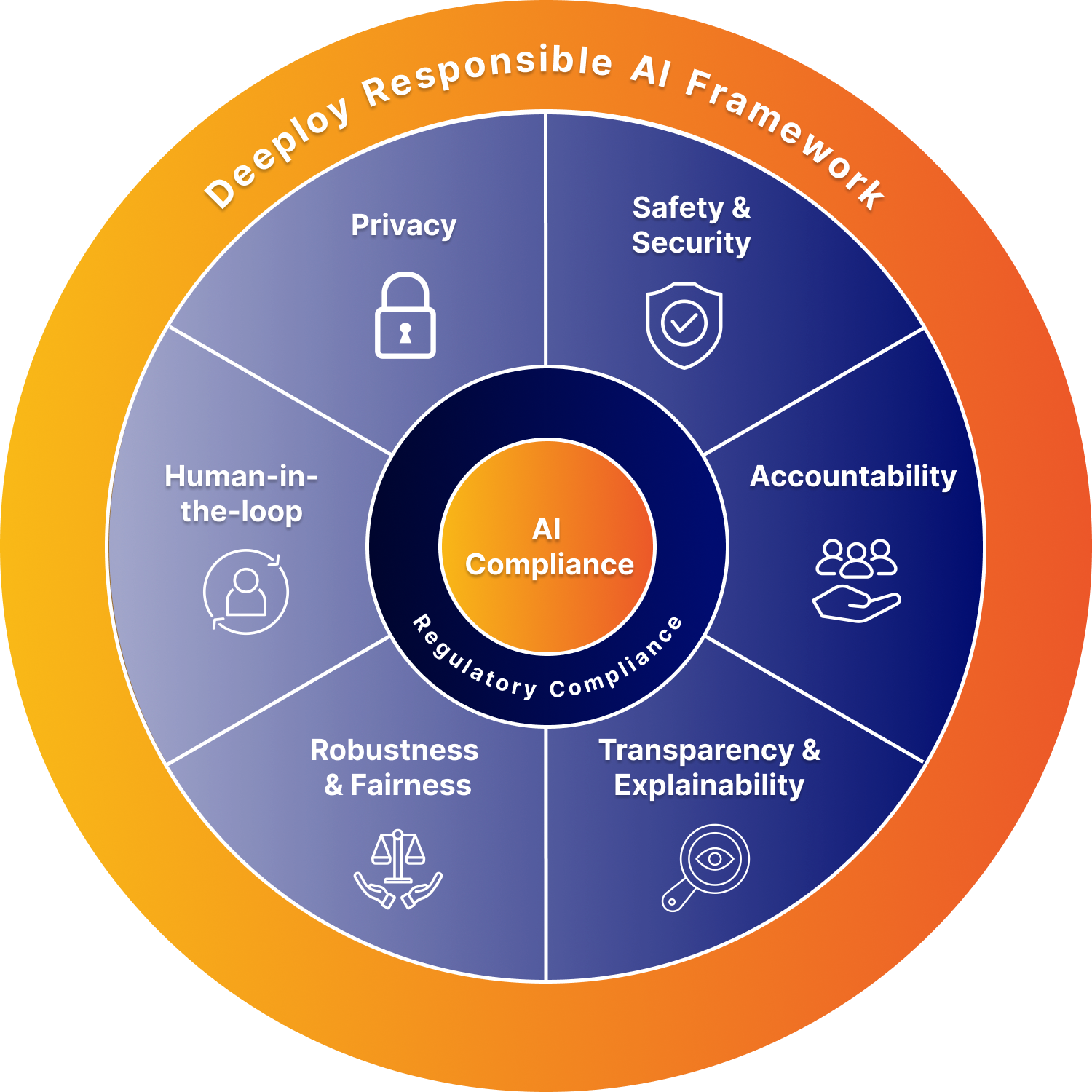

A framework for deploying Responsible AI

Implementing responsible AI can be viewed through the lens of a framework of six pillars that aims to ensure machine learning models are deployed and monitored responsibly.

Following the pillars of this framework lays a solid foundation for companies and institutions to ensure their AI efforts comply with current and future regulations.

Privacy

Machine learning models are built on data and, especially in high-risk use cases, on personal data. As such, it is vital to ensure privacy while processing and storing this data. Besides implementing access control and data encryption, organizations must also make sure model predictions, input data, and features are stored securely.

Another way to ensure data privacy is to deploy AI systems in the organization’s own cloud or on its own premises, so that the organization stays in control of the data it is responsible for.
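As one illustration of storing predictions without raw identifiers, the sketch below pseudonymizes a customer ID with a keyed hash (HMAC-SHA256 from Python's standard library) before the record is logged. The function names, the record layout and the in-process key are hypothetical; a real system would fetch the key from a key-management service and still encrypt stored features and predictions at rest, since pseudonymization on its own is not encryption.

```python
import hashlib
import hmac
import secrets

# Hypothetical key generated in-process; in practice it would come from a key-management service
PSEUDONYM_KEY = secrets.token_bytes(32)


def pseudonymize(identifier: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Replace a personal identifier with a keyed hash (HMAC-SHA256, hex-encoded)."""
    return hmac.new(key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()


def store_prediction(log: list, customer_id: str, features: dict, prediction: float) -> None:
    """Append a prediction record that contains no raw customer identifier."""
    log.append({
        "customer": pseudonymize(customer_id),  # same customer -> same pseudonym, so records stay linkable
        "features": features,
        "prediction": prediction,
    })


prediction_log = []
store_prediction(prediction_log, "NL-12345", {"income": 4.0, "debt_ratio": 0.4}, 0.82)
print(prediction_log[0]["customer"])
```

Because the hash is keyed, someone who obtains the log but not the key cannot simply hash candidate IDs to re-identify customers.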

Safety and Security

Similarly, when dealing with sensitive data, it is also crucial to have a good safety and security system in place. This starts with the organization’s own procedures and policies but also extends to the operationalization of machine learning models.

Organizations should perform comprehensive model monitoring to identify and address potential safety risks. Features like drift monitoring and alerts, for example, help organizations stay on top of any deterioration in their models and correct it quickly, before it causes adverse effects.
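To make drift monitoring more concrete, here is a minimal sketch of one common drift metric, the Population Stability Index (PSI). It compares a reference sample of a feature (for instance, from the training data) with recent production inputs and raises an alert above a threshold. The equal-width binning, the 10 bins and the 0.2 alert threshold are illustrative assumptions, and this is not how Deeploy itself computes drift.

```python
import math
from typing import List, Sequence


def psi(reference: Sequence[float], current: Sequence[float], bins: int = 10) -> float:
    """Population Stability Index of one numeric feature (equal-width bins)."""
    lo = min(min(reference), min(current))
    hi = max(max(reference), max(current))
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 if every value is identical

    def proportions(values: Sequence[float]) -> List[float]:
        counts = [0] * bins
        for v in values:
            idx = min(int((v - lo) / width), bins - 1)  # the maximum lands in the last bin
            counts[idx] += 1
        # A tiny floor keeps the logarithm defined for empty bins
        return [max(c / len(values), 1e-6) for c in counts]

    ref_p, cur_p = proportions(reference), proportions(current)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref_p, cur_p))


def check_drift(reference, current, threshold: float = 0.2) -> float:
    """Compute PSI and print an alert when it exceeds the (assumed) threshold."""
    score = psi(reference, current)
    if score > threshold:
        print(f"ALERT: feature drift detected (PSI={score:.3f} > {threshold})")
    return score


reference = [i / 100 for i in range(100)]          # training-time values spread over [0, 1)
production = [0.5 + i / 200 for i in range(100)]   # live values shifted to [0.5, 1)
check_drift(reference, reference)    # identical distributions: PSI is 0, no alert
check_drift(reference, production)   # shifted distribution: alert is printed
```

In a production setting the same check would typically run per feature on a schedule, with the alert routed to a messaging or incident tool instead of `print`.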

Accountability

An AI system and its stakeholders should always be accountable for the predictions and decisions the system produces.

In practice, this translates into implementing features like model tracking, audit trails, and documentation capabilities, enabling users to understand and explain the decision-making process behind the AI system. Every decision, explanation or update should be traceable and reproducible.
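One way to make every decision traceable and tamper-evident is a hash-chained audit trail: each entry stores the SHA-256 hash of the previous one, so editing, reordering or deleting past entries breaks the chain. The sketch below assumes JSON-serializable payloads; the `AuditTrail` class and its event names are hypothetical and not Deeploy's implementation.

```python
import hashlib
import json
from datetime import datetime, timezone


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same entry always hashes identically
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


class AuditTrail:
    """Hypothetical append-only log; each entry links to the hash of the previous one."""

    GENESIS = "0" * 64  # placeholder "previous hash" for the first entry

    def __init__(self):
        self.entries = []

    def record(self, event_type: str, payload: dict) -> dict:
        entry = {
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "event_type": event_type,  # e.g. "prediction", "explanation", "model_update"
            "payload": payload,
            "prev_hash": self.entries[-1]["hash"] if self.entries else self.GENESIS,
        }
        entry["hash"] = _digest(entry)
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Check every hash and back-link. Edited, reordered or removed earlier
        entries break the chain; detecting removal of the newest entries would
        additionally require storing the latest hash elsewhere."""
        prev_hash = self.GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or entry["hash"] != _digest(body):
                return False
            prev_hash = entry["hash"]
        return True


trail = AuditTrail()
trail.record("prediction", {"model_version": "v3", "input_id": 17, "score": 0.82})
trail.record("explanation", {"input_id": 17, "top_feature": "late_payments"})
print(trail.verify())
```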

Transparency & Explainability

In order to keep AI systems fair and transparent, it is crucial that decisions and predictions these systems generate are explainable and understandable.

Which general trends does the model capture? Which feature contributed most to a given prediction or decision? How can we visually represent the dynamics of a model or a prediction?

Moreover, explainability is not only about the explainer; it is also about the person receiving the explanation. It’s important to tailor the explanation to its end users, making the model interpretable to the relevant stakeholders and allowing them to make informed decisions.
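The questions above distinguish local explanations (why this prediction?) from global ones (what does the model do overall?). For a linear model, a prediction decomposes exactly into per-feature contributions `w_i * (x_i - baseline_i)`; with the feature mean as the baseline and independent features, these coincide with SHAP values. The model, weights and feature names below are invented for illustration; non-linear models would typically rely on model-agnostic explainers such as SHAP or LIME instead.

```python
from statistics import mean

# Hypothetical linear credit-scoring model: score = BIAS + sum(weight * feature)
WEIGHTS = {"income": 0.4, "debt_ratio": -1.5, "late_payments": -0.8}
BIAS = 0.5


def predict(x: dict) -> float:
    return BIAS + sum(WEIGHTS[name] * x[name] for name in WEIGHTS)


def local_contributions(x: dict, baseline: dict) -> dict:
    """Per-feature contribution to one prediction, relative to a baseline input."""
    return {name: WEIGHTS[name] * (x[name] - baseline[name]) for name in WEIGHTS}


def global_importance(dataset: list, baseline: dict) -> dict:
    """Mean absolute contribution per feature over a dataset (a simple global view)."""
    return {
        name: mean(abs(WEIGHTS[name] * (row[name] - baseline[name])) for row in dataset)
        for name in WEIGHTS
    }


dataset = [
    {"income": 3.0, "debt_ratio": 0.2, "late_payments": 0},
    {"income": 5.0, "debt_ratio": 0.6, "late_payments": 2},
    {"income": 4.0, "debt_ratio": 0.4, "late_payments": 1},
]
# Baseline = the average applicant, so contributions explain deviations from the mean score
baseline = {name: mean(row[name] for row in dataset) for name in WEIGHTS}

applicant = dataset[1]
contributions = local_contributions(applicant, baseline)
top_feature = max(contributions, key=lambda name: abs(contributions[name]))
print(top_feature)  # late_payments
print(global_importance(dataset, baseline))
```

Because the contributions sum to `predict(applicant) - predict(baseline)`, they can be presented to an end user as "points added or subtracted" relative to an average applicant, one way of tailoring the explanation to its audience.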

Robustness & Fairness

Robustness and fairness are essential, as both aim to mitigate the inevitable flaws of real-world data.

While fairness ensures that a model’s predictions do not unethically discriminate against individuals or groups, model robustness refers to the ability of a machine learning model to maintain its performance and accuracy even when faced with unexpected or adversarial inputs. AI systems should be resilient to errors, faults and inconsistencies in the system or in the environment in which they operate, as well as to attempts by unauthorised third parties to alter their use or performance by exploiting system vulnerabilities.
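Fairness can be quantified in several ways. Below is a minimal sketch of one common metric, the demographic parity difference: the gap between groups in the rate of positive predictions (such as approved loans), where a value near 0 means groups receive positive outcomes at similar rates. Which metric and tolerance are appropriate depends on the use case; the decisions and group labels here are hypothetical.

```python
from collections import defaultdict
from typing import Dict, Hashable, Sequence


def positive_rates(predictions: Sequence[int], groups: Sequence[Hashable]) -> Dict[Hashable, float]:
    """Share of positive (1) predictions within each group."""
    totals: Dict[Hashable, int] = defaultdict(int)
    positives: Dict[Hashable, int] = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups) -> float:
    """Largest gap in positive-prediction rate between any two groups (0 = parity)."""
    rates = positive_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical loan decisions (1 = approved) for applicants in groups "A" and "B"
predictions = [1, 1, 0, 1, 0, 0, 0, 1]
groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
print(positive_rates(predictions, groups))                 # {'A': 0.75, 'B': 0.25}
print(demographic_parity_difference(predictions, groups))  # 0.5
```

A gap this large would warrant investigation, although demographic parity alone does not establish whether the difference is unjustified.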

Human-in-the-loop

Human-In-The-Loop (HITL) is defined as the capability for human intervention throughout the whole ML lifecycle. In practice, this means that humans are not only involved with setting up the systems and training and testing the models but are also capable of giving direct feedback to the models on their predictions.

By keeping a human in the loop, organizations can safeguard against errors, biases, or unforeseen circumstances that automated systems may encounter. Moreover, a human in the loop also provides a vital layer of accountability, responsibility, and judgment that machines may lack.
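A common way to put this into practice is confidence-based routing: predictions the model is confident about are accepted automatically, while the rest are queued for a domain expert, whose verdict is stored as feedback for later evaluation or retraining. The `ReviewQueue` class and its 0.8 confidence threshold are hypothetical; this sketches the pattern, not Deeploy's feedback API.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple


@dataclass
class ReviewQueue:
    """Hypothetical confidence-based router between a model and human reviewers."""
    threshold: float = 0.8  # assumed confidence cut-off for automatic acceptance
    pending: List[Tuple[int, str, float]] = field(default_factory=list)
    feedback: List[Tuple[int, str, str]] = field(default_factory=list)  # (input_id, model_label, human_label)

    def route(self, input_id: int, label: str, confidence: float) -> Optional[str]:
        """Accept confident predictions; queue the rest for a human and return None."""
        if confidence >= self.threshold:
            return label
        self.pending.append((input_id, label, confidence))
        return None

    def review(self, input_id: int, human_label: str) -> str:
        """Record a domain expert's verdict on a queued prediction."""
        for i, (pid, label, _) in enumerate(self.pending):
            if pid == input_id:
                del self.pending[i]
                self.feedback.append((input_id, label, human_label))
                return human_label
        raise KeyError(f"no pending prediction for input {input_id}")


queue = ReviewQueue()
queue.route(1, "approve", 0.95)  # returned directly: above the threshold
queue.route(2, "reject", 0.55)   # None: waits for a human
queue.review(2, "approve")       # the expert overrules the model
print(queue.feedback)            # [(2, 'reject', 'approve')]
```

The collected `(model_label, human_label)` pairs show where the model and experts disagree, which is exactly the signal needed to catch errors and biases early.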

How Deeploy ensures AI Compliance

Deeploy tackles AI compliance by providing a robust deployment platform based around the responsible AI pillars. This ensures that the deployed models stay manageable, explainable and accountable.

Our priority is to make it easy for organizations to productionize their ML models in a responsible and safe environment, allowing stakeholders to focus on generating innovative insights and solutions from their machine learning models.

Explore our responsible AI features

Monitor models, predictions and interactions

- Constantly monitor model drift, bias, and performance
- Define custom alerts for the metrics that matter to you
- Easily discover the root cause of model degradation

Explain model decisions/predictions

- Support deployments with local and global explainers
- Add custom explainers tailored to your end users
- Trace back explanations of historical predictions

Reproduce and trace back decisions & explanations

- Trace and reproduce every change, prediction, explanation, and deployment
- Ensure clear ownership of Deployments and Workspaces

Improve model performance with human feedback

- Facilitate interactions between AI and humans
- Enable domain experts to evaluate predictions
- Receive real-time feedback on models and predictions

The right path to AI Compliance

AI implementation and compliance go hand in hand. To benefit from the former, organizations must make sure they comply with the regulations in place. However, as regulations can change over time, it is important to go beyond meeting current requirements. Instead, organizations should take a proactive approach and always look at AI through the lens of responsibility.

This encompasses many aspects of the MLOps lifecycle, but it is especially vital when putting models into production. Whether the deployment is done in-house or with an external provider, it is crucial to ensure the main pillars of responsible AI are respected and followed.