Nick | 4 May 2021

Originally posted on Medium

On the journey to hold Machine Learning (ML) models accountable for their predictions, Deeploy is working to make visualisations of predictions more accessible. Popular visualisation tools like SHAP increasingly provide these insights, but they have not yet been ported to work well with all front-end frameworks.

Over the years, we have experienced the importance of human involvement in ML systems. This means that every developer and every end user should be able to understand how an ML system arrived at a decision. That understanding makes it possible to detect mistakes or signs of unfairness that originate in models or training data.

This article focuses on how to integrate the SHAP additive-force and additive-force-array visualisers in Angular, using an open source contribution by Deeploy.

SHAP (SHapley Additive exPlanations) is one of the most influential explainability algorithms available to developers today. SHAP is a game-theoretic approach to explaining the output of any machine learning model. It builds local explanations on the classic Shapley values from game theory (the original paper dates from 1953!). We expect that one of the reasons SHAP gained so much attention is that the original Python library includes rich ways to visualise the explanations. Of course, it would be a pity if those nice graphs stayed hidden in data science notebooks. That is why we made this open source NPM library, which allows Angular developers to integrate SHAP visualisations in their applications.
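To make the game-theoretic idea concrete, here is a minimal sketch of how exact Shapley values can be computed for a tiny model. It averages each feature's marginal contribution over every order in which features can be added. The toy model and all names (`shapleyValues`, `toyModel`) are hypothetical and chosen for illustration; practical SHAP implementations rely on approximations or model-specific algorithms, because brute-force enumeration grows factorially with the number of features.

```typescript
// A coalition is a boolean mask: present[i] is true when feature i is "known".
type ValueFn = (present: boolean[]) => number;

// Enumerate every ordering of the given feature indices.
function permutations(items: number[]): number[][] {
  if (items.length <= 1) {
    return [items.slice()];
  }
  const result: number[][] = [];
  for (let i = 0; i < items.length; i++) {
    const rest = items.slice(0, i).concat(items.slice(i + 1));
    for (const tail of permutations(rest)) {
      result.push([items[i]].concat(tail));
    }
  }
  return result;
}

// Exact Shapley values: average each feature's marginal contribution
// over all orderings in which features can be added to the coalition.
function shapleyValues(featureCount: number, value: ValueFn): number[] {
  const indices: number[] = [];
  const totals: number[] = [];
  for (let i = 0; i < featureCount; i++) {
    indices.push(i);
    totals.push(0);
  }
  const orderings = permutations(indices);
  for (const ordering of orderings) {
    const present: boolean[] = indices.map(() => false);
    let previous = value(present);
    for (const feature of ordering) {
      present[feature] = true;
      const current = value(present);
      totals[feature] += current - previous;
      previous = current;
    }
  }
  return totals.map((total) => total / orderings.length);
}

// Hypothetical toy model: base output 10, feature 0 adds 2, feature 1 adds 4,
// features 0 and 1 together add a further 6, and feature 2 has no influence.
const toyModel: ValueFn = (present) =>
  10 + (present[0] ? 2 : 0) + (present[1] ? 4 : 0) + (present[0] && present[1] ? 6 : 0);

const toyShapley = shapleyValues(3, toyModel);
console.log(toyShapley); // Shapley values 5, 7 and 0
```

The interaction bonus of 6 is split evenly between features 0 and 1, and the three values sum to 12, which is exactly the difference between the model output with all features present (22) and the base output (10). This additive property is what the force plots visualise.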

To get started, install the NPM package in your Angular project:

npm install shap-explainers

This package currently contains two components: shap-additive-force and shap-additive-force-array. By importing the ShapExplainersModule, you will be able to use these components in your Angular application:

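    import { NgModule } from '@angular/core';
    import { ShapExplainersModule } from 'shap-explainers';

    // AppModule is a placeholder name for your own module class
    @NgModule({ imports: [ShapExplainersModule] })
    export class AppModule {}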

In order to add the component to the frontend, add one of the following component selectors:

<shap-additive-force></shap-additive-force>

<shap-additive-force-array></shap-additive-force-array>

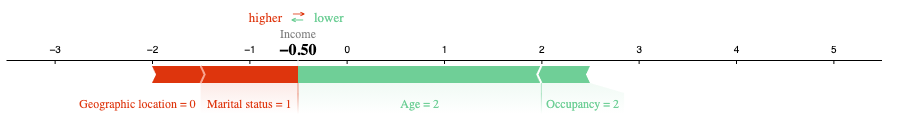

These components have their own initial placeholder data, so it is not required to input any data to display the visualizers:

Of course, the components become far more useful once you provide the data from your own predictions. They accept a [data] input in the following format. Let’s create a new additive force plot with the following data:

    {
      featureNames: {
        '0': 'Marital status',
        '1': 'Geographic location',
        '2': 'Age',
        '3': 'Occupancy',
      },
      features: {
        '0': { value: 1.0, effect: 1.0 },
        '1': { value: 0.0, effect: 0.5 },
        '2': { value: 2.0, effect: -2.5 },
        '3': { value: 2.0, effect: -0.5 },
      }
    }

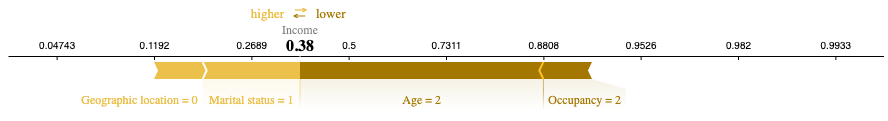
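In practice, feature names, feature values and SHAP effects often arrive as parallel arrays from a prediction service. The following is a minimal sketch of a helper that converts such arrays into the index-keyed format shown above. The type and function names (`ShapFeature`, `ShapForceData`, `toForceData`) are hypothetical and not part of the package, and the reading of `value` as the feature's input value and `effect` as its SHAP contribution is an assumption based on the example data.

```typescript
// Hypothetical type names; the shape mirrors the data object shown in this article.
interface ShapFeature {
  value: number;  // assumed: the feature's input value
  effect: number; // assumed: the feature's SHAP contribution
}

interface ShapForceData {
  featureNames: { [index: string]: string };
  features: { [index: string]: ShapFeature };
}

// Convert parallel arrays into the index-keyed object format.
function toForceData(names: string[], values: number[], effects: number[]): ShapForceData {
  if (names.length !== values.length || names.length !== effects.length) {
    throw new Error('names, values and effects must have the same length');
  }
  const data: ShapForceData = { featureNames: {}, features: {} };
  names.forEach((name, i) => {
    const key = String(i);
    data.featureNames[key] = name;
    data.features[key] = { value: values[i], effect: effects[i] };
  });
  return data;
}

// Rebuilds the example data from this article.
const forceData = toForceData(
  ['Marital status', 'Geographic location', 'Age', 'Occupancy'],
  [1.0, 0.0, 2.0, 2.0],
  [1.0, 0.5, -2.5, -0.5],
);
```

The resulting `forceData` object can then be exposed as a property on your component and bound to the [data] input.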

To make sure the graphs fit the look of your Angular application, we have built in parameters that let you further customise the graph you render. Have a look in this repository for more information about these parameters. For now, let’s create a different-looking graph with the same data as before by providing the following parameters:

[plotColors]="['rgb(235, 192, 75)', 'rgb(111, 207, 151)']"

[link]="'logit'"

[hideBaseValueLabel]="true"

[outNames]="['Income']"

This will show up as follows:

Congratulations! You have now added SHAP visualisers into your Angular frontend.
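A note on the [link] parameter used above: in the Python SHAP library, a logit link means the model output is expressed in log-odds and the plot axis is labelled with the corresponding probabilities. Assuming this package follows the same convention, the sketch below shows the arithmetic behind such a plot using the effects from the example data. The base value of 0 is a hypothetical placeholder, since the article's data does not specify one.

```typescript
// Inverse of the logit function: maps log-odds to a probability in (0, 1).
function sigmoid(logOdds: number): number {
  return 1 / (1 + Math.exp(-logOdds));
}

// Additive explanation: model output = base value + sum of feature effects.
function modelOutput(baseValue: number, effects: number[]): number {
  return effects.reduce((sum, effect) => sum + effect, baseValue);
}

const exampleEffects = [1.0, 0.5, -2.5, -0.5]; // effects from the example data
const assumedBaseValue = 0;                    // hypothetical; not given in the article

const logOdds = modelOutput(assumedBaseValue, exampleEffects); // -1.5
const probability = sigmoid(logOdds);                          // roughly 0.18

console.log(logOdds, probability);
```

With these numbers the negative effects of 'Age' and 'Occupancy' outweigh the positive ones, so the prediction ends up below the base value.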

The open source contribution by Deeploy is maintained in this repository. Contributions are welcome: we are actively developing the current SHAP visualisations and also extending the library to other explainability frameworks.

This is just the beginning. At Deeploy we are passionate about making ML more explainable. We believe that using well-designed visualisers to explain ML predictions is an essential step in ML adoption. Although we think that making SHAP explainers available to Angular developers is a good first step, we are planning to support: