From Deployment to Monitoring: How Deeploy Fits into the MLOps Lifecycle

Machine Learning Operations (MLOps) is a set of practices and tools that automates the process of developing, deploying, and managing Machine Learning models, making them more scalable, repeatable, and manageable. Without MLOps in place, it is difficult for organizations to develop and maintain large-scale Machine Learning models without incurring immense costs, implementation delays, and potentially poor model performance.

There are many ways to implement MLOps, but organizations usually rely on external tooling that either covers the whole lifecycle or addresses specific needs within it.

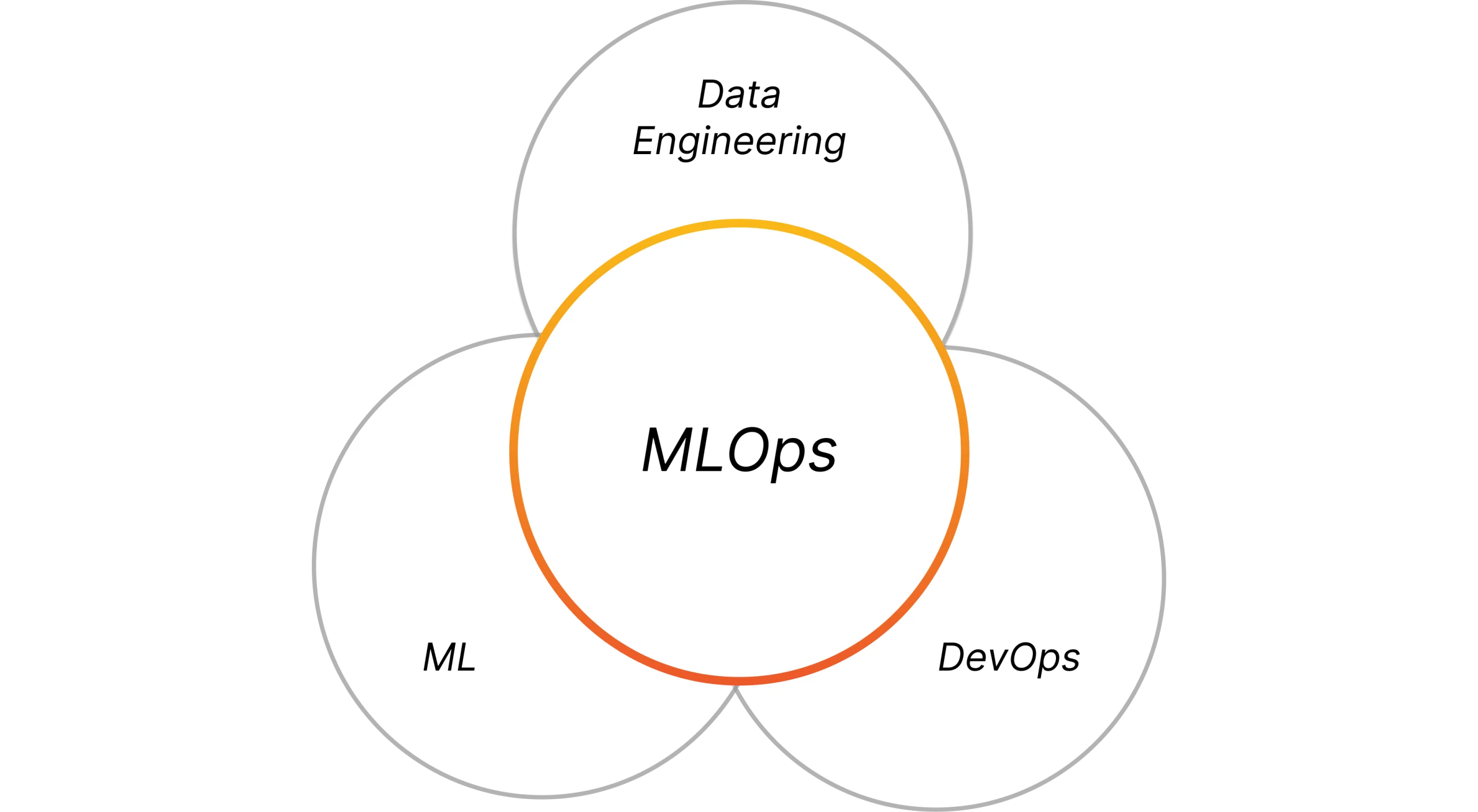

The Premises of MLOps

MLOps is a field that brings together three important domains: Data Engineering, DevOps, and Machine Learning. By combining these domains, MLOps ensures a streamlined and automated process that allows organizations to leverage the power of Machine Learning and generate value for their businesses.

More often than not, setting up MLOps in an organization involves external tools. These tools can either be end-to-end, covering the entire lifecycle of a model from development and training to deployment and monitoring, or best-of-breed, specializing in one or more specific phases of the MLOps process.

MLOps is vast and complex. A solution that covers every aspect of the cycle is therefore simpler to implement but can feel shallow in some areas. Best-of-breed solutions, on the other hand, are harder to coordinate and integrate, but they allow greater flexibility when assembling an MLOps stack, letting organizations choose different products for different needs.

In the end, the right choice depends largely on the organization's objectives and resources.

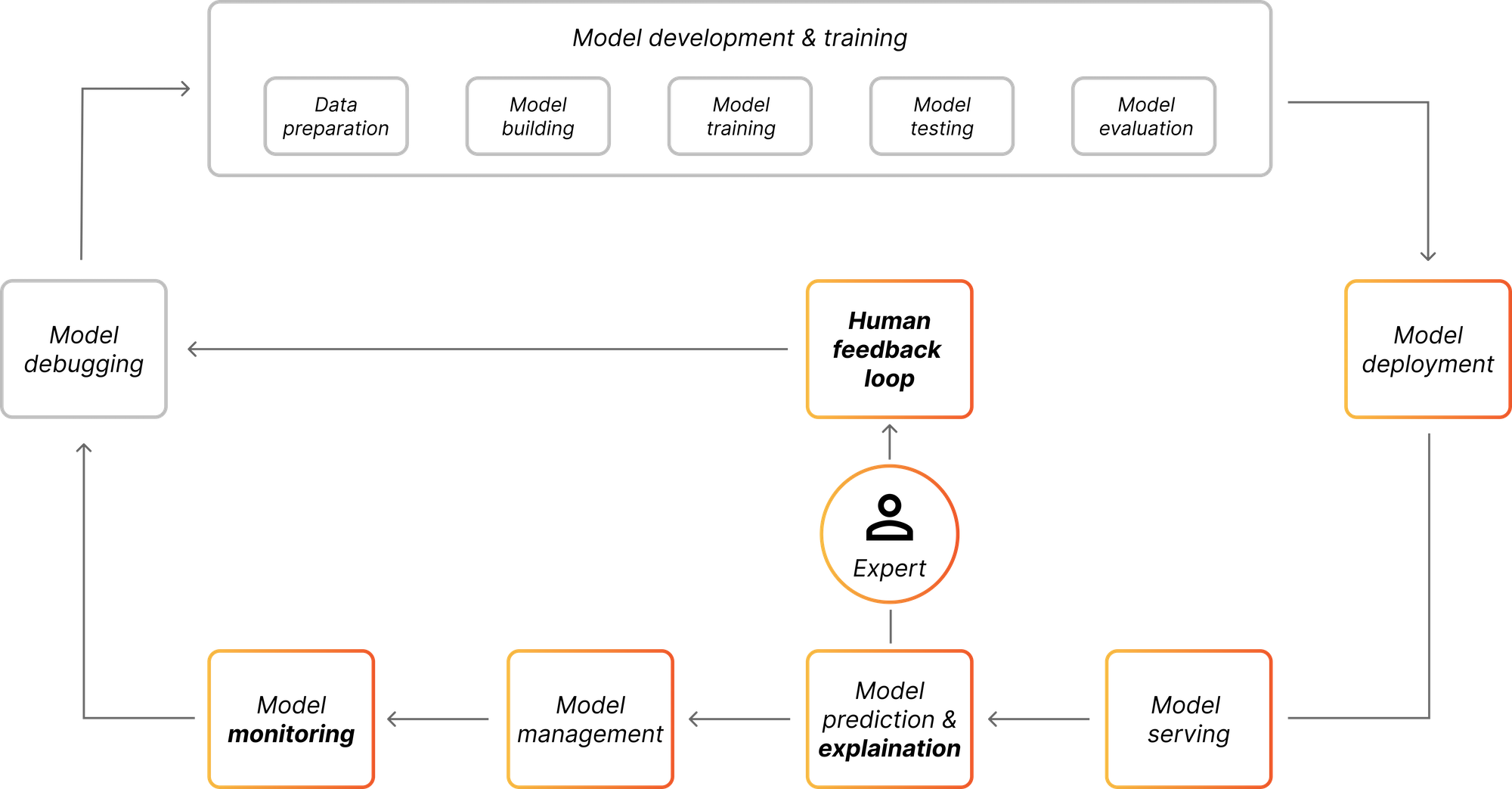

How Deeploy fits into the MLOps process

Deeploy offers a best-of-breed solution for productionizing ML models, covering everything from model deployment to model monitoring, with features that help ensure deployed models stay within a framework of responsibility.

As machine learning models are put into production across industries, concerns about their impact grow, and regulatory bodies are coming forward with guidelines and regulations.

This has put pressure on organizations to place their MLOps implementation within a Responsible AI framework, which refers to the practice of using AI systems while considering and minimizing their potential harm to individuals and society.

This is especially vital in high-risk use cases, such as credit scoring and loan evaluation, where ML models' decisions have a significant impact on people's lives.

In line with Responsible AI principles, Deeploy focuses on helping organizations ensure that their deployed ML models stay manageable, accountable and explainable.

1. Model Deployment

The deployment process is straightforward and intuitive and can be completed in a few simple steps. Deployments always include a model, and can optionally also host an explainer, transformer, or both. Each deployment is equipped with an endpoint, enabling communication with the model to retrieve predictions and explanations.
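To make "communication with the model" concrete, the sketch below builds (without sending) an HTTP request for a prediction or an explanation. It is a hedged illustration only: the base URL is a hypothetical placeholder, and the `{"instances": [...]}` payload with `:predict` and `:explain` paths is an assumption borrowed from the KServe V1 inference protocol, not a documented Deeploy API. Authentication, which real endpoints typically require, is omitted.

```python
import json
import urllib.request

# Hypothetical endpoint; use the URL shown for your own deployment.
BASE_URL = "https://example.com/v1/models/credit-model"


def build_request(instances, action="predict"):
    """Build (but do not send) a POST request to a model endpoint.

    Assumption: the endpoint follows a KServe V1 style protocol, accepting
    {"instances": [...]} at '<model>:predict' and '<model>:explain'.
    """
    if action not in ("predict", "explain"):
        raise ValueError(f"unsupported action: {action}")
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url=f"{BASE_URL}:{action}",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


req = build_request([[35, 52000.0, 0.31]], action="explain")
print(req.get_method(), req.full_url)
# Sending it would be: urllib.request.urlopen(req), which returns a JSON response.
```

Keeping request construction separate from sending makes it easy to inspect or log the exact payload before it reaches the model.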

Supported model frameworks include:

- TensorFlow

- PyTorch

- Scikit-learn

- XGBoost

- Custom Docker Images

In addition, during model deployment, Deeploy’s platform offers compliance insights that take teams through a checklist of best practices to make sure the deployment of the model is aligned with Responsible AI principles.

2. Model Serving

Both non-serverless and serverless deployment methods offer automatic scaling to handle incoming traffic. This means that as the usage of your model increases, additional instances of your model will be deployed to accommodate the growing traffic. Conversely, if there are more instances than required to serve the incoming traffic, your deployment will scale down accordingly.
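The scale-up and scale-down behaviour described above can be sketched as a proportional rule: size the deployment so that each instance carries roughly a target load. This is an illustration with the same shape as the Kubernetes Horizontal Pod Autoscaler formula, not Deeploy's actual scaling logic; the load metric and the `min_replicas`/`max_replicas` bounds are assumptions.

```python
import math


def desired_replicas(current_replicas, current_load_per_replica,
                     target_load_per_replica, min_replicas=1, max_replicas=10):
    """Proportional autoscaling rule (illustrative, not Deeploy's implementation).

    Load could be requests per second or concurrent requests per instance;
    both the metric and the bounds are assumptions for this sketch.
    """
    if target_load_per_replica <= 0:
        raise ValueError("target load must be positive")
    # Scale the replica count by how far the observed load is from the target.
    raw = math.ceil(current_replicas * current_load_per_replica / target_load_per_replica)
    # Clamp so the deployment never drops below the floor or exceeds the ceiling.
    return max(min_replicas, min(max_replicas, raw))


print(desired_replicas(2, 90, 50))   # traffic grew: scale up
print(desired_replicas(4, 10, 50))   # traffic fell: scale down
```

Rounding up with `math.ceil` errs on the side of spare capacity, and the clamp keeps a sudden traffic spike from requesting an unbounded number of instances.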

3. Model Prediction and Explanations

A big part of responsibility when employing AI and machine learning models is making sure model predictions are transparent and explainable. Which general trends are represented by the model? Which feature contributed most to the prediction? What input would lead to a different decision?

Deeploy supports the main explainability frameworks, such as SHapley Additive exPlanations (SHAP), Anchors, Partial Dependence Plot (PDP), and Higher Order Model-Agnostic Counterfactual Explanation (MACE), as well as a large set of tailored explainability methods for specific use cases such as transaction monitoring. Teams can then easily trace back predictions and review explanations of the factors that led to those decisions.
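To answer "which feature contributed most to the prediction?", SHAP builds on Shapley values. The sketch below computes exact Shapley values for a single prediction by averaging each feature's marginal contribution over all coalitions of the other features. It is a generic illustration of the underlying idea, not the SHAP library or Deeploy's explainer: absent features are simply replaced by a baseline value (an assumption), and the credit-score model and its inputs are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one prediction (exponential in feature count).

    Features outside a coalition take their baseline value, a simplifying
    assumption; SHAP's explainers use background data more elaborately.
    """
    n = len(x)

    def value(coalition):
        # Model output when only features in `coalition` take their real values.
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        total = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions of this size.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                total += weight * (value(s | {i}) - value(s))
        phi.append(total)
    return phi


# Hypothetical credit-score model over (income, debt_ratio, late_payments).
def score(z):
    return 0.5 * z[0] - 2.0 * z[1] - 1.5 * z[2]


x = [4.0, 0.5, 2.0]
baseline = [3.0, 0.3, 0.0]
print(shapley_values(score, x, baseline))
```

The contributions always sum to the difference between the prediction and the baseline prediction, so here the late payments stand out as the factor pulling the score down the most.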

4. Human Feedback Loop

Deeploy is built on the fundamental belief that providing clear explanations of deployed models’ prediction processes is crucial for both present understanding and future reference. As such, each deployment within Deeploy is equipped with an endpoint that enables the collection of feedback from experts or end-users. This feedback is solicited and recorded on a per-prediction basis, allowing for comprehensive evaluation.

From these evaluations, metrics such as the disagreement ratio, which measures the extent to which evaluators disagree with the model's predictions, are available for analysis. Finally, data science teams can also access the collected feedback for retraining purposes, helping further develop the models.
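A minimal sketch of per-prediction feedback and a disagreement ratio follows. The `Feedback` record shape is hypothetical, and the ratio is assumed to be the share of evaluations in which the evaluator disagreed with the model; Deeploy's exact definition may differ.

```python
from dataclasses import dataclass
from typing import Any, Optional


@dataclass
class Feedback:
    # Hypothetical record: one evaluator's verdict on one logged prediction.
    prediction_id: str
    agrees: bool                         # did the evaluator agree with the model?
    desired_output: Optional[Any] = None  # optional correction usable as a label


def disagreement_ratio(feedback):
    """Share of evaluations that disagree with the model; None if there are none."""
    if not feedback:
        return None
    disagreements = sum(1 for f in feedback if not f.agrees)
    return disagreements / len(feedback)


def retraining_examples(feedback):
    """Corrections supplied by evaluators, keyed by prediction id."""
    return {f.prediction_id: f.desired_output
            for f in feedback if not f.agrees and f.desired_output is not None}


fb = [Feedback("p1", True), Feedback("p2", False, "reject"),
      Feedback("p3", True), Feedback("p4", False)]
print(disagreement_ratio(fb), retraining_examples(fb))
```

Keying feedback by prediction id is what lets corrections be joined back to the logged inputs when assembling a retraining set.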

5. Model Management & Monitoring

A big facet of Responsible AI is making sure that model performance remains consistent over time. This is crucial because model degradation can easily lead to a drop in prediction accuracy which, in turn, affects the people impacted by the model's predictions.

Deeploy offers monitoring for a range of metrics, such as errors, response time, accuracy, and drift. In addition, users can set alerts on any metric based on desired thresholds. This allows users and teams to spot problems and take corrective action quickly, saving time and resources. Moreover, all predictions and events are logged, creating audit trails that are indispensable for accountability.
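As an illustration of drift monitoring combined with threshold alerts, the sketch below uses the Population Stability Index (PSI), one common drift measure, to compare a feature's binned distribution at training time with its live distribution, then checks all metrics against configured limits. PSI, the metric names, and the threshold values are assumptions chosen for the example, not Deeploy's built-in drift method or defaults.

```python
import math


def population_stability_index(expected, actual, eps=1e-6):
    """PSI = sum((a - e) * ln(a / e)) over bins of two proportion lists.

    eps stands in for empty bins so the logarithm stays finite.
    """
    psi = 0.0
    for e, a in zip(expected, actual):
        e, a = max(e, eps), max(a, eps)
        psi += (a - e) * math.log(a / e)
    return psi


def check_alerts(metrics, thresholds):
    """Return sorted names of metrics that breach their threshold.

    thresholds maps name -> (direction, limit); direction "above" fires when
    value > limit, "below" fires when value < limit.
    """
    fired = []
    for name, (direction, limit) in thresholds.items():
        value = metrics.get(name)
        if value is None:
            continue  # metric not reported in this window
        if (direction == "above" and value > limit) or (direction == "below" and value < limit):
            fired.append(name)
    return sorted(fired)


training_bins = [0.25, 0.25, 0.25, 0.25]
live_bins = [0.10, 0.20, 0.30, 0.40]
psi = population_stability_index(training_bins, live_bins)

# Illustrative thresholds; real limits depend on the model and use case.
thresholds = {"drift_psi": ("above", 0.2), "accuracy": ("below", 0.85),
              "p95_latency_ms": ("above", 300)}
metrics = {"drift_psi": psi, "accuracy": 0.88, "p95_latency_ms": 340}
print(round(psi, 4), check_alerts(metrics, thresholds))
```

Giving each threshold a direction matters because some metrics degrade upward (drift, latency, error rate) while others degrade downward (accuracy).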

Finally, just as during the deployment phase, teams can also monitor compliance. Available checklists are continuously updated to reflect the latest standards and regulations, helping companies ensure their ML efforts stay within compliance constraints. The human feedback loop also plays a part here, as having a human give input on model decisions helps monitor the fairness and accuracy of models.

Go about MLOps responsibly

Deeploy offers a comprehensive solution for organizations looking to productionize their ML models responsibly. Through an intuitive platform, individuals and teams can easily manage and monitor their models and make sure they stay transparent and explainable.

In an ever-evolving and complex regulatory landscape, Deeploy helps organizations keep their ML models transparent, accurate, fair, and reliable over time, making sure practices are aligned with Responsible AI principles and, therefore, compliant with regulations and internal policies.