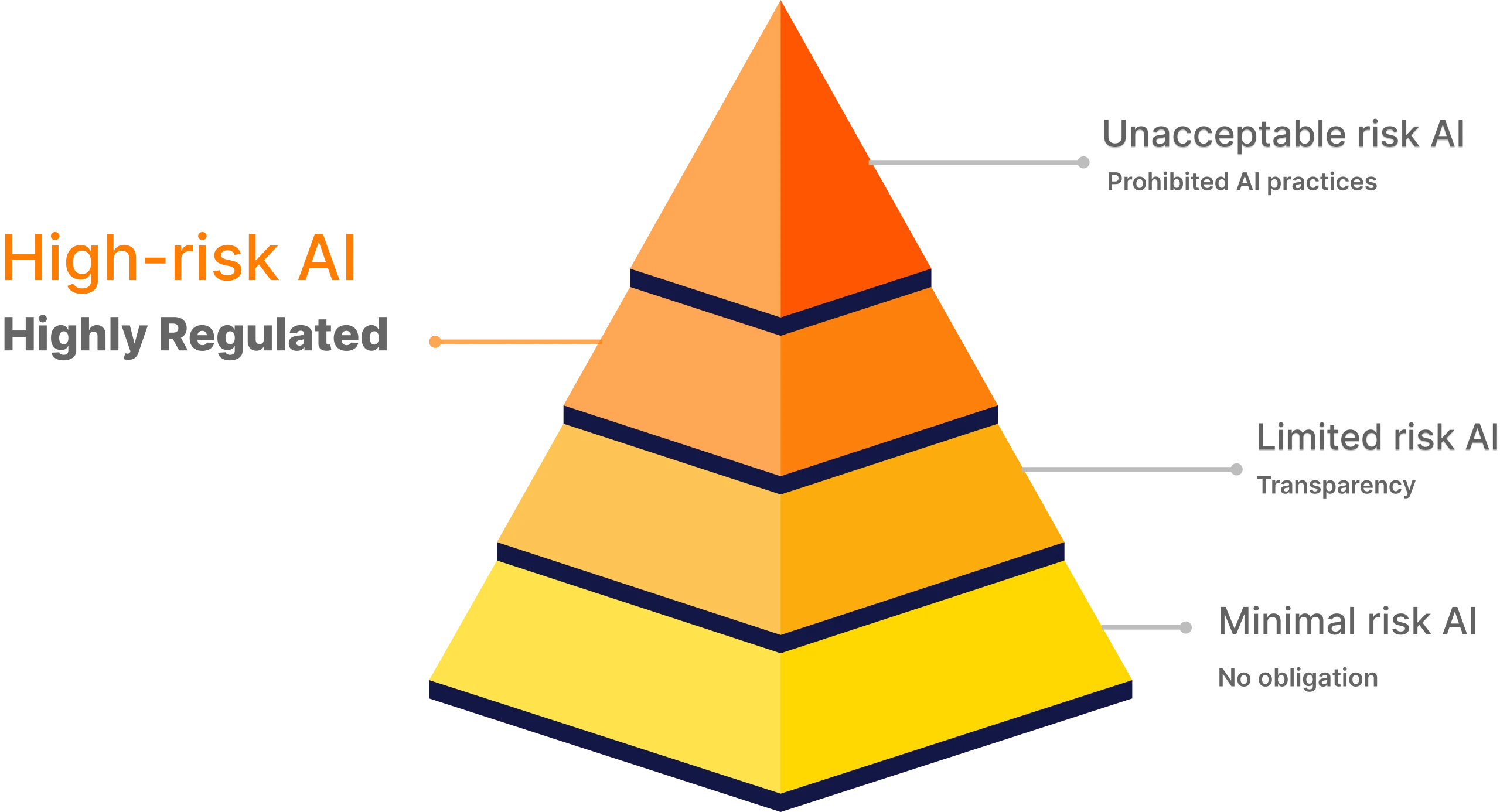

Laws governing artificial intelligence, such as the EU AI Act, are putting AI systems under scrutiny. To comply, AI systems must be fair, transparent, and secure.

A platform to ensure your AI models stay within regulatory requirements

Learn how high-risk AI systems are affected by the EU AI Act and how using Deeploy can help you comply.

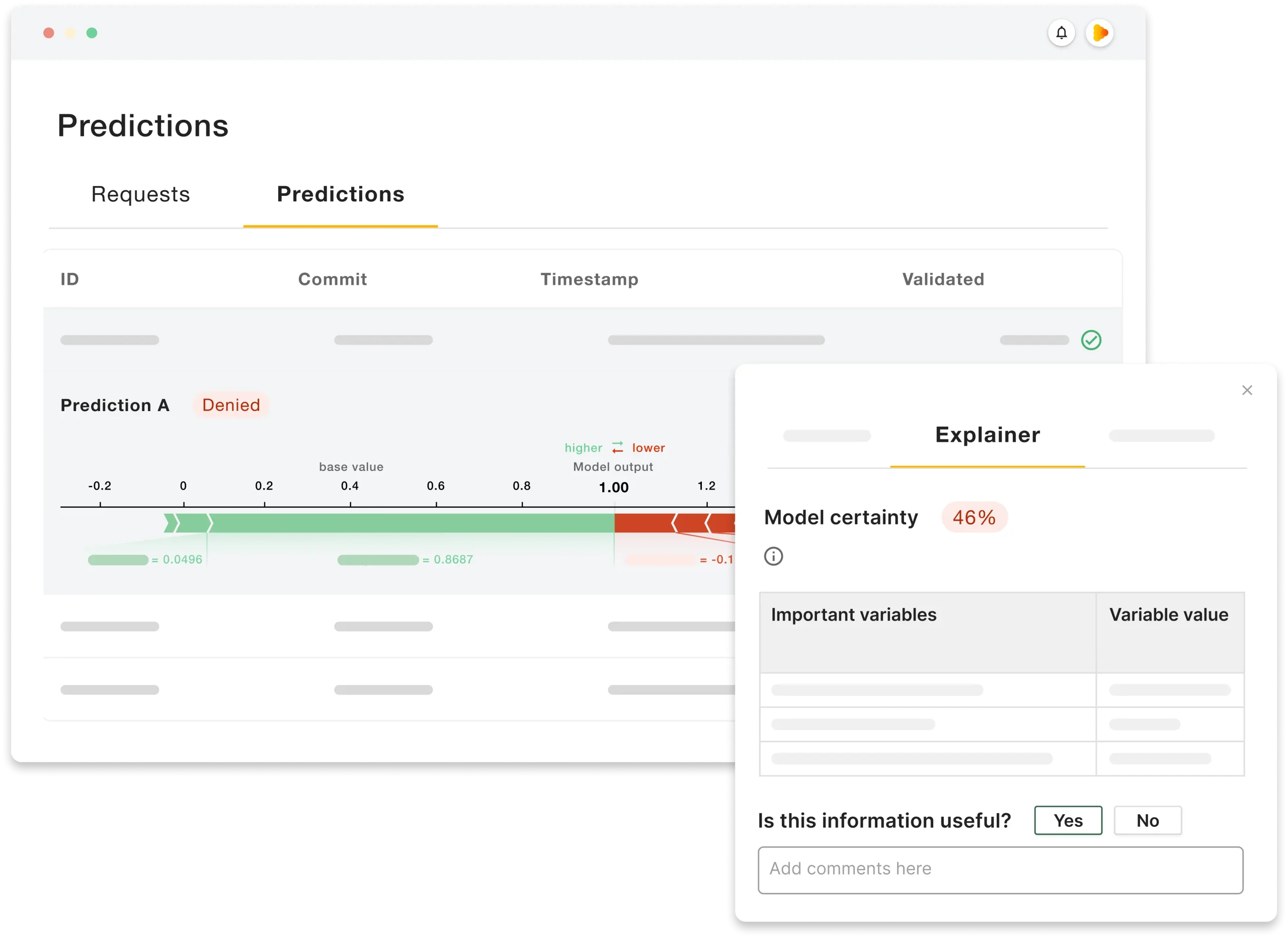

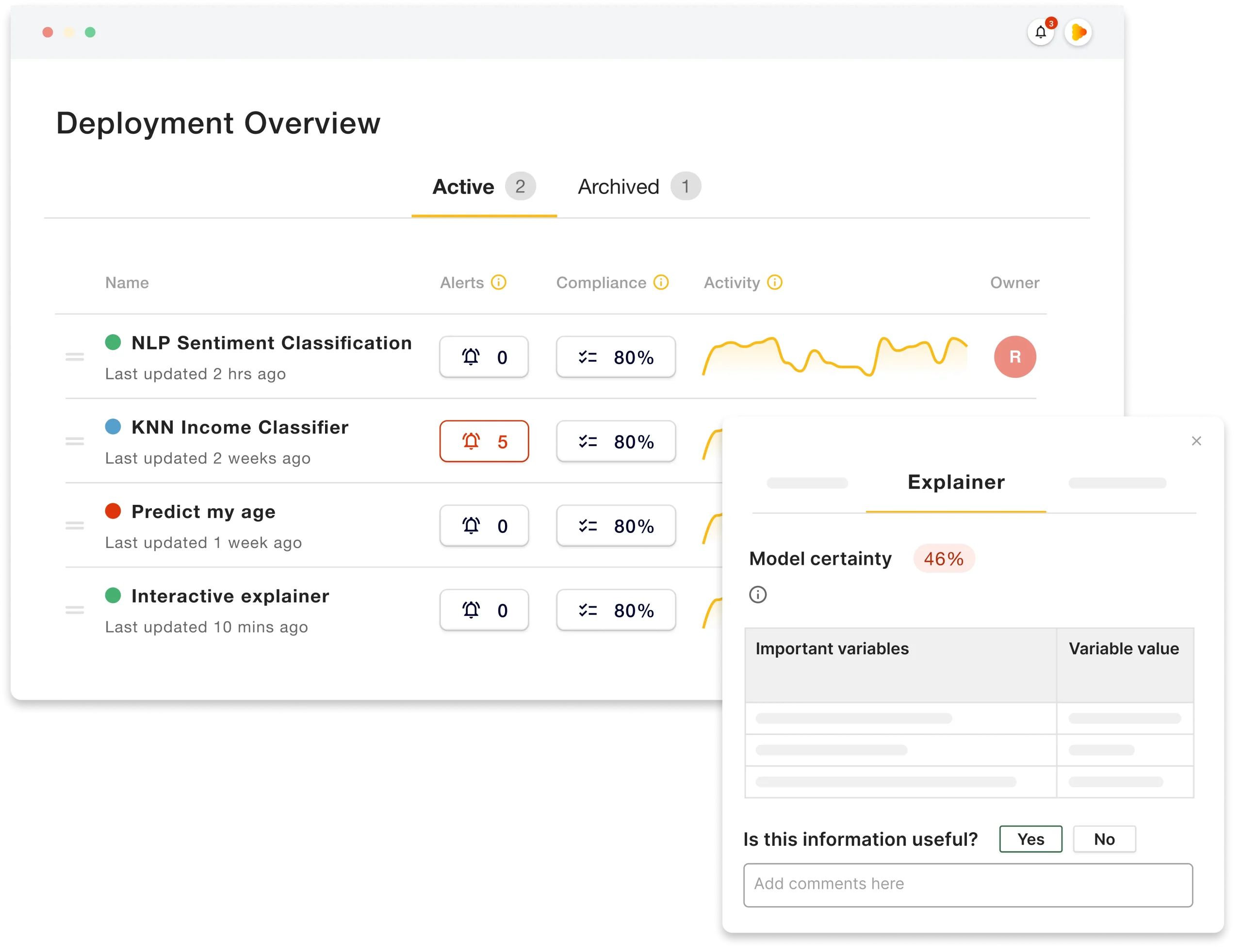

Art. 13: Transparency & Information

High-risk AI systems should be developed

“(…) in such a way as to ensure that their operation is sufficiently transparent to enable deployers to interpret the system’s output (…)”

- Explain every prediction using a wide range of explainability methods

- Out-of-the-box model explainability

- Have experts review predictions and give feedback through a feedback loop

- Use the collected feedback to improve model performance
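As a rough illustration of what a per-prediction explanation can look like, here is a minimal sketch of baseline-relative feature attribution for a linear model, in plain Python. It is not Deeploy's explainer, and the model, feature names, weights, and baseline values are all hypothetical. For a linear model, each feature's contribution is its weight times the difference between the input value and a baseline value, and those contributions add up exactly to the change in score relative to the baseline.

```python
def predict(weights: dict[str, float], bias: float, x: dict[str, float]) -> float:
    """Score of a linear model: bias plus the sum of weight * feature value."""
    return bias + sum(w * x[name] for name, w in weights.items())


def explain(weights: dict[str, float], baseline: dict[str, float],
            x: dict[str, float]) -> dict[str, float]:
    """Per-feature contribution of input x relative to a baseline input.

    For a linear model these contributions sum exactly to
    predict(x) - predict(baseline), so nothing is left unexplained.
    """
    return {name: w * (x[name] - baseline[name]) for name, w in weights.items()}


# Hypothetical model and inputs, for illustration only.
weights = {"income": 0.00002, "debt_ratio": -1.5}
bias = 0.3
baseline = {"income": 40000, "debt_ratio": 0.3}  # e.g. training-set averages
applicant = {"income": 55000, "debt_ratio": 0.6}

contributions = explain(weights, baseline, applicant)
# Print the features that moved this prediction most, largest effect first.
for name, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name}: {value:+.3f}")
```

Non-linear models need model-agnostic methods such as SHAP or LIME; this linear case only shows the idea of attributing a single prediction to its inputs.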

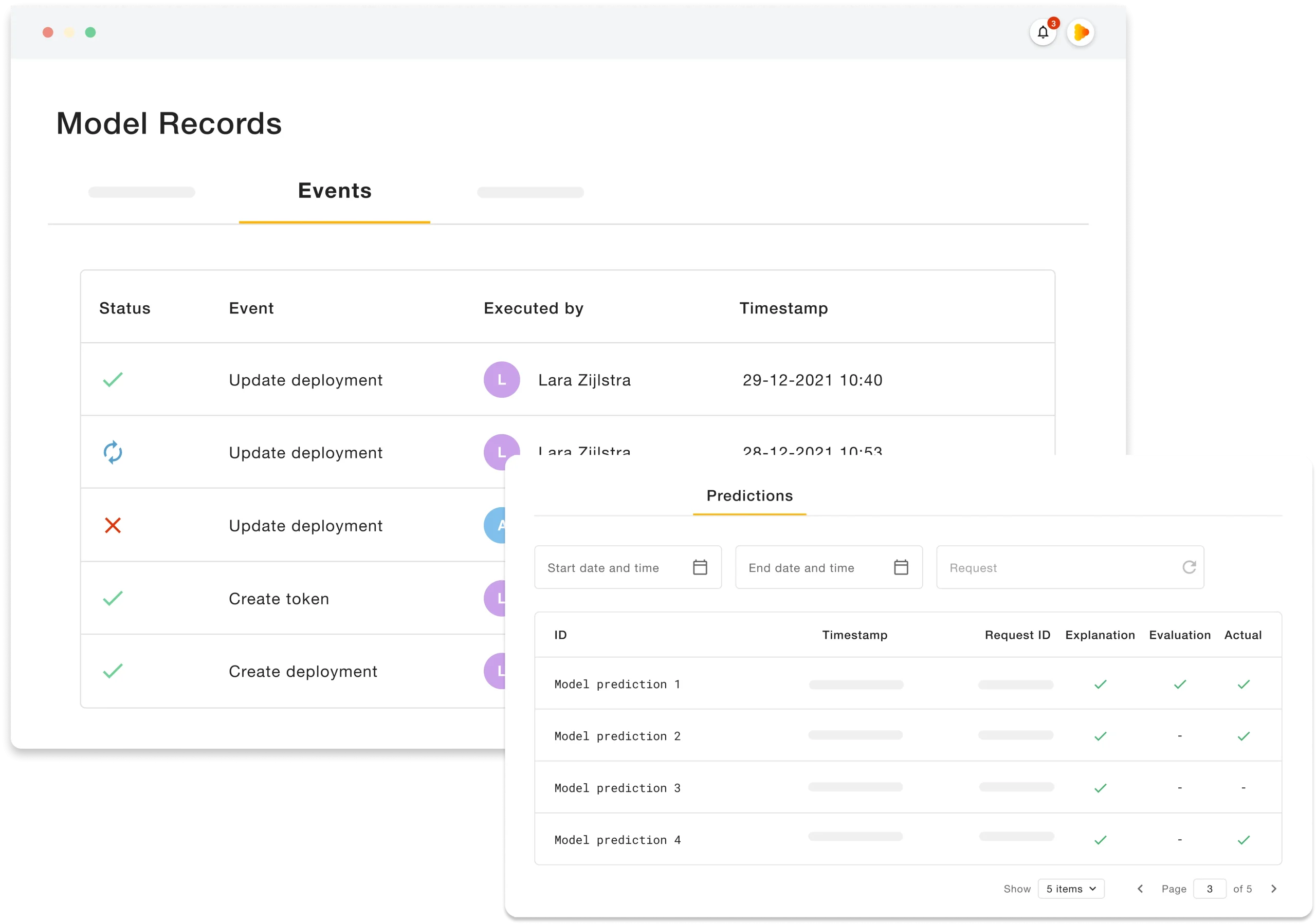

Art. 12: Record-Keeping

High-risk AI systems shall

“(…) technically allow for the automatic recording of events (‘logs’) over the duration of the lifetime of the system. (…)”

- Automatic recording of all deployment updates and events

- View all container logs generated by models

- Trace back and reproduce every prediction or decision
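A minimal sketch of automatic, tamper-evident record-keeping, assuming an in-memory store and a hypothetical `PredictionLog` class (this is not Deeploy's API or log format). Each prediction is stored with its timestamp, model version, inputs, and output, so it can be traced back and re-run against the same model version. Each record also carries a SHA-256 hash of its contents plus the previous record's hash, so editing any stored record breaks the chain.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone


class PredictionLog:
    """Hypothetical append-only, hash-chained log of model predictions."""

    def __init__(self) -> None:
        self._records: list[dict] = []

    def record(self, model_version: str, inputs: dict, output) -> str:
        """Append one prediction event and return its id."""
        prev_hash = self._records[-1]["hash"] if self._records else ""
        entry = {
            "id": str(uuid.uuid4()),
            "timestamp": datetime.now(timezone.utc).isoformat(),
            "model_version": model_version,
            "inputs": inputs,
            "output": output,
            "prev_hash": prev_hash,
        }
        # Hash the entry (including the previous hash) so later edits break the chain.
        payload = json.dumps(entry, sort_keys=True).encode("utf-8")
        entry["hash"] = hashlib.sha256(payload).hexdigest()
        self._records.append(entry)
        return entry["id"]

    def trace(self, prediction_id: str) -> dict:
        """Return the stored record needed to reproduce a prediction."""
        for entry in self._records:
            if entry["id"] == prediction_id:
                return entry
        raise KeyError(prediction_id)

    def verify(self) -> bool:
        """Recompute every hash and check that the chain is intact."""
        prev_hash = ""
        for entry in self._records:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if body["prev_hash"] != prev_hash:
                return False
            payload = json.dumps(body, sort_keys=True).encode("utf-8")
            if hashlib.sha256(payload).hexdigest() != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = PredictionLog()
pid = log.record("credit-model:1.2.0", {"income": 55000, "debt_ratio": 0.6}, "approve")
print(log.trace(pid)["model_version"])  # credit-model:1.2.0
print(log.verify())  # True
```

A production system would persist these records to durable, access-controlled storage rather than a Python list.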

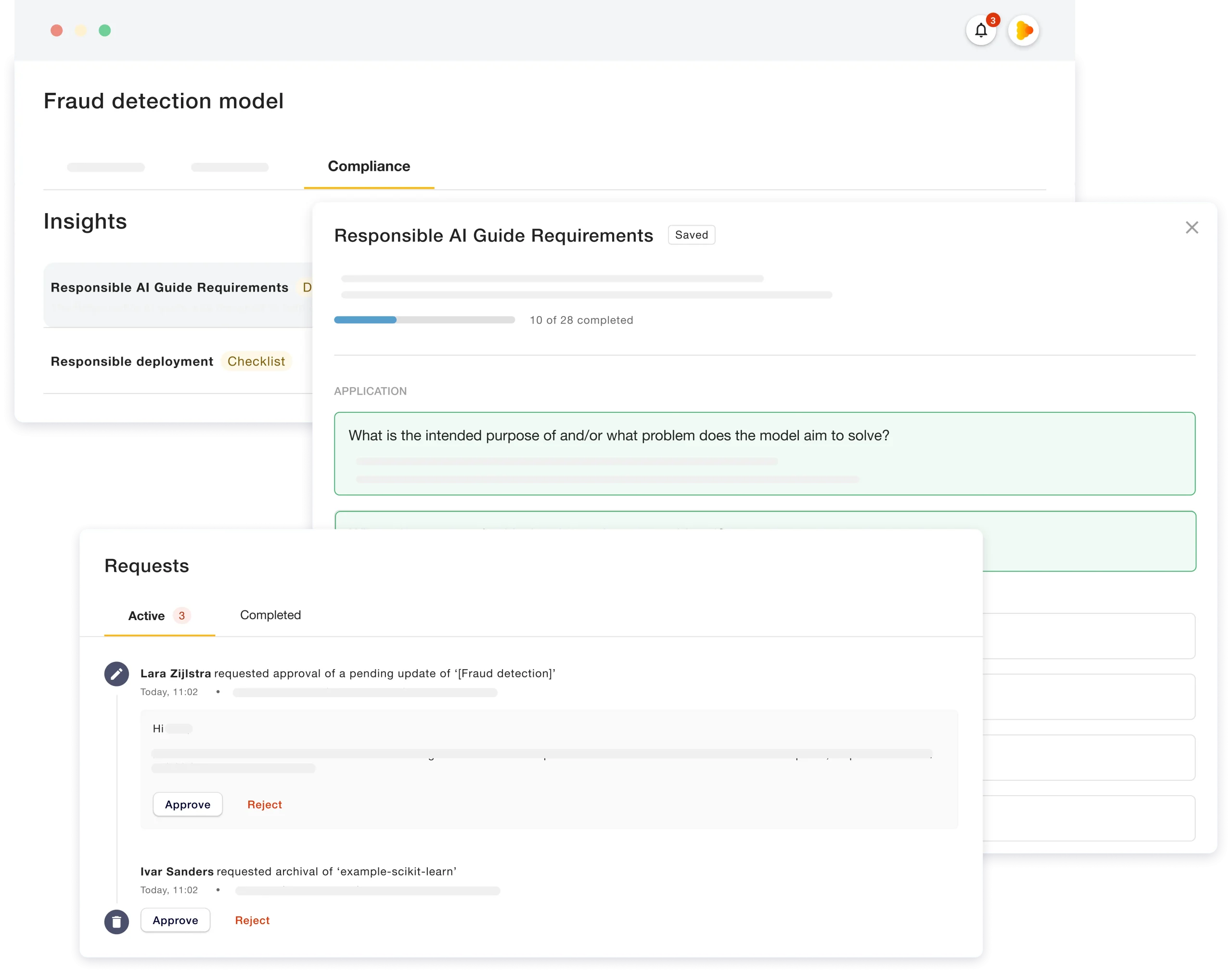

Art. 14: Human Oversight

High-risk AI systems

“(…) shall be designed and developed in such a way, including with appropriate human-machine interface tools, that they can be effectively overseen by natural persons during the period in which the AI system is in use.”

- Easy overview of all running models

- Get alerts on model deterioration or drift

- Have experts or end-users evaluate the accuracy of predictions

- Use the collected feedback to improve model performance

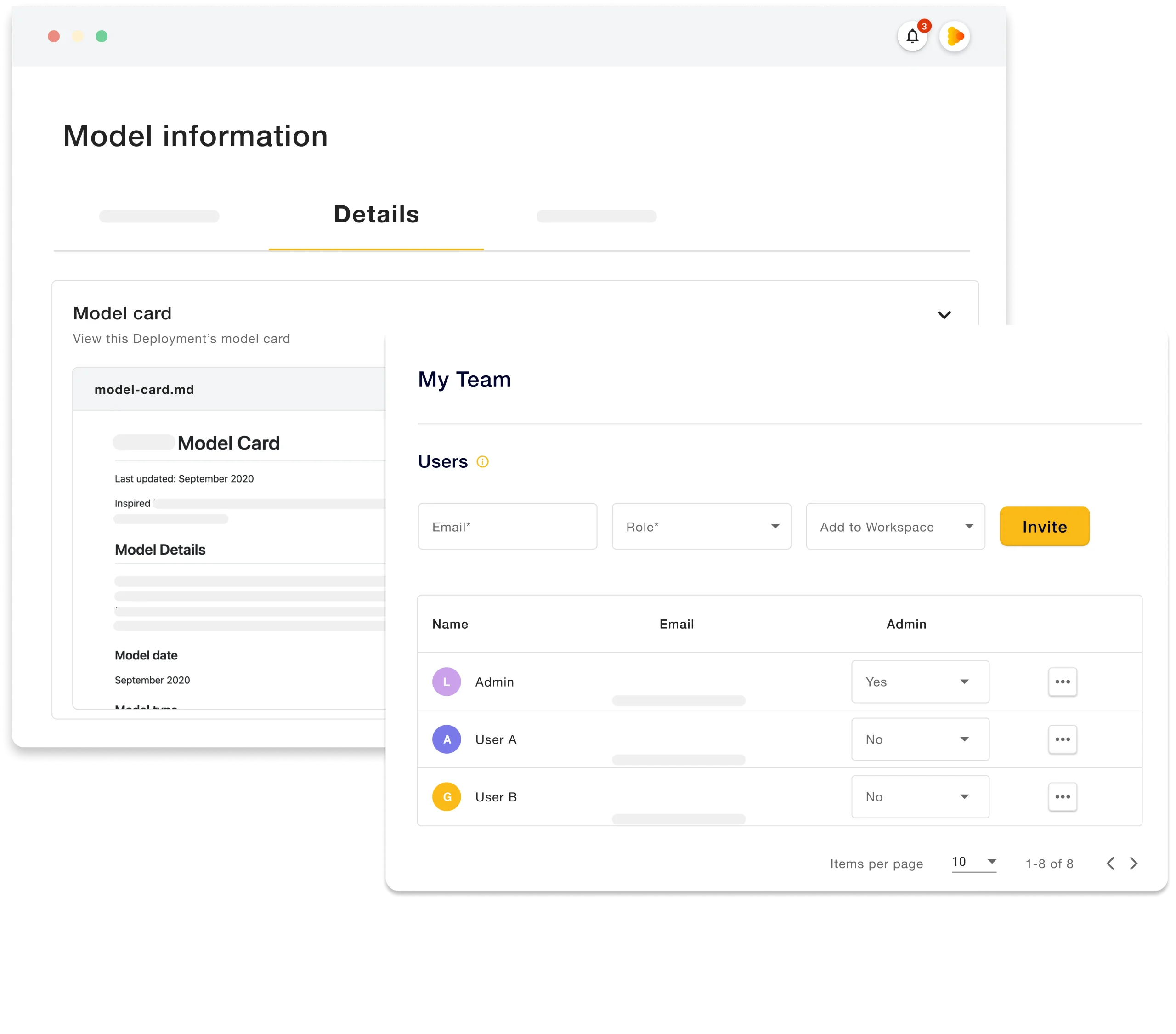

Art. 11: Technical Documentation

The EU AI Act specifies that

“the technical documentation of a high-risk AI system shall be drawn up before that system is placed on the market or put into service and shall be kept up-to-date.”

- Set clear ownership across different teams & workspaces

- Set various levels of user authorization

- Store all documentation & model cards in one centralized space

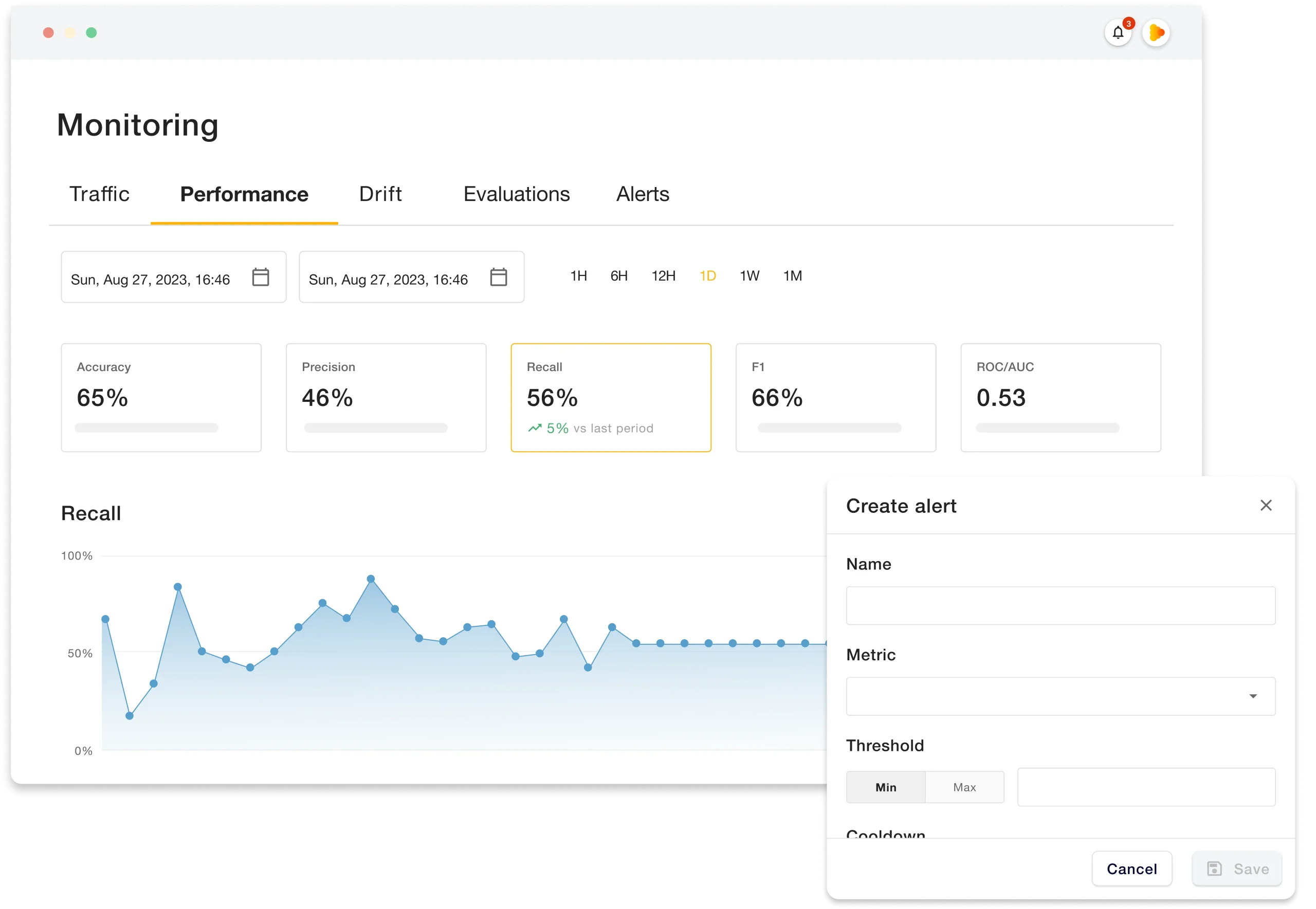

Art. 15: Accuracy, Robustness, and Cybersecurity

The EU AI Act specifies that high-risk AI systems should

“(…) achieve an appropriate level of accuracy, robustness, and cybersecurity, and perform consistently in those respects throughout their lifecycle.”

- Monitor model accuracy over time

- Monitor traffic, performance, drift and bias

- Create custom alerts for any metrics

- Monitor models on any platform: SageMaker, Azure ML, MLflow, …

- Personal keys for secure interaction with the Deeploy API and Python client
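Deeploy's own drift metrics are not described here. As a generic sketch of a custom drift alert, the code below computes the Population Stability Index (PSI) between a reference sample of one feature (for example, training data) and a live sample, using equal-width bins derived from the reference. The function names `psi` and `drift_alert` and the 0.2 alert threshold are assumptions; 0.2 is a commonly used rule of thumb, not a value taken from the EU AI Act or from Deeploy.

```python
import math


def psi(reference: list[float], live: list[float], bins: int = 10) -> float:
    """Population Stability Index of `live` against `reference` (both non-empty)."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / bins or 1.0  # avoid division by zero for a constant reference

    def proportions(values: list[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Values outside the reference range fall into the first or last bin.
            idx = min(max(int((v - lo) / width), 0), bins - 1)
            counts[idx] += 1
        # Floor empty bins at a tiny share so the logarithm stays defined.
        return [max(c / len(values), 1e-6) for c in counts]

    ref, cur = proportions(reference), proportions(live)
    # PSI = sum over bins of (live share - reference share) * ln(live share / reference share)
    return sum((c - r) * math.log(c / r) for r, c in zip(ref, cur))


def drift_alert(reference: list[float], live: list[float],
                threshold: float = 0.2) -> tuple[float, bool]:
    """Return the PSI score and whether it exceeds the (hypothetical) alert threshold."""
    score = psi(reference, live)
    return score, score > threshold


reference = [i / 100 for i in range(100)]   # hypothetical training-time feature values
live = [0.5 + i / 200 for i in range(100)]  # hypothetical production values, shifted upward
score, alert = drift_alert(reference, live)
print(f"PSI={score:.2f} alert={alert}")
```

Computing PSI per feature and per time window, then alerting when any score crosses the threshold, is one common way to turn drift monitoring into a custom alert.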

Art. 17: Quality Management System

The EU AI Act requires a quality management system that is

“(…) documented in the form of written policies, procedures, and instructions (…)”

It must include

“(…) a strategy for regulatory compliance, including compliance with conformity assessment procedures and procedures for the management of changes to the AI system. (…)”

- Compliance checklists on the EU AI Act and other AI safety policies

- Upload compliance checklists tailored to your organization

- Governance over any AI model in the organization