Trustworthy AI for Healthcare

AI adoption promises to dramatically lower the cost and improve the quality of healthcare. However, the lack of explainability is hindering implementation because of both ethical and legal concerns around transparency and fair use. To accelerate adoption, organizations must prioritize transparency around AI decisions and establish strong control and oversight over AI systems.

Learn more about how Healthplus.ai and other healthcare organizations have leveraged Deeploy to secure a safe, compliant, and easily scalable implementation of AI systems.

Healthcare organizations using Deeploy

Establish trust with clinicians & patients

Ensure explainability & transparency

Even though AI models can catch patterns that might go unnoticed by physicians, a lack of understanding of model predictions rightfully causes distrust and skepticism among clinicians and patients. In a high-risk industry such as healthcare, it is therefore vital that model decisions are fully transparent and understandable.

Deeploy allows data teams to deploy models with both standard and custom explainers. The latter is especially important as certain models and applications require more advanced or state-of-the-art explainability methods.

For example, Deeploy and Healthplus.ai pioneered an explainable AI system for predicting post-surgery infections. Instead of attributing predictions to single features, it applies explainability to dynamic time series data and combined clinical features. Using Deeploy, teams can easily deploy these state-of-the-art techniques and ensure all use cases in production are fully explainable and transparent.

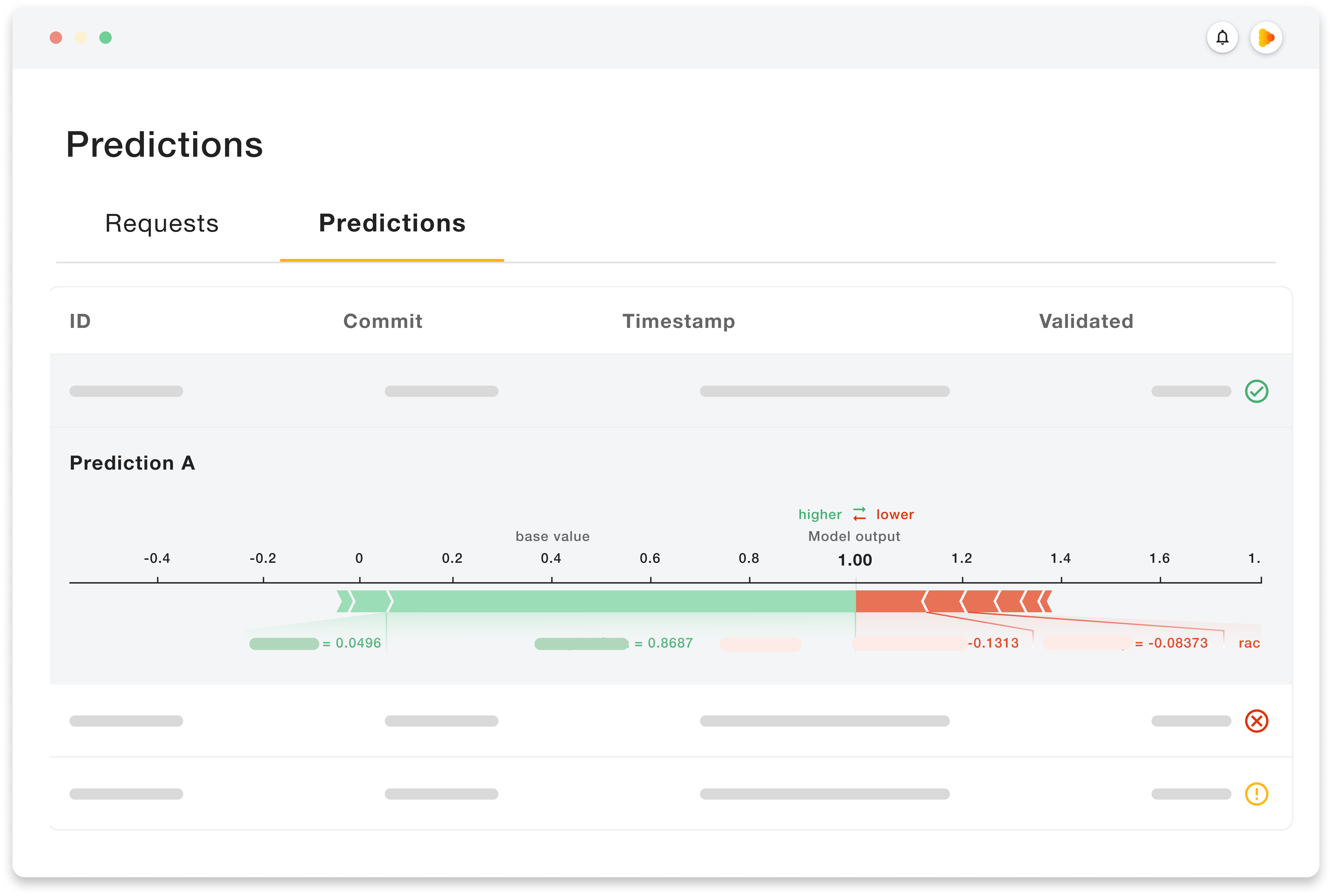

Besides deploying models with explainability, it is also crucial that the explanations make sense to end users. Organizations can easily connect their user interfaces to Deeploy, allowing data to flow in both directions and showing predictions and model explanations to end users.

Keep medical experts in control

Besides having full insight into the underlying reasons behind model predictions, medical experts must also stay in control of these predictions. Experts should be able to correct and give feedback on model decisions, ensuring that they stay in the lead of decision-making. This feedback can help retrain the model so that it becomes aligned with expert knowledge, fostering trust and confidence in the overall AI system.

Deeploy allows for a feedback system to be implemented in which users can give feedback about both the predictions and explanations. This gives the users a feeling of control and provides the model with valuable new input data, improving the quality of the predictions over time.

The data is fed back into Deeploy where data teams can monitor the percentage of overruled recommendations through the disagreement ratio metric. This helps determine model effectiveness and identify areas for improvement. It is also possible to set alerts for the disagreement ratio, meaning teams get notified when the disagreement ratio gets too high, which can inform when to perform a model update.
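As a rough illustration of the metric described above, the sketch below computes a disagreement ratio from expert feedback and checks it against an alert threshold. It is not Deeploy's implementation or API: the `Feedback` record, the function names, the choice of reviewed recommendations as the denominator, and the 0.2 threshold are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class Feedback:
    """One expert review of a model recommendation (hypothetical record shape)."""
    prediction_id: str
    agreed: bool  # False means the expert overruled the recommendation


def disagreement_ratio(feedback: list[Feedback]) -> float:
    """Fraction of reviewed recommendations that experts overruled."""
    if not feedback:
        return 0.0  # no reviews yet, so nothing has been overruled
    overruled = sum(1 for item in feedback if not item.agreed)
    return overruled / len(feedback)


def should_alert(ratio: float, threshold: float = 0.2) -> bool:
    """Return True when the ratio exceeds the (assumed) alert threshold."""
    return ratio > threshold


# Four reviews, two of which overrule the model.
reviews = [
    Feedback("p1", agreed=True),
    Feedback("p2", agreed=False),
    Feedback("p3", agreed=True),
    Feedback("p4", agreed=False),
]
ratio = disagreement_ratio(reviews)
print(f"disagreement ratio: {ratio:.2f}, alert: {should_alert(ratio)}")
```

In practice the threshold would be chosen per use case, since an acceptable level of expert disagreement depends on the clinical setting.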

Stay in control of all use cases

As adoption in healthcare organizations scales, the same phenomenon seen in other industries will follow: a growing number of models scattered across the organization leads to an increasing lack of control. This not only increases the time data scientists spend managing those models but also introduces governance and compliance risks.

Deeploy enables teams to deploy, serve, monitor, and manage AI models in one single platform, allowing for easier oversight while saving time and resources.

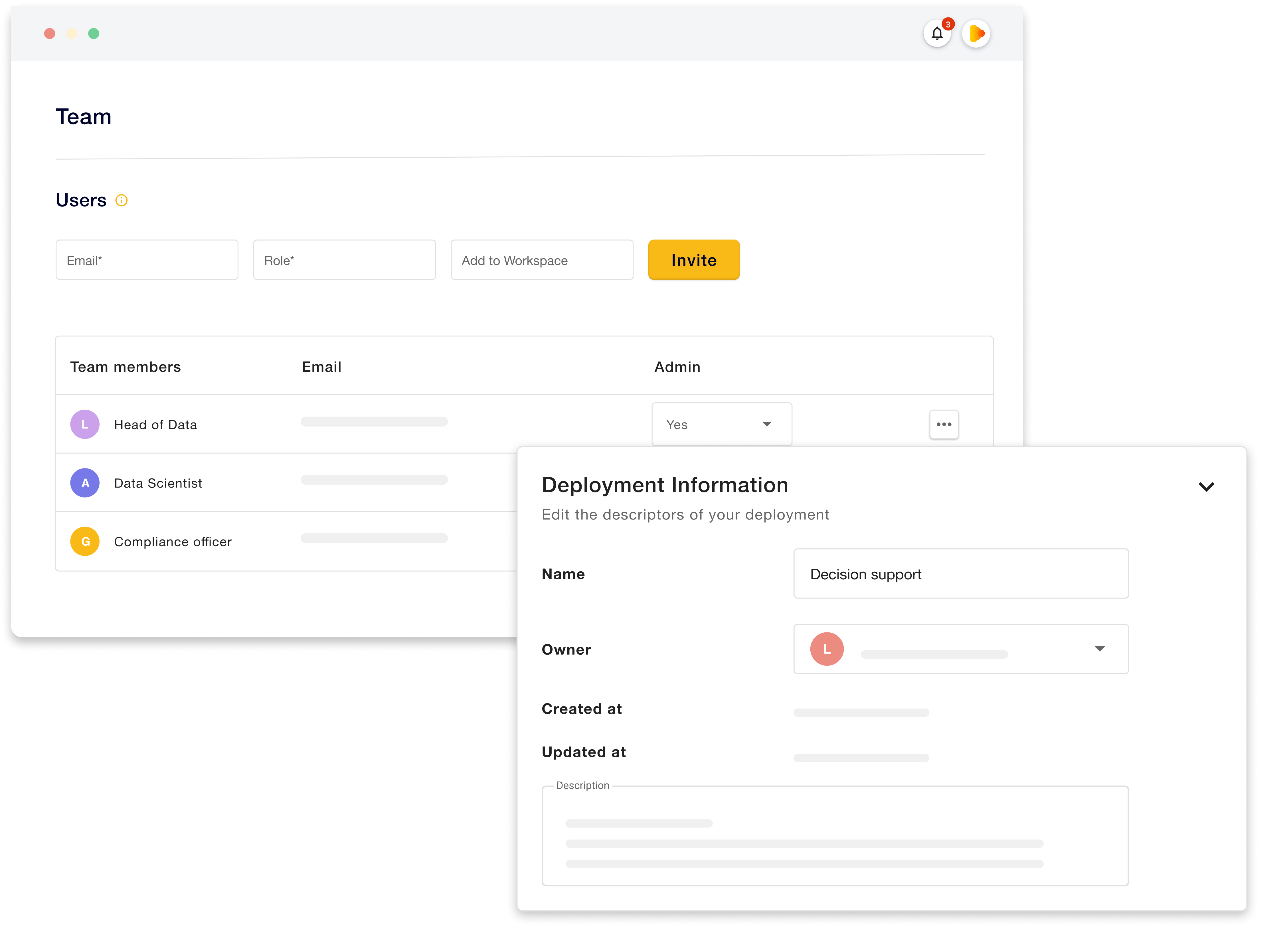

Teams and workspaces help ensure the right oversight and access to different AI applications within the same organization. It is also possible to assign model owners to each model, who are responsible for maintaining control over its ongoing operation.

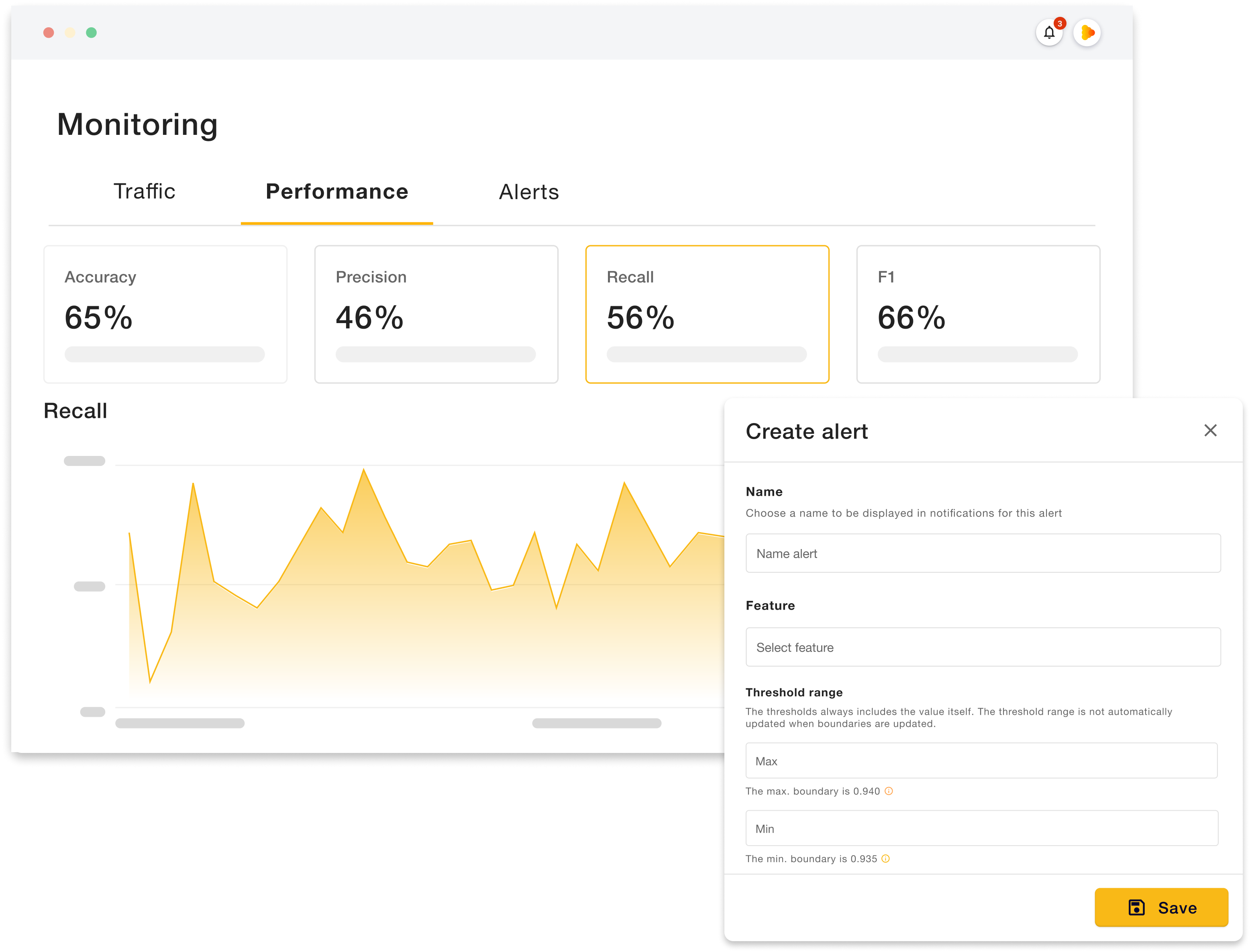

From the same platform, teams can monitor models’ vital performance metrics, directly update and re-version them, check model health, and review and update all deployment information.

Alerts can also be set for all monitoring metrics, allowing teams to act swiftly in case of model degradation, which in turn increases efficiency and trust.

Speed up the scale-up potential

Quicker certification & faster clinical implementation

By ensuring model performance, explainability, and transparency, it becomes quicker to prove the reliability of the system to end users. This results in a shorter market validation timeline, faster acceptance from clinicians, faster certification, and, thus, faster market roll-out.

Using Deeploy to oversee, explain, and control models establishes the necessary clinical trust and speeds up the scale-up time of these promising applications, saving healthcare organizations significant time and resources.

Comply with legal requirements

Healthcare organizations must comply with regulatory requirements such as the EU AI Act and the Medical Device Regulation (MDR) to ensure patient protection when deploying AI solutions. In particular, AI systems that qualify as medical devices will be classified as high-risk under the soon-to-be-enforced EU AI Act and will thus be required to adhere to strict standards of transparency, robustness, record-keeping, and human oversight.

Enhancing the transparency of AI models through explainable AI and ensuring effective oversight helps organizations meet these requirements and adhere to legal standards.

Maintain transparent operations

It is vital for regulatory compliance that AI systems operate transparently. As mentioned, Deeploy ensures transparency and explainability of all model recommendations. Moreover, all recommendations and their accompanying explanations can be traced back and reproduced, creating a full audit trail of model decisions and helping to pinpoint any issues.
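To make the idea of a traceable, reproducible recommendation concrete, here is a minimal sketch of what a single audit record could contain. It is an illustration only, not Deeploy's storage format: the `audit_record` function and all field names are hypothetical. The record ties a prediction and its explanation to the model version and to a hash of the exact input, so a later re-run can be checked against the original request.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(model_version: str, inputs: dict, prediction, explanation: dict) -> dict:
    """Build one audit entry linking a prediction to its input and explanation.

    All field names are assumptions made for this sketch.
    """
    # Serialize with sorted keys so the same input always yields the same hash.
    payload = json.dumps(inputs, sort_keys=True).encode("utf-8")
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "model_version": model_version,
        "input_sha256": hashlib.sha256(payload).hexdigest(),
        "prediction": prediction,
        "explanation": explanation,
    }


# Example with made-up values; the same input in a different key order hashes identically.
first = audit_record("v1", {"age": 61, "crp": 12.4}, 0.73, {"crp": 0.4, "age": 0.1})
second = audit_record("v1", {"crp": 12.4, "age": 61}, 0.73, {"crp": 0.4, "age": 0.1})
print(first["input_sha256"] == second["input_sha256"])
```

Storing a hash instead of the raw input is one possible design choice when the input contains patient data; whether it is appropriate depends on the organization's own data retention and privacy requirements.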

Keep model documentation & records readily available

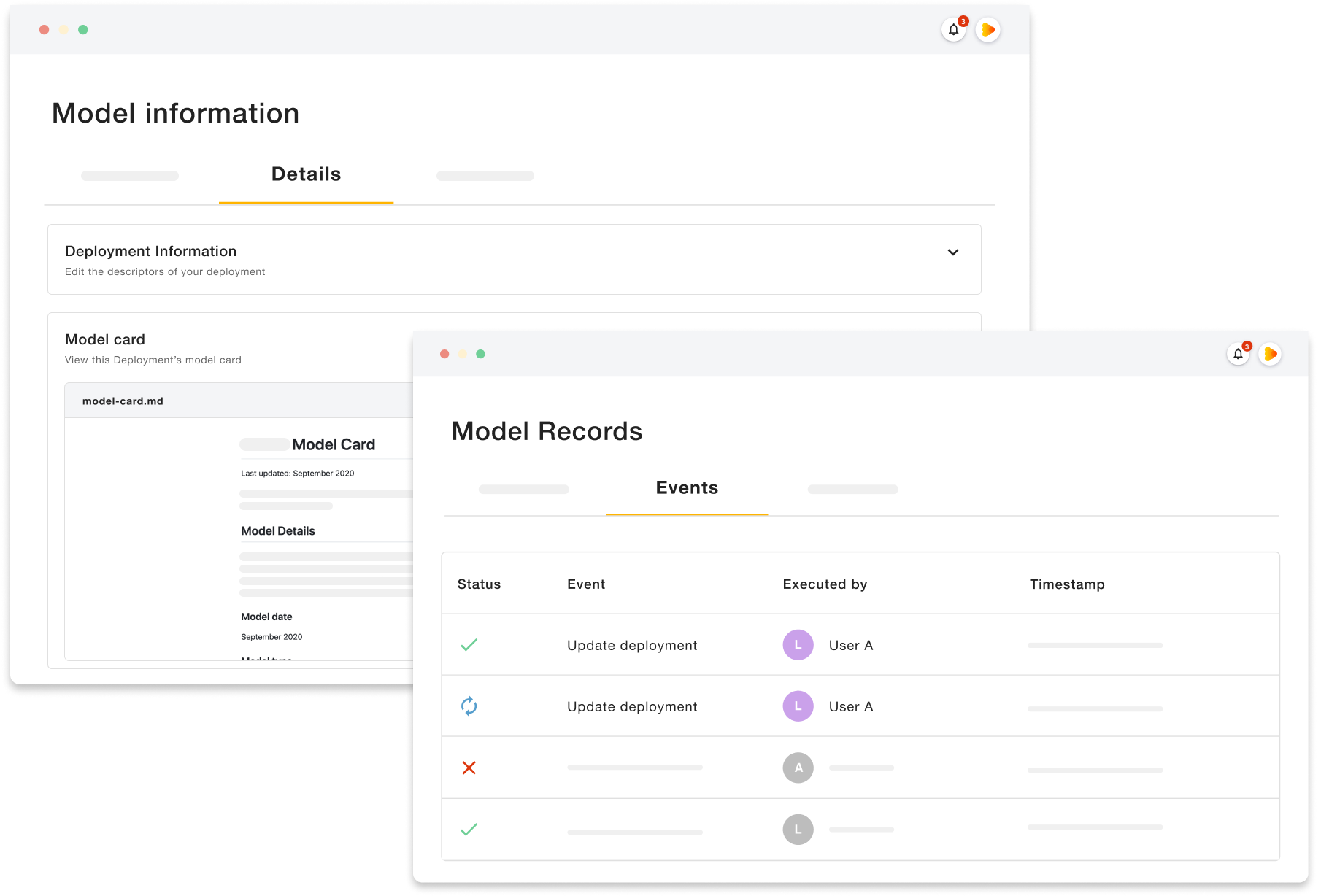

Regulations require that information on the functioning of AI systems is easily available and that all events around the deployment are logged.

Deeploy helps meet these requirements by allowing teams to create and store model and data cards that document model capabilities, intended use, performance characteristics, and limitations.

Additionally, all events for every deployment are automatically recorded and stored, creating a full record that can be consulted when needed.

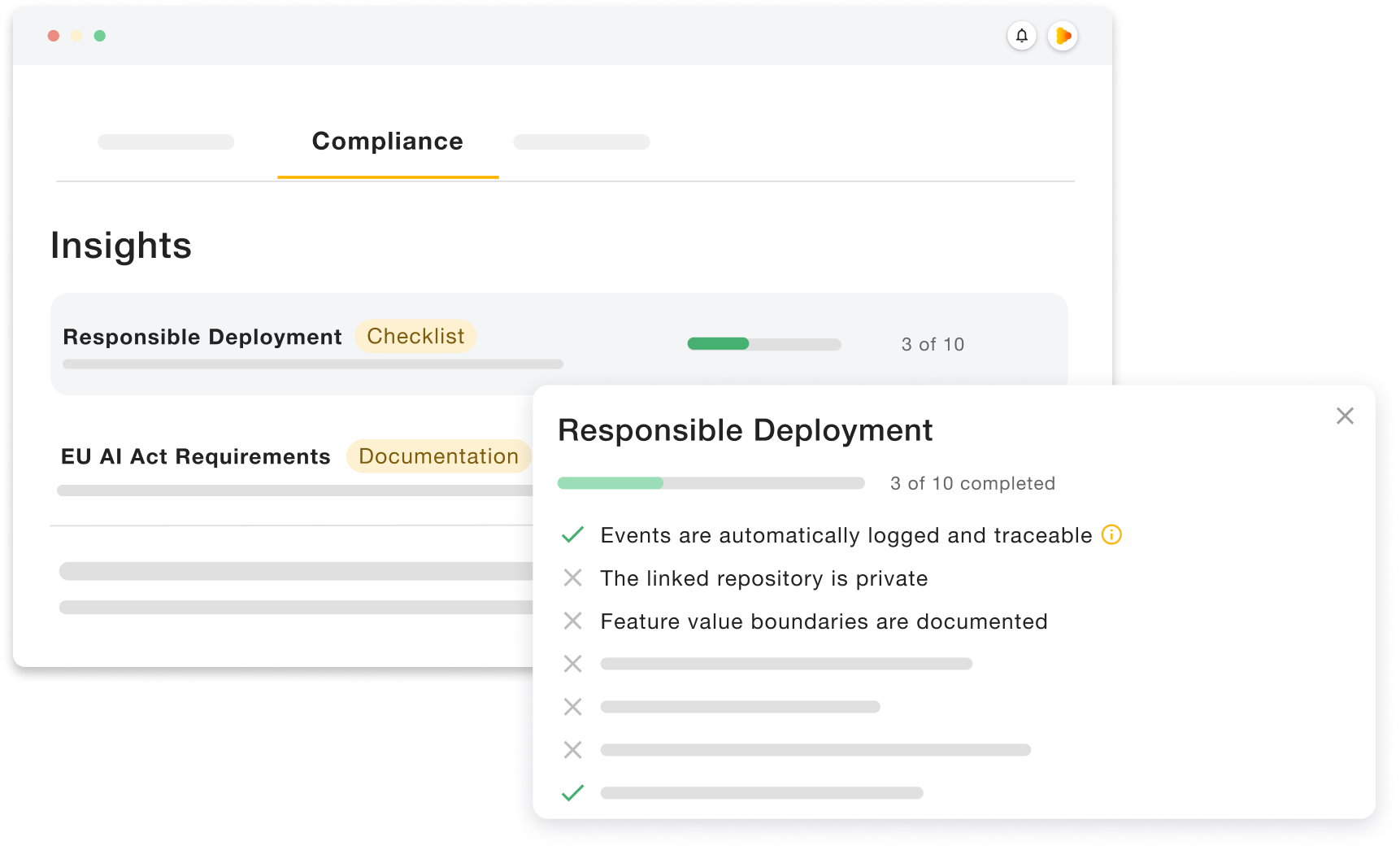

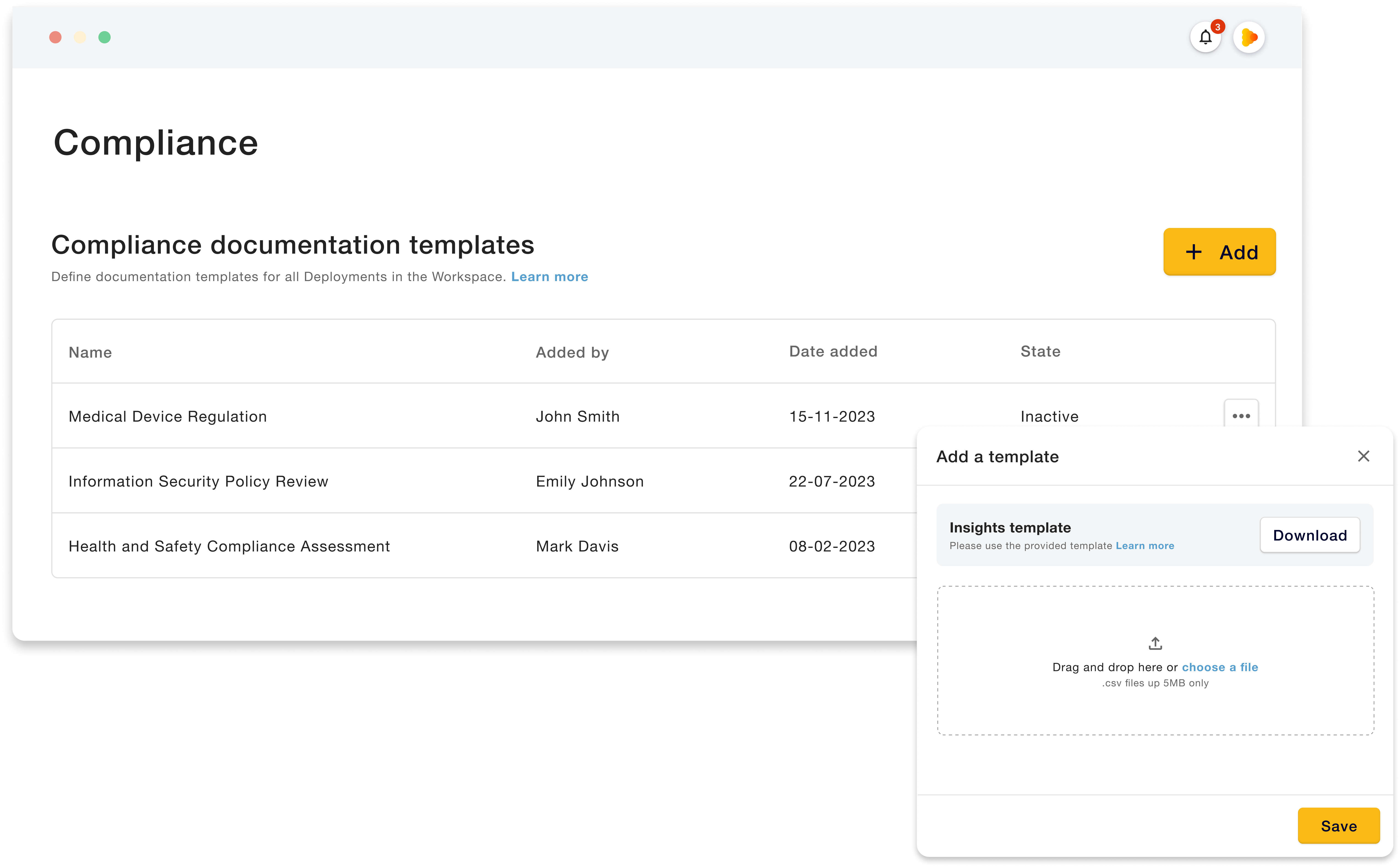

Stay on top of compliance processes

Not only do the features in Deeploy already help organizations meet essential requirements, but the platform also offers both standard and customizable compliance templates, enabling teams to verify that compliance requirements are fulfilled for every AI application within the organization.

While standard templates offer general guidance on high-level regulation, custom compliance templates allow teams to upload checklists tailored to any specific requirements that are important to the organization. For instance, teams can easily upload custom checklists to ensure AI systems meet the requirements of the Medical Device Regulation (MDR).

How to get started with Deeploy

Would you like to learn more about how you can take your first steps with Deeploy? Let one of our experts walk you through the platform and how it can be leveraged for your specific concerns, or start with a trial of our SaaS solution.